41-47 Point LLM Error Cut & 20% Unity Stock Dip: AI Tools Stir Industry Turmoil – US Finance/Aerospace & Developers in the Crosshairs

TL;DR

- Neurosymbolic AI Tool CodeLogician Closes 41-47 Point Reasoning Gap in Financial and Safety-Critical Systems

- Unity Unveils AI Beta at GDC to Enable Non-Coding Game Development

🛡️ CodeLogician Slashes LLM Reasoning Errors by 41-47 Points: Closing the Safety Gap in US Critical Systems

CodeLogician slashes LLM reasoning errors by 41–47 percentage points 🛡️, lifting correct inference from 41–47% for raw LLMs to 88%. Validated on a real-world benchmark and in US financial and aerospace systems, it addresses the 'reasoning gap' in LLM code verification. Proof time dropped from 3.2s to 1.7s, but that still falls short of the sub-second latency ultra-fast trading demands. For safety-critical industries (finance, aerospace) and their engineers: how soon could verified AI like this become non-negotiable for your critical systems?

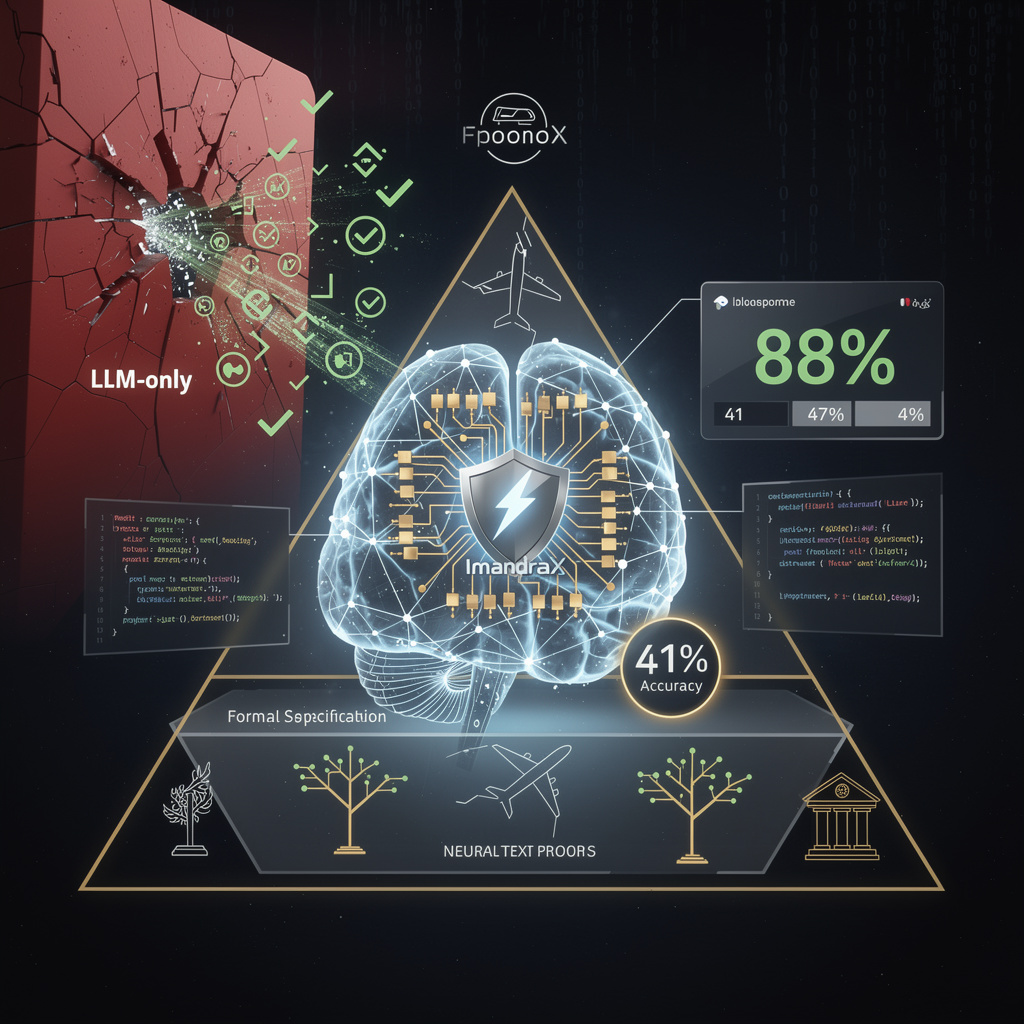

Imandra’s CodeLogician, a neurosymbolic AI tool paired with its industrial reasoning engine ImandraX, has closed a 41–47 percentage-point accuracy gap in mathematical reasoning for financial and safety-critical software, marking a breakthrough in verifying AI-generated code. By merging large language models (LLMs) with formal theorem proving, the tool delivers precise analysis of program behavior, and it has been deployed in U.S. financial trading platforms and aerospace verification pipelines since early February 2026.

How CodeLogician Closes the AI Reasoning Gap

CodeLogician works by augmenting LLMs: a 12-billion-parameter transformer model (fine-tuned on code and formal methods) automatically generates explicit formal specifications (first-order logic, or FOL) from Python/Java code snippets, preserving loop invariants and pre/post-conditions. These specs are fed into ImandraX, a hybrid SAT/SMT solver that reduces proof-search time from 3.2 seconds (LLM-only) to 1.7 seconds. Validation on code-logic-bench—a 1,200-snippet suite of real-world software tasks—showed CodeLogician achieving 88% correct inference, versus 41–47% for raw LLMs.
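To make "checking code against a formal specification" concrete, here is a minimal Python sketch of the idea. It is not CodeLogician's or ImandraX's API: the function `clamp_order_qty`, its pre/post-conditions, and the `bounded_check` helper are all hypothetical, and exhaustive enumeration over a small integer box stands in for the symbolic SAT/SMT proof search the article attributes to ImandraX.

```python
from itertools import product
from typing import Callable, Optional, Tuple

# Hypothetical code under analysis: clamp an order quantity to a risk limit.
def clamp_order_qty(qty: int, max_qty: int) -> int:
    if qty < 0:
        return 0
    if qty > max_qty:
        return max_qty
    return qty

# Hypothetical FOL-style spec expressed as Python predicates.
# Precondition:  max_qty >= 0
# Postcondition: 0 <= result <= max_qty, and result == qty when 0 <= qty <= max_qty
def pre(qty: int, max_qty: int) -> bool:
    return max_qty >= 0

def post(qty: int, max_qty: int, result: int) -> bool:
    in_bounds = 0 <= result <= max_qty
    keeps_valid_input = (not (0 <= qty <= max_qty)) or result == qty
    return in_bounds and keeps_valid_input

def bounded_check(
    fn: Callable[[int, int], int], lo: int = -50, hi: int = 50
) -> Optional[Tuple[int, int]]:
    """Return the first counterexample (qty, max_qty) in [lo, hi]^2, or None.

    A real SMT-backed prover reasons symbolically over all integers;
    this sketch only enumerates a finite box of inputs.
    """
    for qty, max_qty in product(range(lo, hi + 1), repeat=2):
        if pre(qty, max_qty) and not post(qty, max_qty, fn(qty, max_qty)):
            return (qty, max_qty)
    return None

# A buggy variant that forgets the lower bound of zero.
def clamp_order_qty_buggy(qty: int, max_qty: int) -> int:
    return min(qty, max_qty)

print(bounded_check(clamp_order_qty))        # None: spec holds on the box
print(bounded_check(clamp_order_qty_buggy))  # (-50, 0): result -50 violates 0 <= result
```

The point of the design is that the checker, not the LLM, has the final word: a model may propose the spec, but a violation is reported as a concrete counterexample rather than a plausible-sounding explanation.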

What Are CodeLogician’s Key Impacts?

- Error Reduction: Cuts mathematical reasoning errors by 41–47 percentage points (absolute), addressing a longstanding LLM limitation in exhaustive program analysis.

- Safety/Certification: Enables provable correctness for high-stakes systems (e.g., aerospace, finance), aligning with mandatory certification requirements.

- Market Shift: Drives “rational acceptance” of AI-augmented engineering, according to a January 28, 2026 industry analysis, as firms prioritize safety over skepticism.

- Competitive Pressure: Pushes rivals such as Anthropic’s Claude (which saw 5–10× quota spikes for reasoning tasks) and OpenAI’s Codex to integrate formal verification, which they currently lack.
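The headline figures are easiest to read as percentage points. A short worked check, using only the numbers reported above (88% correct inference for CodeLogician, 41–47% for raw LLMs, proof-search time falling from 3.2s to 1.7s); the helper names are illustrative:

```python
from typing import Tuple

def accuracy_summary(cl_acc: int, raw_acc: int) -> Tuple[int, int, int, float]:
    """Return (gap in points, raw error %, CodeLogician error %, relative error cut)."""
    gap_points = cl_acc - raw_acc   # absolute accuracy gain
    raw_err = 100 - raw_acc         # raw LLM error rate (%)
    cl_err = 100 - cl_acc           # CodeLogician error rate (%)
    return gap_points, raw_err, cl_err, (raw_err - cl_err) / raw_err

def relative_cut(before: float, after: float) -> float:
    """Fractional reduction from `before` to `after`."""
    return (before - after) / before

for raw in (41, 47):  # reported raw-LLM accuracy range on code-logic-bench
    gap, raw_err, cl_err, cut = accuracy_summary(88, raw)
    print(f"raw LLM {raw}%: +{gap} pts accuracy, errors {raw_err}% -> {cl_err}% ({cut:.0%} fewer)")

print(f"proof-search time reduced by {relative_cut(3.2, 1.7):.0%}")
```

Read this way, "41–47" is an absolute gain in accuracy. Relative to the raw LLMs' error rates (53–59%), errors fall by roughly 77–80%, while proof-search time falls by about 47%.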

Strengths, Weaknesses, Opportunities, and Threats

Strengths: Quantifiable error reduction, a seamless LLM-to-formal-proof pipeline, and validation on realistic benchmarks.

Weaknesses: Dependence on high-quality LLM outputs; proof times (1.7s) still exceed sub-second latency needs for ultra-low-latency trading.

Opportunities: Expansion into regulated sectors (medical devices, autonomous vehicles) requiring formal certification; integration with AI-accelerated hardware (e.g., GPU SAT solvers).

Threats: Rapid LLM improvements (e.g., GPT-5.2) could narrow the gap; regulators may favor open-source formal methods over proprietary stacks.

Short- and Long-Term Outlook for Neurosymbolic AI

- 0–12 Months: At least three U.S. hedge funds and one aerospace OEM are expected to pilot CodeLogician via ImandraX’s API; 8-bit LLM quantization is projected to halve the memory footprint while retaining >85% accuracy.

- 1–3 Years: Model compression and symbolic abstraction could push code-logic-bench accuracy above 90%, matching traditional theorem-provers for most industry code; standardized “neurosymbolic APIs” (e.g., Imandra’s “NeuroSpec”) may enable cross-toolchain adoption on cloud platforms.
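A back-of-the-envelope check of the "halve the memory footprint" projection. This assumes the 12-billion-parameter model described earlier currently stores weights in 16-bit precision (the article does not state the baseline) and ignores activations, KV cache, and quantization-scale overhead:

```python
def weight_memory_gb(num_params: int, bits_per_weight: int) -> float:
    """Approximate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9

PARAMS = 12_000_000_000  # 12B parameters, as stated in the article

fp16 = weight_memory_gb(PARAMS, 16)  # assumed baseline precision
int8 = weight_memory_gb(PARAMS, 8)
print(f"fp16: {fp16:.0f} GB, int8: {int8:.0f} GB, ratio: {int8 / fp16:.2f}")
# fp16: 24 GB, int8: 12 GB, ratio: 0.50
```

Halving holds only against a 16-bit baseline; starting from 32-bit weights (48 GB), 8-bit quantization would cut weight memory to a quarter.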

Closing the 41–47-point gap is not just a technical milestone; it is a regulatory inflection point. For sectors where AI failure puts lives or billions at risk, provable code is no longer optional. As CodeLogician scales, neurosymbolic tools will likely become non-negotiable for AI deployment in finance, aerospace, and medicine, forcing the industry to balance innovation with unassailable accountability.

🤖 Unity AI Game Tool: 20% Stock Plunge as Half of Developers Reject It – U.S. Creator Market in Balance

Unity’s stock has already taken a 20% dip amid AI competition (a fifth of its value gone in days 📉), and now its AI authoring beta faces developer pushback. The tool aims to bring ‘tens of millions’ of non-programmers to game creation, but 48–52% of developers view AI as a ‘net negative’, fearing skill loss and IP risks. Indie creators: would you skip learning to code for this AI, even if half your peers warn against it?

At the Game Developers Conference (GDC) 2026, Unity Technologies CEO Matthew Bromberg unveiled an AI-driven authoring tool aimed at letting tens of millions of non-programmers (designers, artists, and indie creators) build interactive games without writing C# code. Built on foundation models such as Stable Diffusion and FLUX, the beta seeks to dismantle traditional coding barriers and could change who is able to enter game development.

How Does the Unity AI Tool Actually Work?

The tool’s core is a natural-language prompt interface that generates Unity scenes, assets, and simple gameplay logic. It integrates foundation models (Stable Diffusion, FLUX, Bria, GPT-Image) through latent diffusion pipelines, with inference either offloaded to cloud GPU farms (NVIDIA H100-class hardware) or run on-device with int8 quantization for low-latency previews. Critical to accessibility, model weights are compressed to ≤2GB through 30% sparsity pruning and 4-bit quantization, small enough to run on most consumer PCs. A contextual graph links generated assets to Unity’s Entity Component System (ECS) and rendering pipelines (URP/HDRP), ensuring compatibility with existing workflows.
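The two compression steps named above can be illustrated on a toy weight vector. This is a minimal sketch assuming unstructured magnitude pruning and symmetric per-tensor 4-bit quantization; Unity has not published its actual scheme, and the function names here are hypothetical.

```python
from typing import List, Tuple

def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the smallest-magnitude fraction of weights (unstructured pruning)."""
    k = round(len(weights) * sparsity)  # number of weights to drop
    drop = set(sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]

def quantize_int4(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor 4-bit quantization: integers in [-8, 7] plus one float scale."""
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid a zero scale for all-zero input
    scale = max_abs / 7  # map the largest magnitude onto +/-7
    q = [max(-8, min(7, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from 4-bit integers."""
    return [v * scale for v in q]

w = [0.82, -0.05, 0.31, -0.67, 0.02, 0.44, -0.12, 0.9, -0.38, 0.07]
pruned = magnitude_prune(w, sparsity=0.30)  # 3 of 10 weights become 0.0
q, scale = quantize_int4(pruned)
print(pruned)
print(q, round(scale, 4))
print([round(x, 3) for x in dequantize(q, scale)])
```

Storing each surviving weight in 4 bits instead of 16 is a 4x reduction on its own; pruning then removes 30% of the weights, although a sparse format must also record their positions, so the real saving from pruning is smaller than 30%.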

What Impacts Will the Tool Have Immediately?

- Creator Accessibility: Targets “tens of millions” of non-programmers, building on 36% of surveyed GDC developers already using generative AI daily.

- Economic Pressure: Unity’s stock fell 20% around its Q4 2025 earnings, following Google’s Project Genie, highlighting how sensitive the market is to AI competition.

- Competitive Disruption: Unlike Unreal’s AAA-focused “MetaHuman + AI-Blueprint” (30% market share) or Godot’s open-source plugins (12% indie share), Unity’s tool emphasizes low-code, cross-platform (mobile-first) creation, building on Unity’s position as the primary engine for 45% of developers.

- Skill Shift Fears: 48–52% of developers view AI as a net negative, worrying that it could erode C# and systems-engineering expertise.

What Risks Does Unity Face—and How Is It Fixing Them?

- IP Exposure: Generated assets might infringe copyrighted works in the training data. Mitigation: Real-time watermarking and provenance logs for every asset.

- Quality Variance: Diffusion art can produce inconsistent lighting/topology. Mitigation: Post-generation refinement (auto-UV unwrap, mesh decimation) before ECS import.

- Skill Erosion: Over-reliance on AI could devalue coding skills. Mitigation: “Hybrid Mode” requires a final script review for performance-critical components.

- Infrastructure Costs: Spikes in cloud GPU usage could push operating expenses (OPEX) above $5M per quarter. Mitigation: Tiered pricing, edge caching of popular models, and optional on-device inference for low-resolution previews.
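The provenance-log mitigation can be pictured as an append-only, hash-chained record per generated asset. The sketch below is an assumption about how such a log might work, not Unity’s implementation; the class `ProvenanceLog`, its record fields (`asset_sha256`, `prompt`, `model`, `prev_hash`), and the model name are hypothetical, and watermarking is not covered.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

@dataclass
class ProvenanceLog:
    """Hypothetical append-only log where each entry commits to the previous entry's hash."""
    entries: List[dict] = field(default_factory=list)

    def append(self, asset_bytes: bytes, prompt: str, model: str) -> dict:
        prev_hash = self.entries[-1]["entry_hash"] if self.entries else GENESIS
        record = {
            "asset_sha256": hashlib.sha256(asset_bytes).hexdigest(),
            "prompt": prompt,
            "model": model,
            "prev_hash": prev_hash,
        }
        # Hash a canonical JSON encoding so the digest is reproducible.
        payload = json.dumps(record, sort_keys=True).encode("utf-8")
        record["entry_hash"] = hashlib.sha256(payload).hexdigest()
        self.entries.append(record)
        return record

    def verify(self) -> bool:
        """Recompute every hash; editing any earlier entry breaks the chain."""
        prev_hash = GENESIS
        for rec in self.entries:
            body = {k: v for k, v in rec.items() if k != "entry_hash"}
            if body["prev_hash"] != prev_hash:
                return False
            payload = json.dumps(body, sort_keys=True).encode("utf-8")
            if hashlib.sha256(payload).hexdigest() != rec["entry_hash"]:
                return False
            prev_hash = rec["entry_hash"]
        return True

log = ProvenanceLog()
log.append(b"<mesh bytes>", "low-poly pine tree", "diffusion-checkpoint-v1")
log.append(b"<texture bytes>", "mossy stone texture", "diffusion-checkpoint-v1")
print(log.verify())                  # True
log.entries[0]["prompt"] = "edited"  # tamper with history
print(log.verify())                  # False
```

Chaining each entry to its predecessor means an auditor only needs the latest hash to detect after-the-fact edits anywhere in the asset history.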

What’s Next for Unity’s AI Bet?

- Short-Term (Q2–Q4 2026): 10k beta invites; 85% of testers report successful scene generation in ≤3 minutes. Telemetry shows an average latency of 1.9s and a 3% asset-import error rate. The Unity Asset Store will add 150 AI-prompt templates, with quarterly diffusion-checkpoint updates via Stability AI.

- Mid-Term (2027): If adoption hits “tens of millions,” Unity could capture >25% of the $8B global low-code game-creation market by 2030.

- Long-Term (2030+): Academic curricula may shift to prompt engineering and AI-assisted design, reducing entry-level C# demand by ~15% while boosting demand for AI-pipeline engineers. Regulatory pressure could emerge—EU/U.S. copyright frameworks may require AI-generated asset labeling, altering Unity’s metadata pipelines.

Unity’s AI beta is more than a tool; it’s a gamble on democratizing game creation. By pairing established diffusion models with Unity-specific compression, it aims to expand the creator base while navigating investor skepticism, developer doubt, and technical hurdles. If it succeeds, the tool could redefine who makes games, how they’re made, and what skills define the next generation of developers—reshaping the global game industry’s talent and market for years to come.

In Other News

- MiniMax M2.5 Surpasses SOTA in Coding, Achieves 80.2% SWE-Bench Verified Score

- Palantir Faces Legal Action in Germany Over Surveillance Data Linking

- MCP Protocol Replaces REST for AI Agents, Enabling Self-Describing Tools and Secure Local File Access

- Steam Deck OLED Sold Out Amid AI-Driven Memory Crisis
