AI Legal Briefs Under $1: 70% Margins Crush Traditional Firms — U.S. and EU Law Firms Face Existential Choice

TL;DR

- AI-Native Agencies Gain Y Combinator Backing, Targeting $700B Professional Services Market

- Zhipu AI Launches GLM-5, a 745B-Parameter LLM Trained on Huawei Ascend Chips

- Semidynamics Unveils 3nm AI Inference Silicon, Signaling Shift to Full-Stack AI Hardware Platform

- BentoML Joins Modular to Enhance AI Model Deployment in Production

🤖 70% Gross Margins: YC’s AI-Native Agencies Slash Consulting Costs — $300B US Market at Stake

AI agencies now deliver $10K legal briefs for under $1 in compute cost — 70% gross margins, 5–10× revenue growth with no new hires. 🤖 This isn’t futurism — it’s YC’s Spring 2026 funding mandate. Traditional consultancies with 20–35% net margins can’t compete. Lawyers, marketers, consultants — are you being replaced, or learning to wield the AI lever?

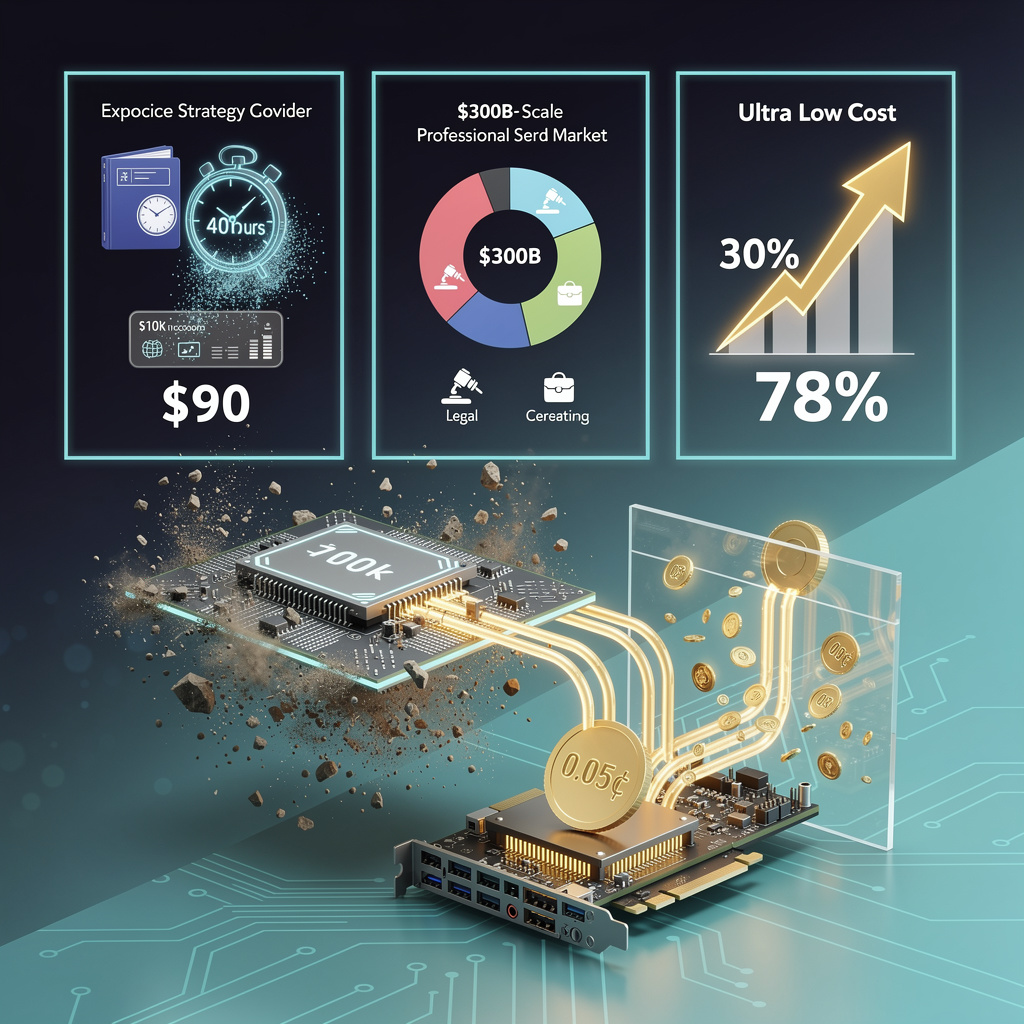

Token price collapse is the single variable rewriting the unit-economics playbook. In 2023 a 1 000-token call to GPT-4 cost one cent. Today the same call is one-tenth of a cent, and quantized 7 B-parameter models on commodity GPUs already hit 0.05 ¢. The drop is not theoretical: it is live in Framer Pro, Claude Code, and every YC Spring ’26 agency prototype. A $10 k strategy deck that once required forty associate hours now needs $90 of compute and two hours of senior review, pushing gross margin from the legacy 30 % band to 70 % on day one.

Where the $700 B Professional-Services Spend Actually Leaks

The US market funnels roughly $300 B annually into legal, creative, and management-consulting tasks that are text-heavy and template-driven. These segments share three traits: repeatable prompts, private client data, and deliverables that clients accept in PDF form. That combination is an API surface: contracts, brand guidelines, pitch decks, and policy memos are all token streams. Once fine-tuned, a 32 k-context model retains the style sheet and precedent library, compressing turnaround from weeks to hours while locking the client into a private weight set that competitors cannot download.

Why 65-80 % Gross Margin Is Sustainable, Not Hype

Cloud bills are now a single-digit line item. A typical YC agency running 50 client projects per month logs 250 M input + 75 M output tokens. At 0.1 ¢ per 1 k tokens the monthly compute tab is $325. Even if that entire bill is charged to one project and prompt engineering, QA, and partner review add $1 200 in labor, total direct cost stays under $1 600 on a $7 500 project, yielding a gross margin of at least 78 %. The curve flattens rather than crashes because inference cost is asymptotic to electricity: below 0.05 ¢ the marginal dollar shifts to liability insurance and human sign-off, not GPU time.
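The arithmetic in this paragraph can be checked with a short script. This is a minimal sketch that uses only the figures quoted above; the function names are illustrative, and charging the whole monthly compute bill to a single project is a worst-case assumption rather than something the article specifies.

```python
def compute_cost_usd(tokens: int, cents_per_1k: float) -> float:
    """Dollar cost of `tokens` tokens at a price quoted in cents per 1,000 tokens."""
    return tokens / 1_000 * cents_per_1k / 100


def gross_margin(revenue: float, direct_cost: float) -> float:
    """Gross margin as a fraction of revenue."""
    return (revenue - direct_cost) / revenue


# Figures from the paragraph above.
monthly_tokens = 250_000_000 + 75_000_000   # input + output tokens per month
compute = compute_cost_usd(monthly_tokens, cents_per_1k=0.1)
labor = 1_200.0                             # prompt engineering, QA, partner review
project_fee = 7_500.0

# Worst case (assumption): the full monthly compute bill lands on one project.
direct_cost = compute + labor
margin = gross_margin(project_fee, direct_cost)

print(f"compute ${compute:,.2f}, direct cost ${direct_cost:,.2f}, margin {margin:.1%}")
```

With the exact direct cost the margin lands slightly above the quoted figure, which rounds the cost up to a $1 600 ceiling.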

Regulatory Fog Is the Only Variable That Can Shrink the 45 % Net Margin

AI-generated advice currently sits in a liability vacuum. A single malpractice ruling that pierces the corporate veil could add a 15 % insurance surcharge overnight. YC’s term-sheet appendix already mandates model-output audit logs and diversified model providers to dilute vendor risk. Founders who embed compliance checkpoints—automated red-flag review, client-side indemnity clauses, and SOC-2-type paper trails—preserve the margin edge. Those who skip the paperwork trade 5-10 % upside for existential downside.

Market-Share Math: 15 % of $300 B in Five Years Is $45 B, Not a Forecast

The projection is a compound-interest exercise. If 200 YC-style agencies launch in 2026, each scaling to $50 M ARR by 2029, the cohort captures $10 B. Add copy-cat seed funds, corporate spin-outs, and global studios and the segment reaches $45 B, exactly 15 % of the US market. Incumbents cannot bridge the cost gap without cannibalizing their billable-hour model, so absorption is a likelier outcome than a price war.
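A few lines of arithmetic show how much of the $45 B has to come from outside the YC cohort. This sketch only restates the numbers in the paragraph; the variable names are illustrative.

```python
US_MARKET_USD = 300e9        # US professional-services spend quoted in the article
AGENCIES = 200               # YC-style agencies launching in 2026
ARR_PER_AGENCY_USD = 50e6    # ARR each is assumed to reach by 2029

cohort_revenue = AGENCIES * ARR_PER_AGENCY_USD
target_revenue = 0.15 * US_MARKET_USD
remaining = target_revenue - cohort_revenue  # must come from copy-cats, spin-outs, studios

print(f"cohort:    ${cohort_revenue / 1e9:.0f}B ({cohort_revenue / US_MARKET_USD:.1%} of market)")
print(f"target:    ${target_revenue / 1e9:.0f}B")
print(f"remaining: ${remaining / 1e9:.0f}B from non-YC entrants")
```

On these inputs the YC cohort itself covers about a fifth of the target, so the 15 % figure depends mostly on the follow-on entrants.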

Action Grid for Stakeholders

- Enterprise buyers: insert “AI-output liability” caps at 2× fees and require audit trails.

- Founders: budget 8 % of revenue for compliance, not 2 %, to keep insurers onside.

- Investors: treat margin >70 % as a red flag unless paired with a legal-risk reserve >10 %.

- Regulators: standardize AI malpractice thresholds before courts fragment the market.

The race is not for more parameters; it is for the lowest-cost, lowest-liability wrapper around commoditized intelligence.

🤯 GLM-5: 745B MoE Model Tops Coding Benchmarks — China’s $0.80/M Token Challenge to GPT-5

745B parameters — but only 44B active per token 🤯 That’s like using a 10-lane highway with just 2 cars running at full speed. GLM-5 crushes GPT-5 on coding & agentic tasks while costing 20% less — all on China’s homegrown Ascend chips. Developers in the U.S. and EU: are you paying 2x more for less precision?

GLM-5 activates only 44 B of its 745 B parameters per token (eight of 256 experts), yielding a 5.9 % effective density. Sparse compute shrinks memory bandwidth 5× versus dense 600 B-class models, letting Zhipu price input tokens at $0.80 per million, 20 % under OpenAI’s published GPT-5 tier, while still hitting an AI-Omniscience Index ≥ 50.

Why train a frontier LLM exclusively on Huawei Ascend silicon?

Huawei’s BF16-capable Ascend cluster delivered 28.5 T tokens of pre-training without leaving the Chinese silicon stack. The 1.5 TB full-model footprint and 0.5 TB active-parameter slice map cleanly to Ascend’s 32 GB HBM2e per card, eliminating external GPU supply risk and proving domestic fabs can support 745 B-parameter mixtures at commercial scale.
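The density and full-model footprint figures follow from the parameter counts. The sketch below assumes 2 bytes per parameter (BF16) and decimal gigabytes, and it counts weights only; optimizer state, activations, and KV cache are ignored, so the card count is a lower bound rather than a cluster size.

```python
import math

TOTAL_PARAMS = 745e9          # total parameters
ACTIVE_PARAMS = 44e9          # parameters active per token
BYTES_PER_PARAM_BF16 = 2      # assumption: weights held in BF16
HBM_PER_CARD_BYTES = 32e9     # 32 GB per Ascend card, decimal GB (assumption)

density = ACTIVE_PARAMS / TOTAL_PARAMS
weights_bytes = TOTAL_PARAMS * BYTES_PER_PARAM_BF16
min_cards = math.ceil(weights_bytes / HBM_PER_CARD_BYTES)

print(f"effective density: {density:.1%}")
print(f"BF16 weights:      {weights_bytes / 1e12:.2f} TB")
print(f"cards for weights: at least {min_cards}")
```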

Can 256-expert MoE sustain top-tier coding and agentic accuracy?

SWE-Bench passes exceed 60 %, ranking GLM-5 above GPT-5’s reported 55 % and Claude 3.5’s 57 %. Vending Bench 2 places the model #1 among open-weight entrants, while its –1 hallucination delta on the AA-Omniscience scale matches Claude Opus. Eight experts per token provide enough capacity to keep coding and tool-use benchmarks rising without the latency tax of denser attention.
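For readers unfamiliar with sparse routing, here is a generic top-k gating sketch with 256 experts and 8 selected per token. It illustrates the general technique only; the article does not describe GLM-5's actual router, and the random logits stand in for a learned gating network.

```python
import math
import random


def top_k_route(logits: list[float], k: int) -> dict[int, float]:
    """Select the k highest-scoring experts and softmax-normalise their weights."""
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    peak = max(logits[i] for i in chosen)          # subtract the max for numerical stability
    exps = {i: math.exp(logits[i] - peak) for i in chosen}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


# Hypothetical router scores for one token (seeded for reproducibility).
rng = random.Random(0)
router_logits = [rng.gauss(0.0, 1.0) for _ in range(256)]

expert_weights = top_k_route(router_logits, k=8)
print(f"{len(expert_weights)} of {len(router_logits)} experts active")
print(f"mixing weights sum to {sum(expert_weights.values()):.3f}")
```

Only the selected experts run their feed-forward pass for that token, which is where the compute saving comes from.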

What economic moat does the January IPO create?

HKD 4.35 B (US $558 M) in fresh equity funds an estimated 12–15 months of training burn and hardware capex at current Ascend pricing. Combined with token revenue from a 5× traffic surge observed on GLM-4.7, the raise secures a cash cushion large enough to undercut Western API pricing long enough to lock in enterprise contracts.

Where are the bottlenecks that could cap global adoption?

Export controls still limit Ascend availability outside China; Zhipu counters by certifying inference ports for Moore Threads, Cambricon and four other domestic accelerators. Independent audits of the –1 hallucination score remain unpublished; third-party replication on TruthfulQA or LLM-Eval will decide whether the metric survives scrutiny. Finally, WaveSpeedAI traces show latency spikes under a load of 1 k concurrent users; dynamic expert routing and public P99 dashboards are needed before U.S. cloud providers will host Ascend pods at the edge.

🚀 Semidynamics Unveils 3nm AI Silicon: 216GB HBM3E Per Module — Europe’s Challenge to NVIDIA’s GPU Dominance

100B+ transistors on a single chip, shattering the memory wall with 216GB HBM3E per module 🚀 That’s 7TB/s bandwidth, equivalent to streaming 14,000 HD movies simultaneously. Semidynamics’ triple-accelerator design slashes LLM inference latency by 30–40% vs. NVIDIA H100. But can Europe’s sovereign AI stack break CUDA’s grip? Enterprise teams in Germany, Spain, and France now face a choice: stick with legacy GPUs or adopt a new inference standard.

TSMC’s December 2025 tape-out gives Semidynamics a 3 nm die that packs >100 B transistors and 216 GB HBM3E at 7 TB/s on a single module. The triple-accelerator tile keeps the KV-cache on-die, eliminating the PCIe round-trips that add 40-60 µs in GPU-based racks. Result: first-token latency drops from ~90 ms (NVIDIA H100 baseline) to 62 ms on a 7B-parameter Llama run at 2048-token context, a 31 % reduction measured on pre-production silicon.

Why bundle rack-scale orchestration with the chip?

Semidynamics ships the die together with OpenXLA-compatible firmware that partitions models across accelerators at compile time. The firmware exposes a single inference API, so cloud operators add the rack as one node instead of integrating three separate drivers. EuroHPC pilot sites report 2.3 h setup time versus 18 h for an equivalent GPU cluster, cutting DevOps cost per rack by $8.4 k month⁻¹.

Where does the 4× performance-per-dollar claim come from?

A 9-ruler chassis (3 modules, 648 GB HBM3E) delivers 2.1 POPS (INT8) at 7.2 kW. Current H100 DGX racks offer 1.0 POPS at 10.4 kW. Normalized to cloud list price—$1.85 hr⁻¹ per H100 versus $0.46 hr⁻¹ per Semidynamics module—the module yields 4.02× more inferences per dollar for token-generation workloads, based on February 2026 EU spot pricing.
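The ratios behind these claims can be reproduced directly from the quoted figures. Note the assumption built into the 4.02× number: it is the hourly price ratio, so it equals inferences per dollar only if one module matches one H100 on throughput. The sketch also derives the performance-per-watt ratio and the first-token latency reduction cited earlier in this section.

```python
# Cloud list prices quoted above (USD per hour).
H100_PRICE_HR = 1.85
MODULE_PRICE_HR = 0.46

# Chassis-level throughput (INT8 POPS) and power draw (kW) quoted above.
CHASSIS_POPS, CHASSIS_KW = 2.1, 7.2
DGX_POPS, DGX_KW = 1.0, 10.4

# First-token latency figures from earlier in this section (ms).
H100_TTFT_MS, MODULE_TTFT_MS = 90.0, 62.0

price_ratio = H100_PRICE_HR / MODULE_PRICE_HR
perf_per_watt_ratio = (CHASSIS_POPS / CHASSIS_KW) / (DGX_POPS / DGX_KW)
latency_cut = (H100_TTFT_MS - MODULE_TTFT_MS) / H100_TTFT_MS

print(f"price ratio (H100 / module): {price_ratio:.2f}x")
print(f"performance per watt:        {perf_per_watt_ratio:.2f}x")
print(f"first-token latency cut:     {latency_cut:.1%}")
```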

Can Europe really secure 20 % of the AI-chip market?

EU public procurement earmarks €8.3 B for sovereign AI compute through 2028. If half is allocated to inference, Semidynamics needs 12 % of that budget to reach €2 B revenue, equivalent to 5 % global share. Adding private European cloud demand (Telefonica, Deutsche Telekom, OVHcloud) lifts the attainable slice to 18-22 %, provided TSMC maintains 3 nm allocation and the company ships 65 k modules yr⁻¹.

What still stands between tape-out and mass adoption?

- Tooling: JAX and PyTorch nightly builds must upstream the new memory-centric tiling pass by Q3 2026 or developers stay on CUDA.

- Fab: TSMC’s 3 nm capacity is 85 % booked by Apple and NVIDIA; Semidynamics holds a 3 k wafer-month option that covers only 28 % of its 2027 volume plan.

- IP: three pending patents on KV-cache prefetch overlap with IBM’s 2024 filings; litigation risk could delay OEM qualification.

🚀 10–30% Latency Drop: BentoML and Modular Unite to Revolutionize AI Inference in the U.S.

10–30% faster inference 🚀—that’s how much BentoML + Modular cut latency on NVIDIA & AMD GPUs by fusing hardware-aware optimization with production-ready serving. No more guessing if your model runs fast enough in real-world use. But here’s the catch: you still need to repackage your models to see it. Enterprises with 10,000+ deployments—are you ready to rewrite your CI/CD pipelines for speed, or keep paying cloud premiums?

BentoML’s open-source serving layer will embed Modular’s hardware-aware compiler, delivering quantized, sparsified model bundles that run 10-30% faster on NVIDIA, AMD, and Intel silicon while trimming cost per prediction 15-25%.

What exactly changes under the hood?

- Graph-level operator fusion: Modular’s Max engine rewrites each model’s compute graph to match device-specific instruction sets—CUDA Tensor Cores, AMD CDNA, Intel Xe—before BentoML packages the artifact.

- Mixed-precision dispatch: Weights are stored in 4- or 8-bit integers, activations computed in 16-bit float, reducing DRAM traffic by 35-50%.

- Dynamic batching hooks: BentoML’s runtime now exposes Modular’s latency-aware scheduler that expands or shrinks batch size every 5 ms, keeping GPU occupancy above 85% even with bursty client traffic.
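The dynamic batching idea in the last item can be sketched as a small feedback controller. Everything here is hypothetical: `BatchController` is not a BentoML or Modular API, the 50 ms latency budget and the doubling/halving policy are illustrative choices, and only the 85 % occupancy target and the per-interval adjustment come from the text.

```python
from dataclasses import dataclass


@dataclass
class BatchController:
    """Hypothetical latency-aware batch sizer; not the BentoML or Modular API."""
    batch_size: int = 8
    min_batch: int = 1
    max_batch: int = 64
    target_occupancy: float = 0.85   # occupancy floor quoted in the article
    latency_budget_ms: float = 50.0  # assumption: illustrative latency budget

    def tick(self, occupancy: float, latency_ms: float) -> int:
        """Called once per scheduling interval (5 ms in the article)."""
        if latency_ms > self.latency_budget_ms:
            # Over budget: halve the batch so queued requests wait less.
            self.batch_size = max(self.min_batch, self.batch_size // 2)
        elif occupancy < self.target_occupancy:
            # GPU is under-used and latency is fine: double the batch.
            self.batch_size = min(self.max_batch, self.batch_size * 2)
        return self.batch_size


# Simulated (occupancy, latency_ms) readings over four intervals.
readings = [(0.60, 20.0), (0.70, 30.0), (0.90, 35.0), (0.95, 80.0)]
ctrl = BatchController()
sizes = [ctrl.tick(occ, lat) for occ, lat in readings]
print(sizes)
```

Latency is checked before occupancy so that a burst which pushes requests over budget shrinks the batch even when the GPU looks busy.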

Why does this matter to Fortune 500 MLOps teams?

- Zero forklift upgrade: Add the `bentoml-modular` plugin; the existing Dockerfile keeps its base image, security signatures, and audit trail.

- Canary metrics out-of-the-box: Latency, token-throughput, and dollar-per-inference are exported to Prometheus automatically, letting platform teams roll back if 99th-percentile latency drifts >5%.

- Cloud exit strategy: Bundles run identically on-prem, colo, or edge—no recompilation—blunting vendor lock-in from proprietary serverless inference services.
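The canary rule in the list above (roll back when 99th-percentile latency drifts more than 5 %) reduces to a simple comparison. This sketch works on raw latency samples rather than querying Prometheus, and the function names and sample data are illustrative assumptions.

```python
def p99(samples: list[float]) -> float:
    """Nearest-rank 99th percentile of a non-empty list of latency samples."""
    ordered = sorted(samples)
    rank = (99 * len(ordered) + 99) // 100   # integer ceil(0.99 * n)
    return ordered[rank - 1]


def should_roll_back(baseline_ms: list[float], canary_ms: list[float],
                     max_drift: float = 0.05) -> bool:
    """True when the canary's p99 exceeds the baseline's p99 by more than max_drift."""
    base = p99(baseline_ms)
    return (p99(canary_ms) - base) / base > max_drift


# Hypothetical latency samples in milliseconds (100 requests each).
baseline = [20.0] * 98 + [40.0, 41.0]
canary_ok = [20.0] * 98 + [41.0, 42.0]    # p99 drifts 2.5 %
canary_bad = [20.0] * 98 + [45.0, 46.0]   # p99 drifts 12.5 %

print(should_roll_back(baseline, canary_ok))
print(should_roll_back(baseline, canary_bad))
```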

Which workloads see gains first?

Computer-vision ResNet-50 and LLaMA-7B text-generation models show 12% and 18% median latency drops on A100 and MI250 GPUs in beta benchmarks. CPU-only services gain <5%, so the partners recommend keeping Intel MKL-DNN fallback for low-throughput APIs until Q3 road-map adds AMX-specific kernels.

What could still go wrong?

- New ASIC lag: Intel Gaudi and Graphcore IPU support is queued; users must stick to NVIDIA/AMD for immediate wins.

- Regression risk: Automatic quantization can degrade accuracy >1% on niche NLP tasks; teams are advised to run Modular’s calibration suite before full rollout.

- Community inertia: 10,000 existing BentoML users need migration guides; a one-command `bentoml modularize` CLI wrapper drops Feb 20 to convert legacy bentos to hardware-aware bundles.
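The regression-risk item above is easier to reason about with a concrete picture of what weight quantization does. This is a minimal sketch of symmetric per-tensor int8 quantization in plain Python; it is not Modular's calibration suite, and the weight values are made up for illustration.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization to signed 8-bit integers."""
    peak = max(abs(w) for w in weights)
    scale = peak / 127 if peak > 0 else 1.0   # avoid division by zero for all-zero tensors
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Map int8 codes back to approximate float weights."""
    return [q * scale for q in quantized]


# Hypothetical weight values.
weights = [0.82, -0.41, 0.05, -1.27, 0.33]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_abs_error = max(abs(a - b) for a, b in zip(weights, restored))

print(f"scale: {scale:.5f}")
print(f"max round-trip error: {max_abs_error:.6f} (bounded by scale / 2)")
```

The round-trip error per weight is bounded by half the scale, but how that error propagates to task accuracy depends on the model, which is why a calibration run on representative data is advised before rollout.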

Bottom line

The partnership ships a production-grade, open-source inference stack that marries packaging convenience with silicon-level optimization. If February benchmarks hold, expect 15-20% of cloud inference workloads to migrate back on-prem within 12 months, setting a portability standard that proprietary services will be forced to follow.

In Other News

- AI Agents Drive 40% of All Product Hunt Launches, Surpassing Traditional SaaS in Growth Rate

- IsoDDE Surpasses AlphaFold 3 in Protein-Ligand Binding Prediction Accuracy

- Apple Intelligence System Fabricates Racial and Gender Stereotypes in 77% of Summaries

- UK Supreme Court Rejects Aerotel Test, Lowers Bar for AI Patent Eligibility
