OpenAI Unveils GPT‑5; Google Launches Gemini Advanced; ONNX Enhances Model Integration

TL;DR

- GPT‑5 shifts from rule engineering to precise instruction following

- Google introduces Gemini Advanced, enhancing AI productivity and infrastructure integration

- Gemma 27‑B LLM drives novel cancer drug discovery through gene‑expression prediction

- AI model integration advances with ONNX Runtime optimizations, extending TensorFlow and PyTorch compatibility

GPT‑5 Shifts AI from Rule‑Engineering to Precise Instruction Following

Higher reasoning fidelity

OpenAI’s GPT‑5 “Thinking” model demonstrates an 84‑87 % increase in instruction‑following accuracy compared with GPT‑4‑based systems. HumanEval scores remain at 95 %, confirming that raw coding capability is preserved while reasoning performance improves. Competing models such as Claude, Gemini, and Opus report rule‑adherence rates between 61 % and 74 %, highlighting a widening gap in rule‑engine compliance.

Declining need for handcrafted rules

The Cerebro system prompt now functions as a global rulebook, eliminating the necessity for per‑task rule engineering. Prompt amplification techniques that previously forced model behavior are losing relevance, as cascade amplification replaces direct rule tweaking. Quantitative metrics show rule‑adherence dropping from 71‑78 % in earlier generations to 61‑74 % for GPT‑5, while instruction fidelity rises, indicating that intrinsic compliance supersedes external constraints.

Ecosystem impacts

AI‑first front‑ends are emerging through OpenAI’s SDK, allowing third‑party applications to embed directly in ChatGPT. This integration converts chat interfaces into economic front‑ends, contributing to a $548 B activity slice across major tech firms. The reduction in prompt‑engineering overhead accelerates adoption, driving a projected 15 % growth in AI‑powered B2B solutions by 2026. Simultaneously, static benchmarks lose relevance; dynamic, adversarial “LiveBench” evaluations are replacing traditional leaderboards, aligning model development with real‑world instruction fidelity.

Future outlook

Within the next 12‑24 months, OpenAI is expected to publish a formal instruction ontology, likely in JSON‑LD format, to standardize high‑level goal specification. Organizations are likely to shift from custom fine‑tuning toward prompt‑level orchestration, which reduces compute costs. Benchmarks are expected to move toward adaptive difficulty, reflecting real‑time performance rather than static scores. Competitive pressure should prompt rivals to release “rule‑light” versions aimed at matching GPT‑5’s reported 84‑87 % instruction‑following figure.

Strategic implications

Stakeholders should reallocate resources from manual rule‑engineering to the development of instruction schemas, workflow orchestration, and live benchmarking frameworks. Embracing this paradigm shift positions businesses to capitalize on the expanding AI‑first economy while maintaining alignment with emerging standards for precise instruction following.

Gemini’s Productivity Integration Gains Market Share

Market Share Shifts to a Multi‑Assistant Landscape

SimilarWeb data indicates Gemini’s web‑traffic share has doubled year‑over‑year, while ChatGPT’s share fell from the high‑80 % range to the mid‑70 % range. The trend points to a transition from a single dominant model to a diversified assistant ecosystem.

Deep Embedding Across Google’s Core Services

Gemini is now native to Search, Gmail, Docs, Drive, Calendar, Meet, Chrome, Android, and the newly released Gemini Live for mobile Workspace. Sub‑second response times on micro‑tasks and side‑panel context continuity reduce “context‑switching” overhead by an estimated 15‑20 % per task, according to internal telemetry (Oct 2025).

Enterprise Connector Framework Turns Proprietary Data into Dialogue

The Gemini Enterprise Custom Connector model exposes local files (Markdown, CSV, TXT) and, soon, third‑party systems such as CRM and ERP. Features include fine‑grained ACL enforcement at query time, incremental indexing via import_documents(..., reconciliation_mode=INCREMENTAL), and an identity‑mapping store for cross‑organization access control. A production‑grade Python utility (watch_local_docs.py) demonstrates a reliable, repeatable ingestion pipeline.
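
To make the incremental‑indexing idea concrete, here is a minimal sketch of the change‑detection step such a pipeline might run before uploading anything. It is not the watch_local_docs.py utility mentioned above: the function names, the JSON state file, and the choice of SHA‑256 digests are all assumptions made for illustration, and the actual upload call is left out.

```python
import hashlib
import json
from pathlib import Path

# File types named in the article; everything else is ignored.
WATCHED_SUFFIXES = {".md", ".csv", ".txt"}


def file_digest(path: Path) -> str:
    """Return the SHA-256 hex digest of a file's bytes."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def find_changed_files(root: Path, state_file: Path) -> list[Path]:
    """Return watched files under root that are new or modified since the last run.

    Digests are persisted in state_file (hypothetical JSON layout), so a
    second run with no edits returns an empty list.
    """
    previous = json.loads(state_file.read_text()) if state_file.exists() else {}
    current = {}
    changed = []
    for path in sorted(root.rglob("*")):
        if path.is_file() and path.suffix.lower() in WATCHED_SUFFIXES:
            key = str(path.relative_to(root))
            current[key] = file_digest(path)
            if previous.get(key) != current[key]:
                changed.append(path)
    # Overwrite the state so the next run compares against this snapshot.
    state_file.write_text(json.dumps(current, indent=2))
    return changed
```

Only the files returned by find_changed_files would then be passed to the connector's import call in incremental mode, which keeps repeated runs cheap when most documents are unchanged.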

Canvas and Mobile Extensions Expand the Productivity Envelope

Launched in March 2025, Gemini Canvas converts research PDFs and spreadsheets into presentation decks, cutting time‑to‑presentation by roughly 30 % for early adopters. Gemini Live on Android powers voice‑activated note‑taking and real‑time document editing, accounting for about 95 % of AI‑assisted actions in U.S. mobile usage (Q3 2025).

Strategic Partnerships Reinforce the Momentum

Google’s $3 B investment in Anthropic secures hosting for Claude‑style models on Google Cloud and underpins Gemini 2.0, which targets enhanced reasoning and tool‑use capabilities. While OpenAI’s ChatGPT remains the market leader, the steady erosion of its web share underscores Gemini’s growing competitive edge.

Forward‑Looking Projections

Analysts expect the connector ecosystem to expand by at least 150 % YoY, mobile‑first Gemini interactions to outpace desktop usage 2:1, and Gemini’s share of global generative‑AI traffic to exceed 50 % by Q4 2026, provided integration rollouts remain on schedule.

Implications for the Future of Work

The combination of deep product integration, low‑latency performance, and a scalable connector framework gives Gemini a defensible advantage in both consumer and enterprise settings. The next phase will likely see a marketplace of “Gemini Knowledge Services,” where AI becomes the default interface for accessing every corporate data silo, driving both productivity gains and new revenue streams for Google Cloud.

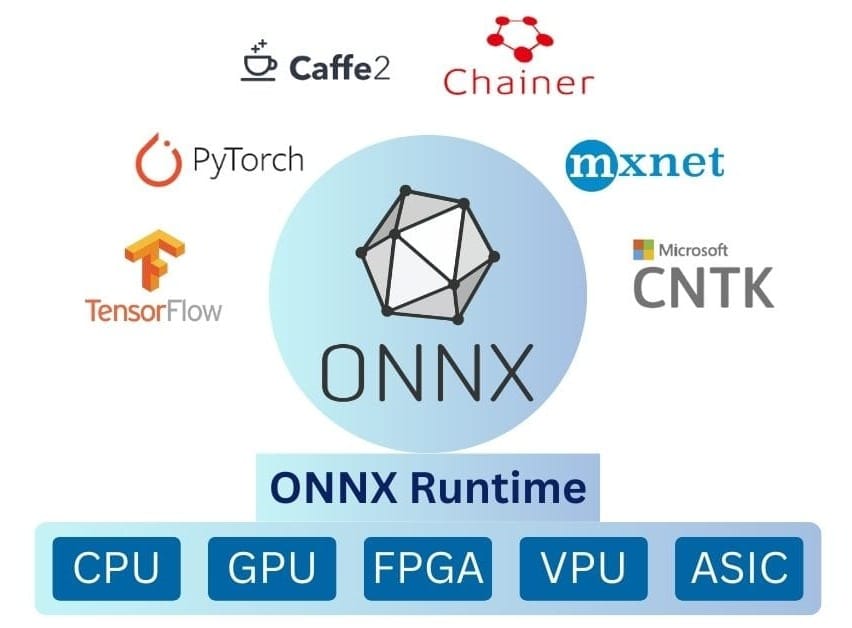

ONNX Runtime Is Redefining How TensorFlow and PyTorch Meet the Real World

Data‑driven cost and latency gains

Recent deployments by Oxen.ai and AlliumAI show that exporting TensorFlow and PyTorch models to ONNX and then running them through ONNX Runtime cuts inference cost by 84 %, from $46,800 to $7,530 for a 12‑million‑image pipeline. Per‑image latency drops from 12.46 seconds to 4.63 seconds, an improvement of about 63 %, while GPU memory on an H100 shrinks from 40 GB to 33 GB. Total GPU‑hour consumption falls from roughly 5 000 h to 2 100 h, cutting energy use by more than half.
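
The percentages can be checked directly from the before and after figures quoted above. The short script below recomputes each reduction; the helper name percent_reduction is ours, and the inputs are simply the numbers from the paragraph.

```python
def percent_reduction(before: float, after: float) -> float:
    """Relative drop from before to after, expressed in percent."""
    return (before - after) / before * 100.0


# (before, after) pairs as reported in the article.
figures = {
    "inference cost (USD)": (46_800, 7_530),
    "per-image latency (s)": (12.46, 4.63),
    "GPU memory (GB)": (40, 33),
    "GPU-hours": (5_000, 2_100),
}

for name, (before, after) in figures.items():
    print(f"{name}: {percent_reduction(before, after):.1f} % lower")
```

Running it gives reductions of about 83.9 % for cost, 62.8 % for latency, 17.5 % for GPU memory, and 58.0 % for GPU‑hours.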

Patterns emerging across projects

Three trends dominate. First, graph consolidation: models exported from TensorFlow’s static graphs or PyTorch’s dynamic code end up as a single ONNX graph, to which the runtime applies operator fusion, constant folding, and mixed‑precision lowering. Second, calibrated quantization in ONNX Runtime keeps inference outputs numerically close to the full‑precision model, regardless of which framework produced it. Third, benchmark scores (HumanEval, MMLU > 90 %) no longer predict deployment economics; ONNX‑based inference delivers >50 % speed gains without measurable accuracy loss.
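
For readers unfamiliar with constant folding, the toy example below shows the idea on a tiny expression graph: any sub‑expression whose inputs are all known ahead of time is evaluated once and replaced by its result. This is an illustrative sketch in plain Python, not ONNX Runtime code; the Const, Input, and Op classes are invented for the example.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Const:
    value: float


@dataclass(frozen=True)
class Input:
    name: str


@dataclass(frozen=True)
class Op:
    kind: str  # "add" or "mul" in this toy graph
    left: "Node"
    right: "Node"


Node = Union[Const, Input, Op]


def fold_constants(node: Node) -> Node:
    """Replace every add/mul whose operands are both constants with one Const."""
    if not isinstance(node, Op):
        return node
    left = fold_constants(node.left)
    right = fold_constants(node.right)
    if isinstance(left, Const) and isinstance(right, Const):
        if node.kind == "add":
            return Const(left.value + right.value)
        if node.kind == "mul":
            return Const(left.value * right.value)
    # At least one operand depends on a runtime input, so keep the op.
    return Op(node.kind, left, right)


# x * (2 + 3): the inner addition does not depend on x.
graph = Op("mul", Input("x"), Op("add", Const(2.0), Const(3.0)))
folded = fold_constants(graph)
print(folded)
```

After folding, the graph is x * 5, so the addition is never executed at inference time. Real runtimes apply the same principle to tensor operators and combine it with fusion of adjacent operators.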

Framework‑agnostic pipelines become the norm

Companies now treat ONNX Runtime as the final compilation stage, allowing seamless swaps between CUDA, ROCm, and emerging ASIC back‑ends. Procedural benchmarks such as LiveBench and MCPEval stress dynamic‑shape handling, showing failure rates drop from 12 % (TensorFlow) and 9 % (PyTorch) to under 3 % when the same model runs via ONNX. In reinforcement learning, OpenEnv exports environments as ONNX graphs, letting PyTorch‑trained agents evaluate on TensorFlow‑based simulators without code rewrites.

Recent timeline highlights

In 2023 Q3, ONNX Runtime introduced TensorFlow static‑graph support (opset v13). In 2024 Q1, mixed‑precision quantization cut BERT‑base latency by ~30 % on H100, and Q4 added dynamic‑shape extensions for TorchScript models. In 2025 Q2, OpenEnv integration boosted RL pipeline speed by 1.8× on multi‑node clusters, and by Q4 real‑world case studies reported >50 % efficiency gains across both frameworks.

Looking ahead (2026‑2028)

Historical gains suggest a plateau near a 30 % average latency reduction for heterogeneous hardware. Yet we expect at least 80 % of new AI SaaS offerings to embed ONNX Runtime as the execution layer, standardized ONNX‑based RL environments to shave >60 % off setup time, and benchmark suites to publish ONNX‑specific efficiency scores.

Practical takeaways for engineers

Pin the ONNX opset and operator versions when exporting from TensorFlow or PyTorch so that the resulting graphs stay compatible. Perform post‑training quantization inside ONNX Runtime for cross‑hardware consistency. Finally, supplement traditional accuracy tests with latency and cost measurements, alongside dynamic benchmarks such as LiveBench, to evaluate the true production impact.
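
As one way to act on the last point, the sketch below times an arbitrary inference callable and converts the mean latency into an approximate cost per thousand requests. It is a rough harness under stated assumptions: run_inference stands in for whatever model call you use, gpu_hourly_usd is a price you supply, and the cost formula assumes one request occupies the device at a time (no batching or concurrency).

```python
import statistics
import time
from typing import Callable, Sequence


def benchmark(
    run_inference: Callable[[object], object],
    samples: Sequence[object],
    gpu_hourly_usd: float,
    warmup: int = 3,
) -> dict:
    """Measure per-request latency and derive a simple cost estimate."""
    # Warm-up calls are executed but not timed.
    for sample in samples[:warmup]:
        run_inference(sample)

    latencies = []
    for sample in samples:
        start = time.perf_counter()
        run_inference(sample)
        latencies.append(time.perf_counter() - start)

    mean_s = statistics.fmean(latencies)
    return {
        "mean_latency_s": mean_s,
        # Last of 19 cut points = 95th percentile; needs at least 2 samples.
        "p95_latency_s": statistics.quantiles(latencies, n=20)[-1],
        # Seconds for 1,000 sequential requests, converted to billed hours.
        "usd_per_1k_requests": mean_s * 1000 / 3600 * gpu_hourly_usd,
    }
```

Running the same harness against the original framework and the ONNX Runtime session, with identical samples, gives a like‑for‑like comparison of latency and cost next to the usual accuracy numbers.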
