OpenAI GPT‑5 Claims vs GPT‑4 Retrieval: AI’s Widespread Impact on Jobs, Data Centers, Windows

GPT‑5 vs. GPT‑4: Retrieval or Creation?

OpenAI announced that GPT‑5 “surfaced” solutions to ten Erdős problems, while GPT‑4 was credited with locating complete proofs for ten other problems via massive query batches. A review by Thomas Bloom, Mark Sellke, and Sébastien Bubeck confirms that solutions to the ten “solved” problems already existed in the published literature: GPT‑5 acted as a high‑throughput retrieval engine, not a generator of novel mathematics.

Both claims rely on the same underlying capability: large‑scale literature mining. The divergence lies in marketing language (“solved” vs. “retrieved”). This pattern illustrates a broader risk: headline‑driven narratives can exaggerate LLM creativity, prompting premature expectations from investors and the public.

Key takeaway: rigorous, community‑driven audits are now essential for any AI‑generated claim of a scientific breakthrough.
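The difference between retrieval and creation can be made concrete with a toy ranker. The sketch below is a minimal bag‑of‑words cosine‑similarity search in Python; it is not OpenAI's pipeline, and the corpus entries, document IDs, and the `retrieve` helper are hypothetical placeholders. However well it ranks, a system like this can only point to text that already exists in its corpus, which mirrors the role the reviewers attribute to GPT‑5.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase the text and keep only alphanumeric word tokens.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a: Counter, b: Counter) -> float:
    # Cosine similarity between two bag-of-words count vectors.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, corpus: dict[str, str], top_k: int = 1) -> list[tuple[str, float]]:
    """Return the top_k corpus entries most similar to the query.

    Nothing new is derived: the output can only point at existing text.
    """
    q = Counter(tokenize(query))
    scored = [(doc_id, cosine_similarity(q, Counter(tokenize(text))))
              for doc_id, text in corpus.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical corpus standing in for already-published results.
corpus = {
    "paper-A": "every sufficiently dense set of integers contains arithmetic progressions",
    "paper-B": "bounds on the chromatic number of unit distance graphs in the plane",
    "paper-C": "sum-free subsets of finite abelian groups",
}

query = "does every dense set of integers contain long arithmetic progressions"
print(retrieve(query, corpus, top_k=2))
```

Scaling the corpus up and issuing thousands of queries raises throughput, but the ranker still returns only what the corpus already contains.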

Labor Market Shift: Automation, Security, and Reskilling

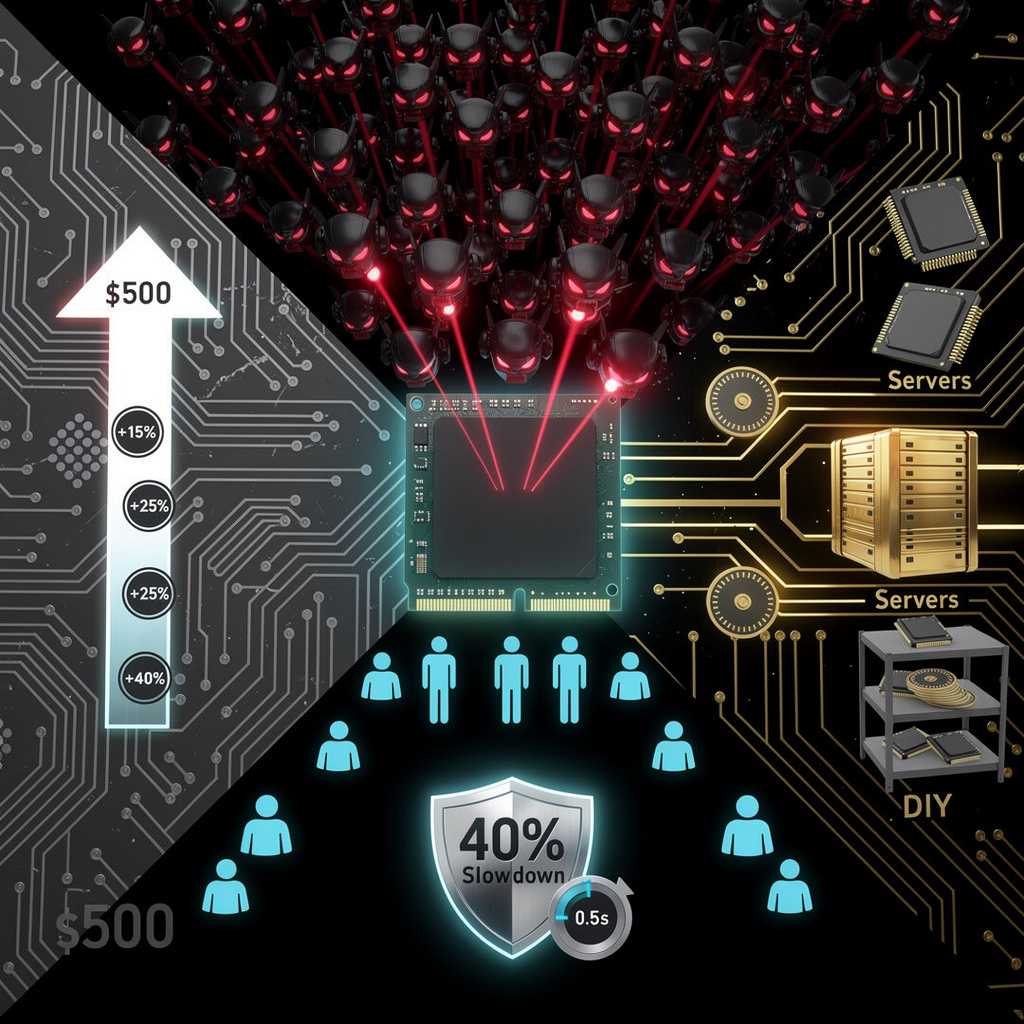

AI‑enabled automation is driving headline layoffs across consulting (Accenture, ~11 k), finance (Goldman Sachs, JPMorgan), and major cloud providers (Microsoft, Amazon, Google). The IMF identifies ~40 % of global jobs as AI‑exposed, and some projections put losses among entry‑level white‑collar roles as high as 50 % over 2025‑30.

At the same time, AI‑specific hiring is rising: “AI operations” job ads are up 230 % YoY, and firms report more than 150 k employees regularly using AI tools. Risk disclosures are mounting as well: 72 % of S&P 500 firms now disclose material AI‑related risks, and a 95 % failure rate for AI pilots has been reported (Gartner, 2025). The principal risk vectors include synthetic‑media generation (200+ incidents recorded by Microsoft in July 2025) and opaque credit‑underwriting models.

Emerging job categories (AI risk engineers, prompt‑safety auditors, synthetic‑media detection specialists) are expanding 30‑40 % YoY, offsetting some headcount reductions. Companies that pair disciplined reskilling programs with transparent AI‑risk reporting reportedly achieve a 3‑5 % lower cost of capital than their peers.
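Year‑over‑year percentages are easy to misread: an increase of 230 % means the new figure is 3.3 times the old one, not 2.3 times. The Python sketch below converts percentage increases into multipliers and compounds a constant growth rate. The base counts and the three‑year horizon are hypothetical, and holding the 30‑40 % rate constant is an illustrative assumption, not a forecast from the figures above.

```python
def multiplier(pct_increase: float) -> float:
    # An increase of pct_increase percent scales the base by (1 + pct/100).
    return 1.0 + pct_increase / 100.0


def compound(base: float, annual_pct: float, years: int) -> float:
    # Apply the same year-over-year growth rate for `years` consecutive years.
    return base * multiplier(annual_pct) ** years


# 230 % YoY growth in "AI operations" job ads: 3.3x the prior-year count.
ads_last_year = 1_000  # hypothetical base count
print(ads_last_year * multiplier(230))

# 30-40 % YoY growth sustained for a hypothetical three years.
roles_today = 1_000  # hypothetical base count
for rate in (30, 40):
    print(rate, round(compound(roles_today, rate, 3)))
```

Sustained for three years, 30‑40 % annual growth would multiply a category's headcount by roughly 2.2x to 2.7x, which shows how quickly small emerging roles could scale if the rate held.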

AI‑Focused Data‑Center Buildouts as GDP Engine

Private financing (more than $70 bn) and corporate‑backed nuclear power are driving a cumulative > 10 GW of AI‑ready capacity. Meta’s $30 bn Louisiana campus (5 GW) and Amazon’s 960 MW nuclear‑powered facility exemplify the energy‑anchored model. Hardware commitments (AMD Instinct GPUs, Broadcom custom silicon) lock in $300 bn of spend through 2029.

These investments contributed 0.9 pp to Q4 2025 GDP growth, and the AI sector’s total impact reached 4 % QoQ. Construction activity generated 439 k jobs, but skilled‑trade shortages (2 M unfilled positions) are inflating labor costs by 20‑30 %.

Energy demand is rising: AI workloads now consume 4‑6 kW per rack, pushing projected data‑center electricity share to 12‑14 % of national consumption by 2028‑2030. Grid stress, water‑intensive cooling, and emerging regulatory scrutiny (EU AI Act) constitute the primary risk vectors.
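Capacity figures such as 10 GW or 960 MW measure power, whereas electricity‑share projections measure energy consumed over time. A minimal Python sketch of the conversion follows. The utilization factor is an assumption (no figure is given above), and real facilities rarely draw their full rated capacity around the clock.

```python
HOURS_PER_YEAR = 8760  # 365 days x 24 hours; leap years ignored


def annual_energy_twh(capacity_gw: float, utilization: float = 1.0) -> float:
    """Convert rated capacity (GW) into annual energy use (TWh).

    `utilization` is an assumed average fraction of rated capacity drawn.
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    # GW x hours = GWh; divide by 1,000 to express the result in TWh.
    return capacity_gw * HOURS_PER_YEAR * utilization / 1000.0


# Capacities from the text; 0.7 utilization is a hypothetical assumption.
sites = [("AI-ready buildout", 10.0), ("Meta Louisiana campus", 5.0), ("Amazon facility", 0.96)]
for label, gw in sites:
    print(label, round(annual_energy_twh(gw), 1), round(annual_energy_twh(gw, 0.7), 1))
```

At full utilization, 10 GW corresponds to about 87.6 TWh per year; at an assumed 70 % utilization, about 61 TWh. Comparing such totals with national consumption is what turns a capacity headline into an electricity‑share estimate.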

Consolidated Outlook

- LLMs excel at large‑scale retrieval, not autonomous theorem creation, so any claimed scientific breakthrough requires external validation.

- Automation‑driven labor reductions are partly offset by rising demand for AI‑risk and security specialists; transparent governance is associated with lower capital costs.

- Infrastructure expansion (AI data centers, AI‑PC hardware, OS‑level agents) fuels macroeconomic growth but introduces energy, water, and regulatory constraints that must be managed through policy and engineering innovation.
