HPE Slashes LLM Latency 1000×: 1TB Cache Loads in 120ms, Not Minutes

TL;DR

- HPE unveils Alletra Storage MP X10000 for AI workloads with KV cache and shared object storage

- Scale Computing Acquires Adaptiv Networks to Integrate SD-WAN/SASE into Managed Network Services

⚡ HPE MP X10000: 120ms KV-Cache Offload Cuts LLM Prefill From Minutes to Milliseconds

1 TB KV cache loads in 120ms, not 2+ minutes. HPE's new MP X10000 slashes LLM prefill latency 1000× by offloading KV cache from GPU memory to shared object storage. ~15% fewer memory-loading cycles, 27× faster TTFT. 🔥 The catch? Your AI factory now lives or dies on flash density, not just TFLOPs. Who's upgrading storage before buying more GPUs?

HPE's Alletra Storage MP X10000, unveiled February 19, 2026, targets a critical bottleneck in enterprise AI: the movement and scaling of key-value (KV) cache data that currently throttles large language model inference across distributed clusters.

How Does KV-Cache Offload Transform AI Storage Architecture?

The platform repositions object storage as an active compute tier rather than a passive archive. Traditional deployments trap KV states (roughly 39 GB per million tokens of context, though the exact figure depends on model architecture and numeric precision) within local GPU memory or single-host NVMe. This creates a hard scaling ceiling: prefill latency on DGX-B200 servers exceeds two minutes for 100,000-token contexts, making real-time inference impractical.
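The 39 GB-per-million-tokens figure is model-specific. For standard multi-head or grouped-query attention, the cache stores one key and one value vector per layer per KV head, so its size can be estimated as in the sketch below. The model shape is a hypothetical example, not a configuration HPE cites; designs that compress the cache, such as multi-head latent attention, need a different formula.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelShape:
    """Hypothetical transformer shape used only for illustration."""
    num_layers: int
    num_kv_heads: int      # smaller than the query-head count under grouped-query attention
    head_dim: int
    bytes_per_value: int   # 2 for FP16/BF16, 1 for FP8


def kv_bytes_per_token(m: ModelShape) -> int:
    # Factor of 2: one key vector and one value vector per layer per KV head.
    return 2 * m.num_layers * m.num_kv_heads * m.head_dim * m.bytes_per_value


def kv_cache_gb(m: ModelShape, tokens: int) -> float:
    # Decimal gigabytes, matching how the article quotes capacities.
    return kv_bytes_per_token(m) * tokens / 1e9


if __name__ == "__main__":
    shape = ModelShape(num_layers=32, num_kv_heads=8, head_dim=128, bytes_per_value=2)
    print(kv_bytes_per_token(shape))              # 131072 bytes per token
    print(round(kv_cache_gb(shape, 100_000), 1))  # 13.1 GB for a 100,000-token context
```

By comparison, the article's 39 GB per million tokens works out to about 39 KB per token, or roughly 3.9 GB for a 100,000-token context. That implies a smaller model, lower precision, or a compressed cache relative to this FP16 example.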

HPE's architecture introduces a shared KV-cache accelerator between GPU DRAM and persistent storage. NVIDIA's Dynamo extensions and RAG frameworks enable direct offload, cutting average load times to approximately 120 milliseconds post-initial compute. Solidigm's 30–60 TB eSSD drives, scaling to 122 TB, provide the QLC flash density required to host multi-terabyte caches without breaching rack power budgets.
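The tiering described above amounts to a lookup order. KV blocks are served from GPU memory when resident, otherwise fetched from the shared store, and prefill is recomputed only on a full miss. The following is a minimal sketch, assuming an LRU GPU tier and a plain dict standing in for the shared object store. `TieredKVCache` and `compute_prefill` are hypothetical names, not NVIDIA Dynamo or HPE APIs.

```python
from collections import OrderedDict
from typing import Callable, Dict, Hashable


class TieredKVCache:
    """Hypothetical two-tier KV cache: a small LRU 'GPU' tier over a shared store."""

    def __init__(self, gpu_capacity: int, shared_store: Dict[Hashable, bytes],
                 compute_prefill: Callable[[Hashable], bytes]):
        self.gpu_capacity = gpu_capacity
        self.gpu: "OrderedDict[Hashable, bytes]" = OrderedDict()
        self.shared = shared_store            # stand-in for an object-storage client
        self.compute_prefill = compute_prefill
        self.stats = {"gpu_hit": 0, "shared_hit": 0, "recompute": 0}

    def _put_gpu(self, key: Hashable, value: bytes) -> None:
        self.gpu[key] = value
        self.gpu.move_to_end(key)
        if len(self.gpu) > self.gpu_capacity:
            # Safe to drop: every block was already written through to the shared tier.
            self.gpu.popitem(last=False)

    def get(self, key: Hashable) -> bytes:
        if key in self.gpu:                   # fastest path: already in GPU memory
            self.gpu.move_to_end(key)
            self.stats["gpu_hit"] += 1
            return self.gpu[key]
        if key in self.shared:                # offloaded earlier, by this node or another
            self.stats["shared_hit"] += 1
            value = self.shared[key]
        else:                                 # full miss: pay the prefill cost once
            self.stats["recompute"] += 1
            value = self.compute_prefill(key)
            self.shared[key] = value          # write-through so others can reuse it
        self._put_gpu(key, value)
        return value
```

Writing newly computed blocks through to the shared tier immediately means GPU eviction never discards work, and any node pointed at the same store can reuse those blocks.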

What Performance Gains Does Quantified Data Reveal?

- Latency: KV-cache offload reduces token retrieval from minutes to sub-second levels, with 27× faster time-to-first-token demonstrated in Solidigm-validated configurations

- GPU efficiency: ~15% reduction in CPU-memory loading cycles, translating to measurable tokens-per-watt improvements

- Scalability: Shared object semantics eliminate per-node KV replication, enabling horizontal cluster expansion without data movement penalties
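Shared object semantics only remove replication if every node derives the same object key for the same context. One common approach, similar in spirit to the block hashing vLLM describes for automatic prefix caching, is to hash each fixed-size token block together with the hash of everything before it. The source does not say how the MP X10000 or Dynamo key their objects. The block size, SHA-256, and `prefix_block_keys` are assumptions for illustration.

```python
import hashlib
from typing import List, Sequence


def prefix_block_keys(token_ids: Sequence[int], block_size: int = 16) -> List[str]:
    """Chain-hash full token blocks; each key identifies the entire prefix up to that block."""
    keys: List[str] = []
    parent = b""                                   # digest of all preceding blocks
    full_len = len(token_ids) - len(token_ids) % block_size
    for start in range(0, full_len, block_size):   # a trailing partial block is not cached
        block = token_ids[start:start + block_size]
        h = hashlib.sha256(parent)
        h.update(",".join(map(str, block)).encode())
        parent = h.digest()
        keys.append(h.hexdigest())
    return keys


if __name__ == "__main__":
    a = list(range(40))
    b = list(range(32)) + [999] * 8                # same first 32 tokens, different tail
    ka, kb = prefix_block_keys(a, 8), prefix_block_keys(b, 8)
    print(ka[:4] == kb[:4], ka[4] == kb[4])        # True False
```

Because the first four keys match, those blocks are stored once and reused by any node serving either request. Only the diverging fifth block needs its own object.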

Where Do Technical Responses and Gaps Remain?

| Strength | Vulnerability |
|---|---|
| NVMe-oF backbone delivers ~1 TB/s aggregate bandwidth per gigawatt-class AI deployment | KV-cache consistency across distributed nodes requires versioned snapshots and atomic commit protocols |
| Global deduplication reduces storage footprint for repetitive inference patterns | Proprietary extensions (NVIDIA Dynamo, OpenAI KV-API) risk vendor lock-in without open-source alternatives |
| Liquid-cooling compatibility addresses thermal density of high-capacity SSDs | Enterprise adoption hinges on LINPACK-style benchmark validation currently pending |
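The consistency requirement in the table can be illustrated with a versioned-manifest pattern. Writers upload immutable block objects first, then publish a new manifest version only if nobody else committed since their last read. Readers therefore never see a version that points at missing blocks. This is a minimal sketch, assuming an in-memory store with compare-and-swap semantics. `ManifestStore` and `VersionConflict` are hypothetical names, and the source does not describe HPE's actual protocol.

```python
import threading
from dataclasses import dataclass, field
from typing import Dict, Tuple

Manifest = Tuple[int, Tuple[str, ...]]  # (version, ordered block keys)


class VersionConflict(Exception):
    """Raised when another writer advanced the manifest first."""


@dataclass
class ManifestStore:
    """In-memory stand-in for an object store that supports conditional puts."""
    objects: Dict[str, bytes] = field(default_factory=dict)
    manifests: Dict[str, Manifest] = field(default_factory=dict)
    _lock: threading.Lock = field(default_factory=threading.Lock, repr=False, compare=False)

    def read(self, session: str) -> Manifest:
        with self._lock:
            return self.manifests.get(session, (0, ()))

    def commit(self, session: str, expected_version: int, new_blocks: Dict[str, bytes]) -> int:
        # Step 1: upload immutable, content-addressed block objects. If the commit
        # below fails, these become unreferenced orphans a GC pass can reclaim.
        for key, data in new_blocks.items():
            self.objects.setdefault(key, data)
        # Step 2: advance the manifest only if nobody else committed since our read.
        with self._lock:
            version, keys = self.manifests.get(session, (0, ()))
            if version != expected_version:
                raise VersionConflict(
                    f"{session}: expected v{expected_version}, found v{version}")
            self.manifests[session] = (version + 1, keys + tuple(new_blocks))
            return version + 1
```

A writer that hits `VersionConflict` re-reads the manifest and retries. Each committed version then acts as a consistent snapshot that other nodes can load.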

What Does the Adoption Timeline Project?

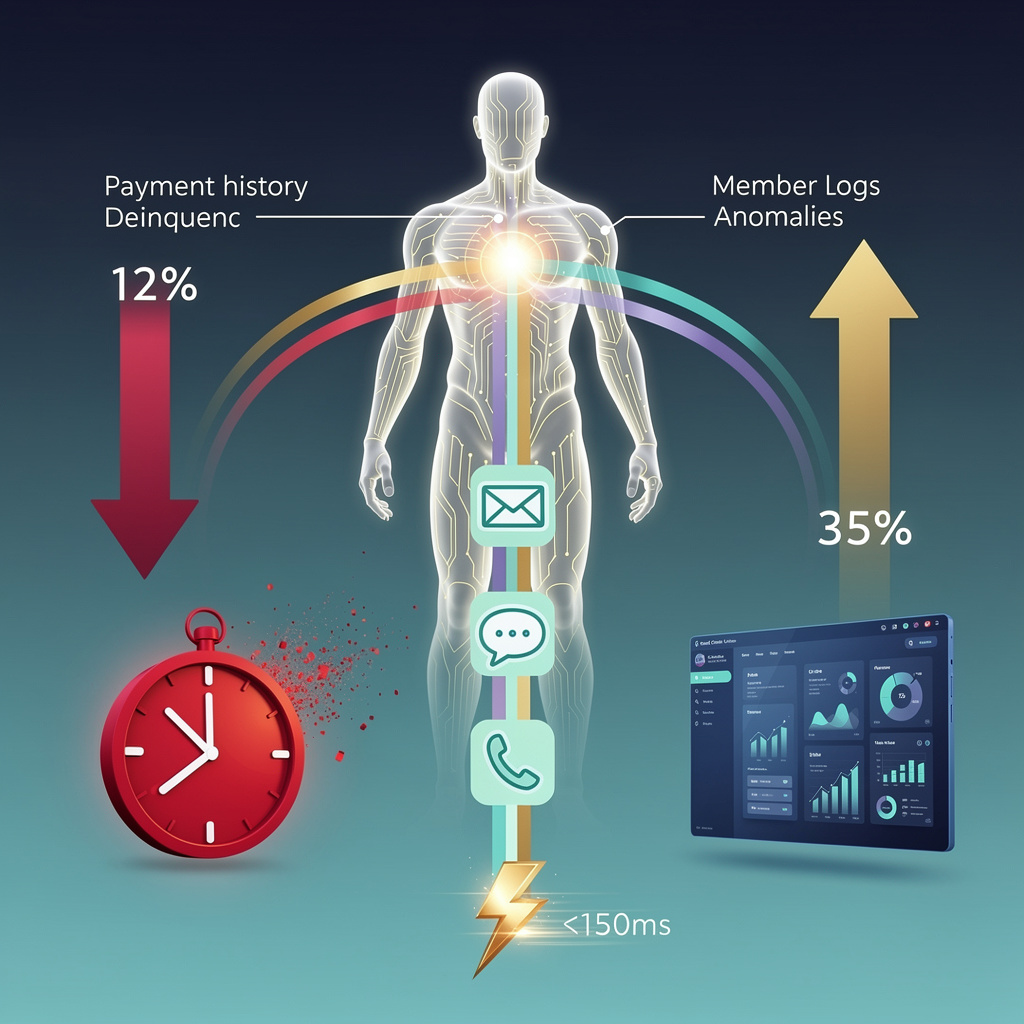

Q3–Q4 2026: ~12% capture of new AI-focused storage contracts as enterprises pivot from compute-heavy budgets toward data-movement optimization; HPCG and AI-Perf benchmarks expected to show 1.5–2× latency reductions for KV-heavy workloads

2027–2028: Integration with exascale system roadmaps as storage bandwidth requirements scale proportionally with >1 exaFLOPS AI compute targets; convergence toward standardized KV-offload APIs

2029–2031: Potential emergence of storage-native interfaces as foundational layer for exascale inference tiers, with QLC-based shared object stores becoming default architecture

The MP X10000 signals a structural shift in HPC infrastructure design: storage latency, not processor count, increasingly governs AI system economics. For hyperscalers and research labs managing billion-token inference services, this rebalancing of the memory hierarchy—from GPU-centric to storage-distributed—offers a measurable path to sustainable scaling.

🔒 Scale Computing Absorbs Adaptiv: 9,000 Customers Face Single-Vendor Network Stack

9,000 enterprises just got their network & security fused into one platform. Scale Computing's Adaptiv acquisition cuts VMware costs and drops WAN latency 13%—but at what cost to flexibility? North American IT leaders: are you ready to trade legacy MPLS for a hyper-converged lock-in?

Scale Computing finalized its purchase of Adaptiv Networks on February 19, 2026, embedding SD-WAN and SASE capabilities directly into its SC//AcuVigil platform. The move targets 9,000+ enterprise customers across the United States and Canada who face mounting pressure from VMware licensing costs and fragmented network security stacks.

How Does the Technical Integration Work?

The combined architecture unifies three previously separate domains. SC//AcuVigil serves as the central management plane, now extended to orchestrate network policies through REST and GraphQL APIs. Adaptiv's SD-WAN engine provides dynamic path selection across MPLS, broadband, and LTE links with sub-10-millisecond latency for traffic steering. The SASE framework delivers Zero-Trust Network Access, Cloud-Web-Security, and Firewall-as-a-Service with 20 Gbps TLS-inspection capacity per appliance.
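Dynamic path selection means choosing, per traffic class, which underlay link to use based on live measurements. The source does not describe Adaptiv's steering algorithm, so this minimal sketch assumes a simple policy: pick the cheapest link that meets latency and loss thresholds, and fall back to the lowest-latency link if none qualifies. `LinkStats`, `select_path`, and all metric and cost values are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class LinkStats:
    name: str           # e.g. "mpls", "broadband", "lte"
    latency_ms: float   # latest probe measurement
    loss_pct: float
    cost_per_gb: float


def select_path(links: List[LinkStats], max_latency_ms: float,
                max_loss_pct: float) -> Optional[LinkStats]:
    """Cheapest link meeting the SLA; otherwise the lowest-latency link as a fallback."""
    eligible = [link for link in links
                if link.latency_ms <= max_latency_ms and link.loss_pct <= max_loss_pct]
    if eligible:
        return min(eligible, key=lambda link: (link.cost_per_gb, link.latency_ms))
    return min(links, key=lambda link: link.latency_ms) if links else None


if __name__ == "__main__":
    links = [
        LinkStats("mpls", 18.0, 0.0, 0.50),
        LinkStats("broadband", 24.0, 0.2, 0.05),
        LinkStats("lte", 55.0, 1.0, 2.00),
    ]
    print(select_path(links, 30.0, 0.5).name)  # broadband
    print(select_path(links, 20.0, 0.5).name)  # mpls
```

In a real SD-WAN edge this decision would be re-run as probe results change. The article's sub-10-millisecond figure refers to how quickly such steering reacts.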

A jointly developed Edge Connector—requiring just 2 vCPUs and 4 GB RAM—terminates SD-WAN tunnels and enforces security policies locally on Scale's hyper-converged nodes. Control-plane communication flows through secure gRPC channels, while telemetry feeds into Scale's existing Prometheus and Grafana monitoring stack for real-time SLA verification.
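Real-time SLA verification on this telemetry typically reduces to comparing a latency percentile per site against a target. In Prometheus this would usually be a `histogram_quantile` query over a latency histogram. The following is a plain-Python sketch of the same check on raw samples, using the nearest-rank percentile. The function names, site labels, and 95th-percentile target are assumptions, not Scale's configuration.

```python
import math
from typing import Dict, Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile, with pct in (0, 100]."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)   # multiply first to avoid float drift
    return ordered[max(rank, 1) - 1]


def check_sla(latency_ms_by_site: Dict[str, Sequence[float]],
              p95_target_ms: float) -> Dict[str, bool]:
    """True for each site whose 95th-percentile latency is within the target."""
    return {site: percentile(samples, 95) <= p95_target_ms
            for site, samples in latency_ms_by_site.items()}


if __name__ == "__main__":
    data = {"a": [10.0] * 95 + [200.0] * 5, "b": [10.0] * 90 + [200.0] * 10}
    print(check_sla(data, 50.0))  # {'a': True, 'b': False}
```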

What Are the Measurable Impacts?

Performance: Pilot deployments across 200 sites averaging 150 GB daily traffic demonstrate 13% WAN latency reduction and 25% faster firewall rule processing.

Cost Structure: Consolidated licensing eliminates separate network and security contracts, and cost pressure from VMware licensing at VM scale eases through the integrated infrastructure.

Security Posture: Continuous compliance reporting replaces periodic audits; threat intelligence feeds integrate directly at edge nodes.

Scalability: Architecture supports 10 Gbps per node and hierarchical hub-spoke topologies exceeding 5,000 sites.

Market Position: Immediate cross-sell opportunity to 30% of existing customers projects $45 million ARR uplift by 2027-2028.
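Two of the figures above can be sanity-checked with back-of-envelope arithmetic. A full mesh of n sites needs n(n-1)/2 tunnels, which is why topologies beyond 5,000 sites go hierarchical. The projected ARR uplift also implies a per-customer figure. The hub count and two uplinks per spoke are hypothetical parameters, and the ARR calculation assumes the 9,000-customer base is the denominator for the 30% figure.

```python
def full_mesh_tunnels(sites: int) -> int:
    # Every pair of sites gets its own tunnel.
    return sites * (sites - 1) // 2


def hub_spoke_tunnels(spokes: int, hubs: int, uplinks_per_spoke: int = 2) -> int:
    # Each spoke peers with a few hubs for redundancy; hubs are fully meshed.
    return spokes * uplinks_per_spoke + full_mesh_tunnels(hubs)


def arr_per_customer(arr_uplift: float, customers: int, share: float) -> float:
    # Average annual revenue needed from each converting customer.
    return arr_uplift / (customers * share)


if __name__ == "__main__":
    print(full_mesh_tunnels(5000))                       # 12497500
    print(hub_spoke_tunnels(spokes=4980, hubs=20))       # 10150
    print(round(arr_per_customer(45e6, 9000, 0.30)))     # 16667
```

Under these assumptions, the $45 million target corresponds to roughly $16,700 of added annual revenue from each of about 2,700 converting customers.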

Where Do Risks and Responses Align?

| Dimension | Observed Gap | Mitigation Deployed |
|---|---|---|
| Migration complexity | Temporary over-provisioning risk | Phased rollout with sandbox environments and automated rollback |
| Resource contention | Edge connector competition on shared nodes | Resource reservation policies; optional dedicated NIC offload |
| API security | Expanded attack surface from gateway misconfiguration | Strict schema validation; mutual TLS for all control-plane traffic |
| Bandwidth saturation | High-density interconnect limits | Adaptive traffic shaping; InfiniBand fabric integration where available |
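The table does not say which shaping algorithm Scale uses. A token bucket is a standard primitive for this job: traffic is admitted at an average rate with bounded bursts, and a controller can retune the rate as utilization changes. This is a minimal sketch under that assumption. `TokenBucket` and the rates are hypothetical, and time is passed in explicitly (for example from `time.monotonic()`) to keep the logic deterministic.

```python
class TokenBucket:
    """Token-bucket shaper: average rate `rate_bps`, bursts up to `burst_bytes`."""

    def __init__(self, rate_bps: float, burst_bytes: float, now: float = 0.0):
        self.rate = rate_bps / 8          # bytes per second
        self.capacity = burst_bytes
        self.tokens = burst_bytes         # start full so an initial burst is allowed
        self.last = now

    def _refill(self, now: float) -> None:
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now

    def allow(self, packet_bytes: int, now: float) -> bool:
        """True means send now; False means queue or drop the packet."""
        self._refill(now)
        if packet_bytes <= self.tokens:
            self.tokens -= packet_bytes
            return True
        return False

    def set_rate(self, rate_bps: float, now: float) -> None:
        # The "adaptive" part: settle tokens earned at the old rate, then switch.
        self._refill(now)
        self.rate = rate_bps / 8
```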

What Does the Rollout Timeline Indicate?

Q1 2026: SD-WAN control plane integration into SC//AcuVigil UI complete.

Q2 2026: 200-site pilot cohort operational; early telemetry validates latency and processing improvements.

Q3-Q4 2026: Full customer base deployment; SC//AcuVigil 2.0 releases with native SASE policy templates.

2027-2028: European expansion into UK and German markets; subscription upgrade revenue materializes.

Does This Position Scale for What's Next?

The network transformation market, projected to reach $60.25 billion by 2033 at 13.2% annual growth, reflects enterprise demand for exactly this consolidation. With 82.9% of organizations reporting measurable latency and threat-detection improvements after deploying SASE, and 44% of mission-critical infrastructure nearing end-of-life, the replacement window aligns with Scale's timing.

More critically, data-center networking revenues hit $46 billion in 2025 and are projected to exceed $103 billion by 2030, driven by AI-enhanced traffic engineering. Scale's integrated stack prepares its 9,000-customer base for workloads demanding ultra-low latency and secure edge processing, positioning the company not merely as an infrastructure vendor but as a full-stack hybrid-IT provider against competitors still relying on third-party networking partnerships.
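The two market projections can be related through compound annual growth rate (CAGR). The $46 billion to $103 billion data-center networking figures imply roughly 17.5% annual growth over 2025 to 2030. The article gives no base year for the 13.2% network-transformation projection. Assuming 2025 purely for illustration, that projection implies a market of about $22 billion today.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    # Constant annual rate that turns start_value into end_value over `years`.
    return (end_value / start_value) ** (1 / years) - 1


def value_at(end_value: float, rate: float, years_before_end: float) -> float:
    # Discount a projected end value back at a constant annual growth rate.
    return end_value / (1 + rate) ** years_before_end


if __name__ == "__main__":
    print(f"{cagr(46, 103, 5):.1%}")               # 17.5%
    # Assumed base year 2025, i.e. 8 years before 2033 (not stated in the source).
    print(f"{value_at(60.25, 0.132, 8):.1f}")      # 22.3
```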

In Other News

- AMD announces Ryzen 10000 series 'Olympic Ridge' with Zen 6 architecture, facing Intel's Nova Lake with SKUs of up to 52 cores

- Microsoft’s Windows 11 26H1 release is hardware-gated, limited to Snapdragon X2 and Arm64 devices with Bromine platform baseline

- U.S. data center boom drives 2.3 GW power infrastructure buildout with $500M in turbine warehouse financing

- Taalas unveils custom silicon platform achieving 10x lower power and $100M cost savings vs. software-based LLM inference
