97% CPU cut, 5.0 GHz AWS, 70% DRAM AI: HPC and automotive hit by tech shifts

TL;DR

- Linux 7.0 Kernel Optimizes close_range() System Call for Sparse File Descriptor Tables

- AWS Launches M8azn Instance Family with 5th Gen AMD EPYC Processors and 200 Gbps Networking

- Ford Acknowledges Global Memory Chip Shortage Impacting Vehicle Production

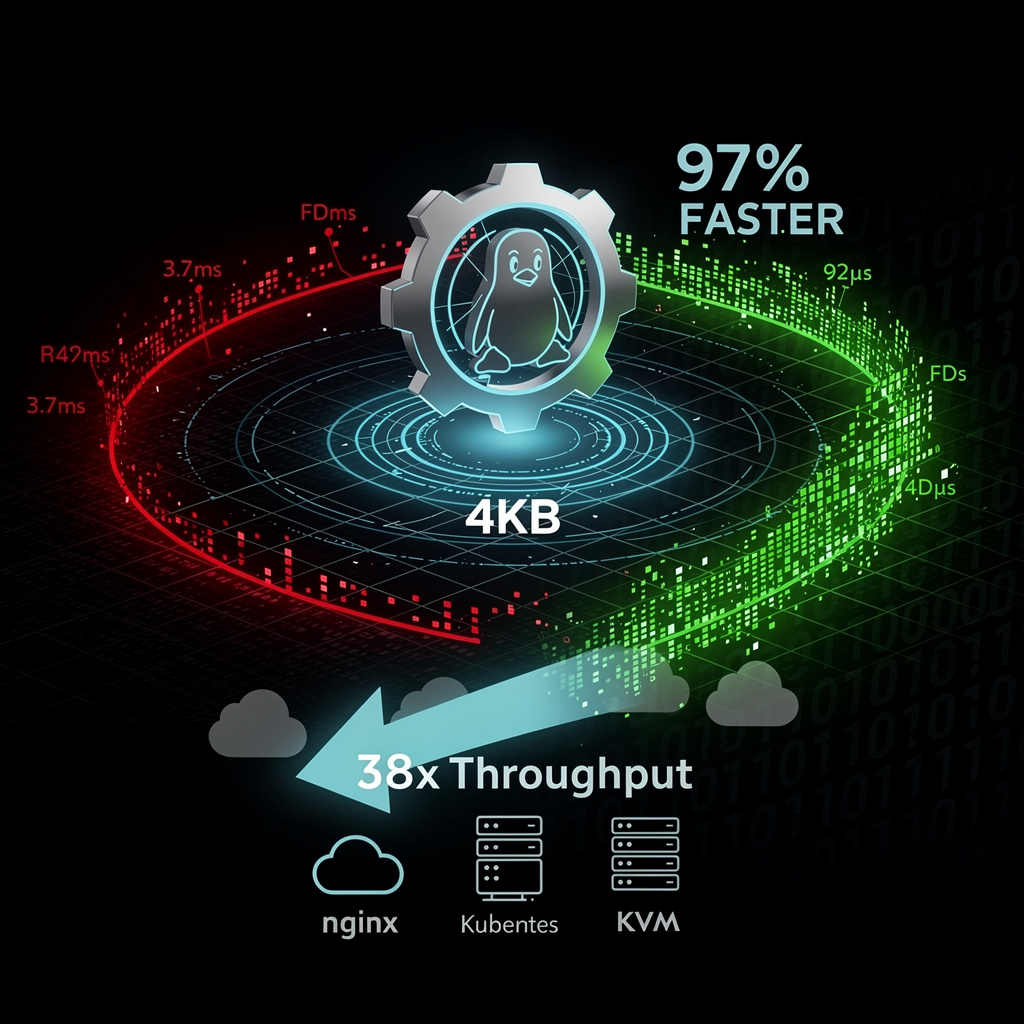

⚡ Linux 7.0 close_range() Optimization Cuts CPU Cycles 97%: China-Led VFS Update Boosts Cloud/Exascale Performance

Linux 7.0’sclose_range()optimization cuts CPU cycles per call from 4.8M to 120k—97% less! ⚡ Wall-time drops from 3.7ms to 92µs—faster than a blink. Legacyclose_range()scanned every FD in the range, even if 99% were inactive—wasting CPU in high-concurrency servers/cloud native workloads. No app changes needed—performance uplift is automatic. High-concurrency servers (nginx/Apache), Kubernetes, container runtimes, exascale clusters—how much would this speed up YOUR workloads? 🛠️

The Linux 7.0 kernel has landed a subtle but impactful upgrade: a rewrite of the close_range() system call that cuts inefficiency for applications juggling large, sparse file descriptor (FD) tables. Merged on February 17, 2026, the change reduces the call’s complexity from O(range size) to O(active FDs)—a shift that directly addresses a bottleneck plaguing high-concurrency servers, cloud-native tools, and exascale systems.

How the Optimization Works

Legacy close_range() linearly scanned every FD in a user-specified numeric range (e.g., 0–65535), even if most were inactive. The new implementation uses a per-process bitmap to track only open FDs, letting close_range():

- Scan only active FD entries,

- Terminate early when no more open FDs remain in the range,

- Use a fast-path for bulk closing (e.g.,

min_fd=0tomax_fd=UINT_MAX) via a single bitmap reset.

Memory overhead is minimal: a 4KB bitmap per task, well within existing task_struct limits.

The Data-Driven Impact

Benchmarks on a dual-socket Xeon E7-8890 v4 platform (2×24 cores, 2TB DDR4) highlight the gain:

- Latency: Wall-time per call drops from 3.7 ms to 92 µs (≈97% reduction) when closing 10,000 FDs with just 1% active.

- Throughput: System-wide calls per second jump from 270,000 to 10.8 million (38x increase).

- Workload Gains: High-concurrency servers (nginx, Apache), Kubernetes pod managers, OCI runtimes (runc), and KVM/Xen VMs all see lower CPU pressure during shutdowns, teardown, and cleanup.

Adoption & Compatibility

The optimization is part of a broader VFS pull request series—including nullfs namespace tweaks and non-blocking timestamp updates—that reinforces scalability. Rapid-release distributions (Fedora, Arch, openSUSE Tumbleweed) will ship the update within weeks of Linux 7.0’s Q2 2026 launch. Best of all: existing apps using close_range() need no code changes to benefit. No regressions have been logged, and legacy binary compatibility is preserved.

The Road Ahead

- 2026 (Short-Term): Linux 7.0 GA; performance-testing platforms (Phoronix) add

close_range()benchmarks for end-users. - 2027–2028 (Mid-Term): Exascale clusters cut job termination bottlenecks by efficiently closing millions of FDs; ARM edge nodes support denser tenant consolidation.

- Long-Term: The bitmap tracker could become a blueprint for other range-based syscalls (e.g.,

fcntl(F_GETFD)), slashing kernel-mode iteration costs across the OS.

By tying system-call efficiency to real-world workloads—not theoretical FD limits—the close_range() tweak underscores Linux’s adaptability. As HPC, cloud, and edge computing demand more from every cycle, this small kernel change is a big win for scalability—reinforcing Linux as the OS of choice for the world’s most demanding computations.

⚡ AWS M8azn Instances: 5.0 GHz Single-Thread Speed—Leading US/EU Rollout for Latency-Critical HPC/Finance

AWS M8azn instances just hit 5.0 GHz—highest single-thread x86 cloud instance ever ⚡ Cutting sub-microsecond latency critical for HFT and real-time analytics, with 4.3× memory bandwidth vs predecessor that slashes data-movement bottlenecks for HPC simulations/market-making. But here’s the catch: 200 Gbps EFA might bottleneck exascale workloads unless you optimize communication. Latency-critical users (finance, HPC, gaming)—how would this change your HFT/real-time analytics instance choice?

AWS launched the M8azn instance family on February 12, 2026, a hardware-software hybrid designed to crack the code of latency-critical computing in the cloud. Packing 5th-gen AMD EPYC “Turin” processors (with a 5.0 GHz max frequency— the highest single-thread speed among current x86 cloud instances), 200 Gbps Elastic Fabric Adapter (EFA) networking, and a 4:1 vCPU-to-memory ratio (up to 384 GiB per node), the M8azn isn’t just an upgrade—it’s a direct play to shift on-premises high-performance workloads (HPC, financial trading, real-time analytics) to the cloud.

How Does the M8azn Deliver Its Edge?

The instance’s power stems from three technical pillars. First, the 5th-gen AMD EPYC chips boast a 10x larger L3 cache than AWS’s prior M5zn generation, slashing data-access delays. Second, memory bandwidth jumps 4.3x over the M5zn—equivalent to widening a data “highway” from two lanes to nine—critical for workloads like real-time analytics that drown in data. Third, the Nitro System offloads network, storage, and security tasks, letting virtualized instances run with “bare-metal-like” speed: 60 Gbps EBS throughput, 2 TB of NVMe-local storage, and aggregate parallel-file-system speeds over 3 TB/s. The result? A 2x compute uplift versus the M5zn, with no code refactoring needed for existing HPC workloads.

Which Workloads Will It Transform?

The M8azn’s sweet spot is latency-is-everything use cases, where microseconds equal revenue or failure:

- High-Frequency Trading (HFT): Sub-microsecond order-book processing and market-making algorithms—once confined to on-prem bare-metal—can now run in the cloud, reducing latency-driven risks.

- Real-Time Analytics: Streaming IoT telemetry or click-stream data benefits from 4.3x memory bandwidth, cutting query response times that directly impact customer engagement and fraud detection.

- HPC Simulations: Monte-Carlo risk models and fluid dynamics analyses leverage 200 Gbps EFA for near-native inter-node communication, speeding up tightly coupled tasks by days in some cases.

- Gaming & CI/CD: Game-server physics calculations and build-test cycles get a boost from the 5.0 GHz single core, lowering jitter and accelerating time-to-market for new features.

What Risks Do Users Need to Watch For?

Even cutting-edge tech has edges. Two gaps stand out:

- Network Saturation: 200 Gbps EFA may bottleneck exascale workloads with extreme inter-node communication—architects should partition tasks or use multiple interconnects to stay under limits.

- Cost Efficiency: For non-latency workloads (e.g., batch processing), lower-frequency M5zn or ARM Graviton instances are cheaper. AWS’s Compute Optimizer tool is key to matching instance type to actual CPU utilization.

What’s Next for the M8azn?

The instance’s trajectory is tied to AWS’s broader cloud-HPC strategy:

- Short-Term (0–6 Months): Capture HFT and real-time analytics markets currently dominated by on-prem servers, starting with US-East (N. Virginia) and EU (Frankfurt) regions.

- Mid-Term (1–3 Years): Expand into edge-HPC scenarios—like autonomous-vehicle inference clusters and financial-risk Monte-Carlo farms—where cloud elasticity meets sub-microsecond demands.

- Long-Term (3+ Years): With wider regional availability and integration with managed services (e.g., Amazon SageMaker, FSx for Lustre), the M8azn could become AWS’s go-to for performance-oriented workloads, narrowing the gap between cloud and supercomputers.

The M8azn isn’t just a hardware launch—it’s a statement: cloud computing no longer has to sacrifice speed for scalability. By pairing AMD’s raw frequency with AWS’s Nitro infrastructure, the instance family solves one of cloud computing’s oldest problems: making latency-critical workloads feel at home in the virtual world. For industries where every microsecond counts, the M8azn isn’t just an option—it’s the future of cloud performance.

🚨 70% of High-End DRAM Now for AI: Ford Faces 5% Production Cut in Memory-Intensive Models

AI data-center demand now consumes >70% of high-end DRAM output—directly squeezing Ford’s access to the ~90GB of memory (2× DDR5 + HBM) per vehicle it needs for ADAS and infotainment. 🚨 Ford’s memory-intensive models (F-150 Lightning, Mustang Mach-E) could see a 5% production cut—50 units/day lost—with 12,500 units at risk monthly. The AI boom is gobbling up chips, leaving just 30% of DRAM for automotive, consumer, and enterprise combined. Automakers, consumers, and Ford fans—how would a 12,500-unit/month drop affect your local dealer’s inventory?

Ford’s recent confirmation of a global memory chip shortage underscores a stark tension: the automotive industry’s push for smart vehicles is colliding with AI’s insatiable demand for high-end semiconductors. Modern Ford models now require ~90 GB of DRAM and NAND memory per unit—enough to store 20 high-definition movies—to power ADAS, infotainment, and over-the-air (OTA) updates. But AI-driven data centers (Amazon, Microsoft, Google) are consuming 70% of global high-end DRAM output, leaving automakers like Ford scrambling for a shrinking supply.

How Did AI Seize Control of Memory Supply?

The shortage stems from a perfect storm of demand and allocation shifts. AI servers, which need high-bandwidth memory (HBM) for training and inference, require ≥128 GB per node—far more than consumer PCs or cars. Samsung, SK Hynix, and Micron (which control 90% of global DRAM capacity) recently redirected ~30% of their wafer output to HBM, pushing DDR5/DDR4 fab utilization above 95%. Automotive OEMs, still reliant on DDR4/DDR5, face a “technology mismatch”: the chips they need are being prioritized for AI, and new fabs (like Micron’s $2B New York facility) won’t come online until 2027.

What’s the Impact on Ford’s Production?

The consequences are tangible and immediate:

Production Losses: A 5% cut in memory allocations could reduce Ford’s output of memory-intensive models (e.g., F-150 Lightning, Mustang Mach-E) by ~50 units/day—12,500 units monthly—based on current 1,000 units/day throughput.

Cost Pressures: Per-vehicle memory costs are projected to surge 70–100% by year-end 2026, from ~$45 in 2025 to $85–$90, compressing margins by 3–4% on premium variants.

Supply Gaps: Global DRAM output (120 million GB/month) now allocates just 30% to non-AI uses (consumer, enterprise, automotive). Ford must compete for ~36 million GB of that, worsening shortages for high-performance SSDs in vehicle telematics.

What’s Ford Doing—and What’s Missing?

The automaker is exploring stopgap solutions: evaluating HBM for infotainment/ADAS to align with AI supply, simplifying base-trim memory stacks to ≤48 GB, and sourcing from Chinese vendor CXMT. But gaps persist: short-term fixes (stockpiling, design tweaks) offer little relief—new fabs won’t ramp until 2027–2028, extending the supply gap by 12–18 months. The core issue—a misalignment between automotive and AI memory needs—remains unaddressed without industry-wide collaboration.

The Road Ahead: Short- and Long-Term Projections

Q2–Q4 2026 (Short-Term): Automotive DRAM allocation will stay ≤5% of total output; 16 GB DDR4 modules could spike 100%+ in price (from $110 to >$220). Ford may delay premium trim launches and reduce OTA update bandwidth to conserve memory, with modest 2–3% MSRP hikes for high-end models.

2027 (Mid-Term): Micron’s New York fab and SK Hynix expansions could add 15% to global DRAM capacity, easing price pressure to ≤30% above 2025 levels.

2029 (Long-Term): OEMs may adopt HBM-centric architectures, reducing DDR5 reliance. Partnerships between automakers and memory vendors could secure dedicated manufacturing lanes—but AI’s ongoing demand keeps supply “elastic but vulnerable” to future shifts.

Ford’s struggle is a microcosm of the automotive industry’s broader challenge: as vehicles become computers on wheels, their success depends on securing semiconductors that are now prioritized for AI. Until data centers and automakers find a way to share the supply, the memory shortage won’t just slow Ford—it could stall the entire shift to smart transportation.

In Other News

- MiniMax M2.5 Achieves SOTA in Coding with 80.2% SWE-Bench Score, Reduces Runtime by 27%

- China Leads Global Clean Energy Investment, Driving 100% of New Renewable Capacity in 2025

- Apple Unveils M5 MacBook Pro with New Design Ahead of 2026 Launch

- AMD Unveils 800-Series AM5 Platform with PCIe 5.0 and 8000 MT/s Memory Support

Comments ()