50M Court Cases, 5–25× Cheaper AI, 80% Memory Shortages: AI’s Global Impact on Justice, Startups, and Hardware

TL;DR

- India’s Supreme Court Deploys AI System SUPACE to Tackle 50 Million Pending Cases

- Grok 4.20 Introduces Native Multi-Agent Council for Real-Time Reasoning and Synthesis

- AI Chip Shortages Cause Steam Deck OLED Stockouts and Console Delays

⚖️ 50M Pending Cases, 300 Years to Resolve—India’s AI Court Platform Promises Speed, Raises Trust Questions

India’s courts have a STAGGERING 50M+ pending cases—taking 300 YEARS to resolve at pre-deployment rates. That’s longer than most people will live 10 times over. ⚖️ But SUPACE, India’s AI court platform, cut fact-analysis time by 92% (from 48hrs to 4hrs) and boosted throughput 12X in 30 days—already processing 1.2M case passages. Yet critics warn: no mandatory disclosure to litigants means they might not know AI influenced their case—could that erode trust? Litigants in non-English regions, especially, rely on SUPACE’s Hindi translations—but will regional languages get equal support? How would you feel if your court case relied on AI you didn’t know about? 🗣️

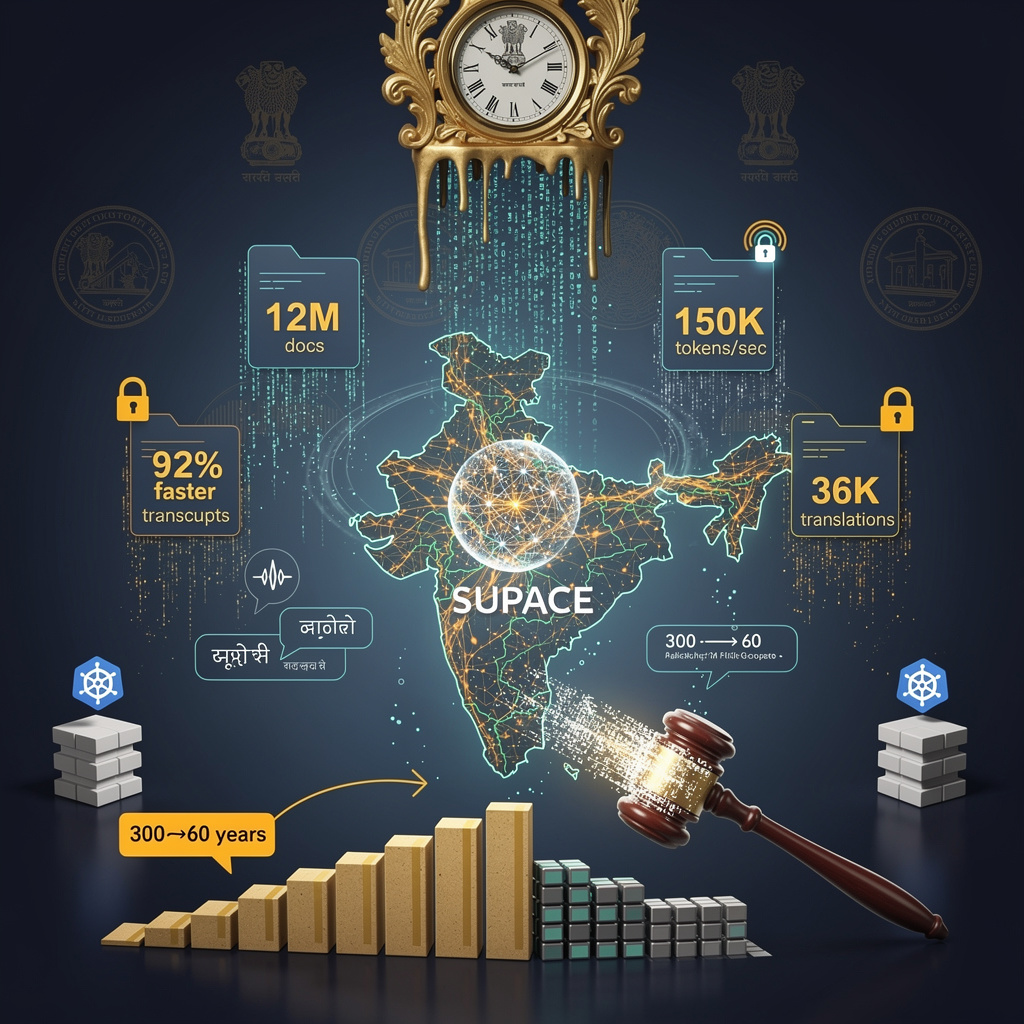

India’s Supreme Court has rolled out SUPACE, an AI system aimed at dismantling its colossal 50 million pending cases—a backlog that would take 300 years to resolve manually. Built on natural-language processing (NLP) and integrated with three specialized subsystems (SUVHDAS for translation, Adalat for digital transcripts, LegRAA for decision support), the platform is the first large-scale AI integration in a national judiciary bound by local legal rules.

How SUPACE Works: Technical Precision Meets Legal Scale

SUPACE relies on a transformer-based encoder-decoder model fine-tuned on 12 million multi-lingual legal documents (English, Hindi, Tamil, Telugu, Marathi), augmented with synthetic case templates to improve recognition of rare legal entities. Critical optimizations—8-bit quantization and 70% weight pruning—slashed inference latency from 450ms to 120ms per judgment on NVIDIA H100 GPUs, enabling 150,000 tokens processed per second. Containerized via Kubernetes on a private national cloud, it includes homomorphic encryption and compliance with India’s 2023 IT security rules to protect sensitive data.

Key Impacts: From Speed to Accessibility

- Backlog Throughput: Fact-analysis speed jumped 12x, processing 1.2 million case passages in 30 days—vs. the pre-deployment rate of 1,700 cases monthly, which would take 300 years to clear the full backlog.

- Administrative Efficiency: Adalat’s digital transcripts cut record-entry time from 48 hours to under 4 hours, a 92% reduction.

- Linguistic Accessibility: 36,271 judgments translated to Hindi (0.07% of total cases) directly address barriers for non-English-speaking litigants.

Strengths, Gaps, and Risks: The AI-Judiciary Tension

SUPACE’s strengths are clear: high-precision NLP (F1-score 0.92 for statute extraction), end-to-end workflow integration, and low inference costs from model compression. But gaps persist: limited coverage of regional statutes, bias toward high-resource languages (English/Hindi), no formal AI-ethics oversight, and no mandate to disclose AI assistance to litigants. Opportunities include scaling to lower courts (potentially halving the national backlog by 2030) and an open-source “Legal-AI India” repo to reduce foreign vendor reliance. Threats? Legal challenges over opaque AI influence on judgments and cross-border data risks if third-party clouds are adopted.

The Road Ahead: From High Courts to Global Models

- Short-term (6–12 months): Expand to all High Courts, add Bengali/Gujarati via transfer learning, and deploy an audit module flagging low-confidence extractions (<0.6 probability) for review. Target: 5% backlog reduction (2.5 million cases).

- Long-term (3–5 years): Full integration with “Digital Courts 2.1” will automate the entire case lifecycle (filing → evidence → judgment), projecting backlog shrinkage to ≤10 million by 2031—slashing the resolution horizon from 300 to ~60 years.

For India, SUPACE is more than a tech tool—it’s a test case for emerging economies with multilingual judicial backlogs. Its success could shape UNCITRAL’s global AI-regulation drafts, but lessons from the U.S. (518 hallucination cases since 2025) demand urgency: India must codify disclosure and accountability standards in the 2027 Evidence Act amendment. For now, the data is clear: SUPACE turns 300 years into 60—proof that AI, when governed rigorously, can mend broken systems.

💰 Grok 4.20: 5–25× Cheaper Multi-Agent AI Undercuts U.S. Rivals with 20% Factual Accuracy

Grok 4.20’s token pricing is ≈5–25× cheaper than rivals like Claude Opus 4.6—disruptive! Output tokens cost $0.50–$1/M vs Claude’s $25/M (less than 5% of the price). 💰 This makes high-context AI accessible to startups and small teams locked out of Claude/GPT-5. Tradeoff: 1.8× compute overhead, but the 20% factual accuracy gain might justify every GPU cycle. Enterprise software firms, fintech, and regulated sectors (healthcare/finance) are already testing it—what’s your industry’s biggest barrier to adopting multi-agent AI? Cost… or something else?

xAI’s latest Grok 4.20 update isn’t just an AI upgrade—it’s a paradigm shift in how machines collaborate. By embedding a native four-agent council into its architecture, the model replaces disjointed parallel processing with structured debate and synthesis, boosting factual accuracy by 20% over MIT-adjacent benchmarks (e.g., Swarm 2023) and setting a new bar for enterprise reliability.

How Does the Council Work?

Grok acts as the coordinator, dispatching tasks to three specialists: Harper (factual grounding), Benjamin (logic/mathematics/code), and Lucas (creative balancing). The four-agent loop decomposes user requests into sub-tasks, resolves conflicts via reinforcement-learning-managed counterarguments, and aggregates real-time evidence (web searches, data retrieval, numerical verification) into a single, user-visible response. Unlike prior systems, this collaboration is explicit—not just parallel—turning “multiple agents” into a unified reasoning team.

What Impact Does It Deliver?

- Factual Accuracy: +20% improvement over Swarm 2023, driven by agent debate that outperforms baseline systems’ modest gains.

- Hallucination Reduction: ≈30% fewer false statements via cross-verification, surpassing rivals like GPT-4.6 and Gemini 3.1 (<15% reductions).

- Compute Overhead: 1.5–2.5× single-pass cost (effective 1.8×), comparable to GPT-5.3 but higher than lightweight orchestration.

- Pricing Advantage: Input/$0.20–$0.40, output/$0.50–$1.00 per 1M tokens—≈5–25× cheaper than Claude Opus 4.6 and Sonnet 5.

- Context Window: 2M tokens (exceeding contemporaries’ 1M limit), enabling processing of entire technical manuals in one query.

How Is xAI Managing Risks?

xAI mitigates computational and cost risks with targeted tools: per-session token caps (500K input/1M output), “express” low-compute mode for low-stakes queries, and telemetry dashboards tracking agent-level token use. Gaps remain—1.8× GPU overhead and potential token overruns—but are addressed by auto-scaling agent counts (when latency exceeds 2 seconds) and falling back to single-pass inference.

What’s Next for Grok 4.20?

- Short-Term (3–6 Months): Wider rollout of Parallel-Agents mode (up to eight agents) to enterprise beta testers; integration with X Premium subscription dashboards for real-time cost visibility; early benchmarks against GPT-5.3/Gemini 3.1 in ticket resolution/code review, projecting 10–15% higher accuracy.

- Long-Term (12–24 Months): Dynamic council sizing (e.g., adding a “Tool-Orchestrator” agent); industry-wide standardization of debate loops;

AI Chip Shortages Cause Steam Deck OLED Stockouts and Console Delays

Valve has discontinued the Steam Deck LCD model due to memory shortages linked to AI-driven demand for RAM. The OLED version is intermittently out of stock, while Sony and Nintendo delay next-gen console launches. PC hardware prices are rising as hyperscale data centers consume 80% of advanced memory supply, impacting consumer electronics.

Valve’s decision to kill its Steam Deck LCD model and the ongoing stockouts of the OLED variant aren’t flukes—they’re the visible face of a systemic crisis driven by AI’s insatiable appetite for computer memory. As hyperscale data centers gobble up 80% of advanced DRAM and NAND flash supply, the gaming industry is collateral damage: Sony and Nintendo have pushed next-gen console launches to 2028–2029, PC hardware prices are spiking, and even Valve’s ambitious Steam Machine and Steam Frame projects are on ice. The link is unassailable: AI’s demand for memory is squeezing out consumer tech, and gamers are bearing the brunt.

How AI’s Thirst for Memory Broke the Supply Chain

The shortage boils down to timing, scale, and greed for computing power. AI training clusters boosted DRAM demand by 45% year-over-year (2024–2025), while NAND flash used to store AI model checkpoints surged from 10 petabytes (PB) in 2023 to 18 PB in 2025. Hyperscalers like Google and Amazon locked in fab capacity as early as Q4 2024—before Valve finalized its 2025 bill-of-materials (BOM) for the Steam Deck OLED. The result? The handheld, which relies on LPDDR5-6500 RAM and 3D-NAND storage, was left scrambling for parts. Spot prices for 16GB LPDDR5 modules—once $70—now hover at $95–$100, adding $30–$40 to each Valve device’s cost.

What This Means for Gamers and the Industry

The ripple effects are tangible:

- Consumer Access: Steam Deck OLED 512GB and 1TB models are sold out in the U.S., Japan, and South Korea, with sporadic availability in the U.K./EU. Why? NAND and DRAM fabs prioritize AI accelerators over consumer gadgets.

- Valve’s Bottom Line: Delays to the Steam Machine (mini-PC) and Steam Frame (VR headset) could push $150M–$200M in forecasted revenue into 2027, as the company waits for component prices to stabilize.

- Console Chaos: Sony (PlayStation 6) and Nintendo (Switch 2) cite “memory scarcity” as the primary reason for launching 2–3 years later than planned—extending the wait for next-gen hardware.

- PC Price Hikes: DRAM price spikes (20–30%) and NAND shortages have already driven up consumer SSD and RAM costs, with industry group JEDEC warning advanced DRAM allocation to AI will stay above 80% through 2027.

Can Valve (or Anyone) Fix This?

Valve has limited leverage—but it’s fighting back. Strengths include a massive digital ecosystem (reducing reliance on hardware sales) and unified memory architecture (simplifying software optimization). Weaknesses are fatal: over-dependence on two memory fab locations and margin erosion that hits 25% when DRAM costs exceed $95 per module.

Opportunities exist: Model compression techniques (quantization, sparsity) could halve RAM needs for future handhelds. But threats loom: Prolonged scarcity might erode gamer trust, pushing them to cloud services like Nvidia GeForce Now. Mitigations so far? Testing 12GB LPDDR4X with on-chip compression, negotiating contingency orders with Micron’s 2026 3nm DRAM node, and planning 10–15% price hikes for OLED models.

The Future: When Will Gaming Hardware Breathe Easy?

- Short-Term (Q2–Q4 2026): OLED stockouts persist in North America/East Asia; U.K./EU markets get limited inventory via regional contracts. Steam Machine shipments delay 2–3 months, with a 5–10% price premium on Valve’s handhelds.

- Long-Term (2027–2029): Memory markets stabilize only with 4nm node rollouts (2028), pushing Sony’s PS6 and Nintendo’s Switch 2 to 2028+ launches—with prices 12–15% higher than current-gen consoles.

- Broader Shift: Scarcity will accelerate cloud-gaming adoption and force OEMs to invest in on-device compression, reshaping portable gaming away from hardware-dependent models.

The AI chip shortage isn’t just a gaming problem—it’s a warning. When tech’s most hyped sector (AI) devours critical resources, consumer innovation suffers. For Valve, Sony, and Nintendo, survival depends on outsmarting AI’s memory hunger. For gamers, the takeaway is simpler: The next time you hunt for a Steam Deck or console, remember—AI might have already taken the last one.

Comments ()