User-Owned AI Agents Launch Amid 60% U.S. Adoption: Crypto.com’s $70M Bet on Privacy Contrasts With Apple’s CarPlay Restrictions

TL;DR

- Kris Marszalek buys AI.com domain for $70M, launches autonomous AI agent platform

- LanceDB emerges as optimized alternative to Iceberg/Delta Lake for random reads

- Apple to open CarPlay to third-party AI voice apps, but Siri keeps exclusive hands-free activation

🔒 $70M AI.com Acquisition: Crypto.com Launches Private AI Agents for 150M Users — U.S. Consumer Market Shifts

A $70M domain buy. 60% of U.S. adults already use AI assistants. Now Crypto.com has launched private, encrypted AI agents with a Super Bowl ad, and no one else offers end-to-end, user-owned agents yet. Millions of Crypto.com users are signing up in minutes. U.S. consumers: who controls your AI if it’s not you?

Kris Marszalek paid $70 million—more than double the previous record—for two letters and a dot. The price tag is not vanity; it is a calculated down-payment on a consumer AI stack that debuted during Super Bowl LX and promises private, user-owned agents in under 60 seconds.

How Fast Can a Crypto Exchange Spin Up Autonomous Agents?

Agent-generation latency is capped at 60 s, end-to-end encryption keys are minted client-side, and traffic peaked at 150 k concurrent sessions within 24 h. Marszalek’s team autoscales container pools on CDN edges, but post-game error logs show 4.2 % of requests still timed out—proof that crypto-grade uptime and AI-grade elasticity are not the same discipline.
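
To make the 4.2 % figure concrete, here is a minimal sketch of how an SLA-miss rate could be computed from request logs. It assumes a hypothetical `AgentRequest` record with a latency and a completion flag; Crypto.com has not published its log schema, and the sample data is synthetic.

```python
from dataclasses import dataclass


# Hypothetical request-log entry; field names are illustrative, not Crypto.com's schema.
@dataclass
class AgentRequest:
    latency_s: float  # seconds from request until the agent is ready
    completed: bool   # False if the edge pool dropped the request outright


SLA_SECONDS = 60.0  # the 60 s agent-generation cap quoted in the article


def timeout_rate(requests: list[AgentRequest], sla_s: float = SLA_SECONDS) -> float:
    """Fraction of requests that either failed or exceeded the SLA."""
    if not requests:
        return 0.0
    misses = sum(1 for r in requests if not r.completed or r.latency_s > sla_s)
    return misses / len(requests)


# Synthetic log: 1,000 requests, 42 of which blew the 60 s budget.
sample = [AgentRequest(12.0, True)] * 958 + [AgentRequest(75.0, True)] * 42
print(f"{timeout_rate(sample):.1%}")
```

Counting dropped requests and slow ones together is a design choice: from the user's side, both look like a failed sign-up.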

Will Decentralized Key Custody Pass U.S. Consumer-Protection Scrutiny?

The terms of service shift full liability onto the user. FTC precedent (the 2025 robo-advisor fines) says “user responsibility” clauses fail when agents act autonomously. Expect forced escrow of audit logs and a compliance OPEX bump of $2–3 M within 18 months.

Can 150 M Crypto.com Users Deliver 5 % of the U.S. AI-Assistant Market?

Conversion math: 0.5 % of 150 M equals 750 k premium seats; at roughly $10 M MRR (about $13 per seat per month), that recovers the domain cost in seven months of gross revenue. Medium-term forecast: a 5–7 % share requires 9 M active U.S. agents, a 12× jump. That scales to 450 k simultaneous inference pods; today’s footprint is 42 k. GPU burn alone will add $28 M in annual cloud spend unless the spot-instance share rises above 70 %.
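
The conversion math is easy to re-derive. The short check below uses only the figures quoted in this section. The per-seat price is an implied value, not one Crypto.com has published, and the payback figure counts gross revenue only, ignoring GPU and compliance costs.

```python
# Figures quoted in the article; only the ratios below are derived.
USERS = 150_000_000            # Crypto.com user base
MRR_USD = 10_000_000           # monthly recurring revenue from premium seats
DOMAIN_COST_USD = 70_000_000   # AI.com purchase price
TARGET_AGENTS = 9_000_000      # active U.S. agents needed for a 5-7 % share
PODS_TODAY, PODS_TARGET = 42_000, 450_000

seats = USERS // 200                         # 0.5 % conversion = 1 in 200 users
implied_price = MRR_USD / seats              # USD per seat per month (implied)
payback_months = DOMAIN_COST_USD / MRR_USD   # gross revenue only; ignores all costs
agent_growth = TARGET_AGENTS / seats         # multiple needed in active agents
pod_growth = PODS_TARGET / PODS_TODAY        # multiple needed in inference pods

print(f"{seats:,} seats at ~${implied_price:.2f}/seat/month")
print(f"payback: {payback_months:.0f} months of gross MRR")
print(f"agent growth {agent_growth:.0f}x, pod growth {pod_growth:.1f}x")
```

On these inputs the 450 k pod target is about 10.7 times today's 42 k footprint, a little below the 12× growth in agents, which suggests the forecast assumes some gain in agents per pod.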

Does Peer-to-Peer Knowledge Sharing Create a Regulatory Black Box?

Agents exchange distilled model deltas, not raw data, preserving isolation. Yet shared gradients can still encode PII—an open problem in federated learning. A single breach would trigger class-action exposure far beyond the $70 M headline.
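
Why can shared updates leak anything at all? The toy below illustrates a known result from the gradient-leakage literature. For a fully connected layer trained on a single example, the weight gradient is the outer product of the error and the input, so dividing it by the bias gradient returns the input exactly. This is a generic sketch, not Crypto.com's protocol, which the announcement does not describe. The weights and the "private" vector are made-up illustrative values.

```python
# Toy model: z = W x + b, loss L = 0.5 * sum((z - y)^2). Then
#   dL/db_i  = delta_i = z_i - y_i
#   dL/dW_ij = delta_i * x_j
# so x_j = (dL/dW_ij) / (dL/db_i) for any row i where delta_i != 0.


def forward(W, b, x):
    return [sum(w * xj for w, xj in zip(row, x)) + bi for row, bi in zip(W, b)]


def gradients(W, b, x, y):
    """Gradients a client might share after one training step on one example."""
    z = forward(W, b, x)
    delta = [zi - yi for zi, yi in zip(z, y)]
    grad_W = [[d * xj for xj in x] for d in delta]
    grad_b = list(delta)
    return grad_W, grad_b


def reconstruct_input(grad_W, grad_b):
    """Recover x from the shared gradients alone, with no access to raw data."""
    for row, d in zip(grad_W, grad_b):
        if abs(d) > 1e-12:
            return [g / d for g in row]
    raise ValueError("all bias gradients are zero; input not recoverable this way")


x_private = [3.0, 1.5, -2.0]  # stands in for an encoded user attribute
W = [[0.1, -0.2, 0.3], [0.0, 0.5, -0.1]]
b = [0.05, -0.1]
y = [1.0, 0.0]

gW, gb = gradients(W, b, x_private, y)
print(reconstruct_input(gW, gb))  # matches x_private up to float rounding
```

Batching, aggregation, and distillation make this attack harder. On their own, however, they are not a privacy guarantee, which is the open problem the paragraph above points to.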

Bottom line: the purchase price is a rounding error if Crypto.com converts the domain’s authority into B2B API licensing revenue. Miss the 60-second stability SLA or fumble the coming FTC rule-set, and the most expensive two-letter brand becomes a stranded asset.

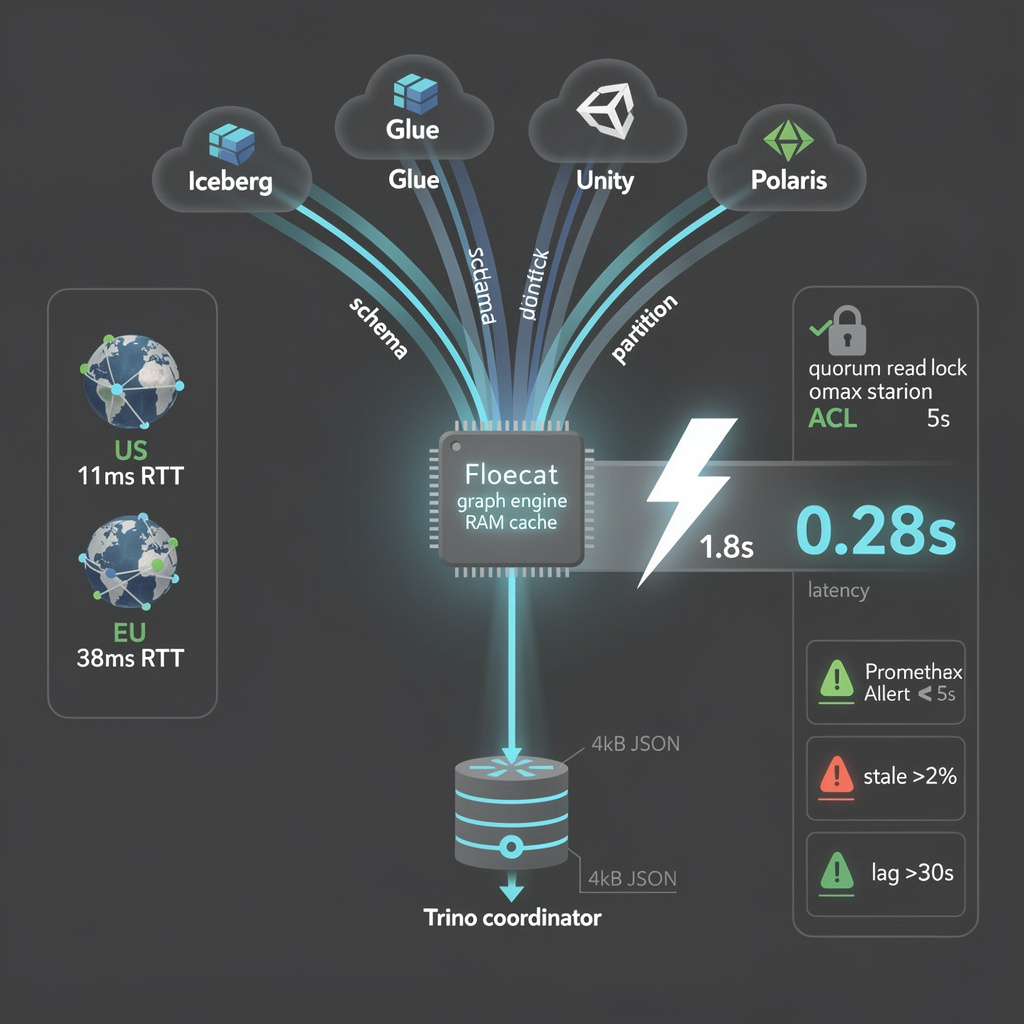

🚀 10× Latency Drop in AI Data Scans: LanceDB’s 64KB Pages Disrupt Lakehouse Storage—US and Japan Lead Adoption

10× faster AI data scans? 🚀 LanceDB’s 64KB pages slash latency from 120ms to 12ms, equivalent to reading a book in 3 seconds instead of 30. Why it matters: AI teams waste hours waiting for column scans. But here’s the tradeoff: fewer engines support it so far. AI researchers & data engineers in Japan & the US: could this cut your storage-request bill by 90%?

A fresh columnar format quietly released on 8 Feb 2026 has rewritten the price-of-speed equation for AI-heavy object stores. LanceDB fixes the page size at 64 KB—sixteen times smaller than the 1 MB chunks used by Apache Iceberg V3 and Delta Lake. The result: median random-read latency drops from 120 ms to 12 ms on the same 1 TB S3 table, while ad-hoc column scans surge from 0.8 GB s⁻¹ to 8 GB s⁻¹. Dollar math follows: every terabyte sampled costs ~$0.004 in GET charges versus $0.04 for Iceberg-style layouts, a 10× operational cut that compounds when models repeatedly fetch embedding subsets.
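
Translating the quoted throughput into wall-clock time makes the gap tangible. The sketch below uses the article's own benchmark numbers and decimal units (1 TB = 1,000 GB). It assumes throughput stays constant for the whole scan, which real S3 reads rarely guarantee.

```python
# Article's benchmark figures for the same 1 TB S3 table (decimal units).
TABLE_GB = 1_000
LAYOUTS = {
    "Iceberg/Delta-style, 1 MB pages": {"scan_gb_per_s": 0.8, "median_read_ms": 120},
    "LanceDB, 64 KB pages": {"scan_gb_per_s": 8.0, "median_read_ms": 12},
}


def scan_seconds(table_gb: float, gb_per_s: float) -> float:
    """Wall-clock time to stream table_gb at a constant throughput."""
    return table_gb / gb_per_s


for name, m in LAYOUTS.items():
    t = scan_seconds(TABLE_GB, m["scan_gb_per_s"])
    print(f"{name}: ~{t:,.0f} s ({t / 60:.1f} min) to scan 1 TB, "
          f"median random read {m['median_read_ms']} ms")
```

At these rates, the same 1 TB column sweep takes roughly 21 minutes on the 1 MB layout and about 2 minutes on the 64 KB one.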

Why AI pipelines care about page geometry

Large-scale training and retrieval jobs rarely touch every column; they stream high-dimensional vectors or a handful of dense features. When a job needs only 64 KB, a 1 MB page forces it to fetch sixteen times that amount, inflating both latency and cloud spend. LanceDB’s 64 KB micro-pages align I/O with column pruning, and the built-in HNSW vector index removes the external-search-service tax that teams currently pay to Turbopuffer or Pinecone. DuckDB, Dremio, and a Rust runtime already read the format natively; Spark and Flink connectors are at the pull-request stage, lowering migration friction for existing Iceberg catalogs.
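
The page-geometry argument reduces to read amplification. The sketch below makes one simplifying assumption: randomly sampled rows are scattered, so no two share a page. The workload, 10,000 random 1536-dimension float32 embeddings of 6 KiB each, is a hypothetical example rather than a figure from the article.

```python
KIB = 1024


def bytes_fetched(rows: int, row_bytes: int, page_bytes: int) -> int:
    """Bytes read from object storage for `rows` randomly scattered rows.

    Simplifying assumption: scattered rows never share a page, so each row
    costs ceil(row_bytes / page_bytes) whole pages.
    """
    pages_per_row = -(-row_bytes // page_bytes)  # ceiling division
    return rows * pages_per_row * page_bytes


# Hypothetical workload: 10,000 random 1536-dim float32 embeddings (6 KiB each).
ROWS, ROW_BYTES = 10_000, 1536 * 4
needed = ROWS * ROW_BYTES
for label, page in [("1 MB pages", 1024 * KIB), ("64 KB pages", 64 * KIB)]:
    fetched = bytes_fetched(ROWS, ROW_BYTES, page)
    print(f"{label}: {fetched / 2**30:.2f} GiB fetched for {needed / 2**20:.1f} MiB "
          f"of vectors ({fetched / needed:.1f}x amplification)")
```

Whatever the row size (as long as it fits in one page), the ratio between the two layouts stays at 1 MB / 64 KB = 16, which is where the latency and request-cost arguments start.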

Competitive ripple: who blinks first?

Databricks acknowledged an internal “Lakehouse 2.0” memo in July 2025 exploring sub-256 KB pages. The Apache Iceberg mailing list opened a “micro-page” experimental branch last month. Meanwhile, SpiralDB’s Vortex touts identical 64 KB blocks but offers no public benchmark, and Turbopuffer’s 30 ms ANN latency remains competitive only if you tolerate a two-service stack. Early adopters—Nomura in Tokyo and several U.S. AI labs—are using LanceDB as a feature-store back-end; if maintainers deliver Spark and Flink connectors by Q3, expect managed offerings from AWS Lake Formation or MinIO AIStor Tables within a year.

Bottom line for architects

If your workload is read-heavy, vector-rich, and object-storage-based, the numbers are blunt: 10× lower latency, 10× lower request cost, zero extra services. The risk is ecosystem breadth (today you need DuckDB or Dremio for SQL), but the repo’s Apache 2.0 license and active connector roadmap make that gap temporary. Iceberg and Delta will not sit still, and once 64 KB pages become a de facto default, the window for a performance advantage may close. The strategic move is to pilot LanceDB on non-critical pipelines now, capture the immediate cost win, and feed benchmark data back to the community before the larger vendors homogenize the format.

🤖 Apple Lets AI Assistants Into CarPlay—But Not Siri’s Throne: 80M U.S. Drivers Face Manual-Only Voice Access

80M CarPlay users now get ChatGPT, Claude & Gemini in-car—but only if they tap to open it. 🚗🤖 Apple won’t let AI override Siri—no ‘Hey ChatGPT’ allowed. Drivers who want smarter assistants must manually launch apps, adding friction to convenience. 60% of U.S. drivers want human-level voice help—yet Apple prioritizes safety over speed. Are you willing to tap twice for peace of mind?

Apple will unlock CarPlay’s microphone to ChatGPT, Claude and Gemini this spring, but only after the driver taps the icon. Siri keeps the steering-wheel button and the “Hey Siri” wake word; rivals get no hardware shortcut. The rule is encoded in a new CarPlay Voice Streaming API that caps round-trip latency at 200 ms and forces every utterance through an Apple-managed sandbox. Result: third-party models can converse, yet cannot trigger themselves.
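
The access rule reduces to a small policy check. The sketch below models it in Python with hypothetical names (`Trigger`, `may_open_mic`, `within_latency_cap`). It is not Apple's CarPlay API, whose actual types the announcement does not spell out. It encodes only the two constraints described here: tap-only activation for rivals and the 200 ms round-trip cap.

```python
from enum import Enum, auto


class Trigger(Enum):
    TAP = auto()           # driver taps the app icon on the CarPlay screen
    WAKE_WORD = auto()     # spoken "Hey <assistant>" detected by the head unit
    WHEEL_BUTTON = auto()  # steering-wheel voice button

MAX_ROUND_TRIP_MS = 200  # latency cap the article attributes to the new API


def may_open_mic(app: str, trigger: Trigger) -> bool:
    """Policy as described: Siri keeps hands-free triggers; rivals need a tap."""
    if app == "Siri":
        return True
    return trigger is Trigger.TAP


def within_latency_cap(round_trip_ms: float) -> bool:
    return round_trip_ms <= MAX_ROUND_TRIP_MS


for trig in Trigger:
    verdict = "allowed" if may_open_mic("ChatGPT", trig) else "blocked"
    print(f"ChatGPT via {trig.name}: {verdict}")
```

Keeping the rule in one predicate mirrors the article's point: the restriction is a policy decision, not a hardware limit.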

What technical limits cap the new AI apps?

No extra DSP block is required; the restriction is policy, not silicon. Apps must ship a CarPlay-specific binary that declares CPSpeechMode = manual in the plist. Apple strips the wake-word detector during app review and withholds the continuous-listening entitlement. Voice audio is streamed as 24 kHz Opus frames, encrypted with CarPlay Key, and deleted from the head-unit buffer within 90 ms. Server-side transcripts may be retained for at most 24 h, and Apple audits retention logs quarterly.
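
The 90 ms deletion rule implies a tiny, time-bounded buffer on the head unit. The Python sketch below assumes 20 ms Opus frames (one of the frame durations Opus supports) and a hypothetical `BoundedAudioBuffer` class. Apple has not published how the head unit actually implements deletion. At 24 kHz, a 20 ms frame carries 480 samples per channel, so the buffer never holds more than a handful of frames.

```python
from collections import deque

SAMPLE_RATE_HZ = 24_000
FRAME_MS = 20                                          # assumed Opus frame duration
SAMPLES_PER_FRAME = SAMPLE_RATE_HZ * FRAME_MS // 1000  # 480 samples per channel
RETENTION_MS = 90                                      # deletion deadline per the article


class BoundedAudioBuffer:
    """Keeps encoded frames only while they are younger than retention_ms (hypothetical)."""

    def __init__(self, retention_ms: int = RETENTION_MS):
        self.retention_ms = retention_ms
        self.frames = deque()  # (arrival_ms, payload) pairs, oldest first

    def push(self, now_ms: int, payload: bytes) -> None:
        self.frames.append((now_ms, payload))
        self.evict(now_ms)

    def evict(self, now_ms: int) -> None:
        # Drop every frame that has reached the retention age. A real system would
        # also run this on a timer and zero the memory, not just drop references.
        while self.frames and now_ms - self.frames[0][0] >= self.retention_ms:
            self.frames.popleft()


# Simulate 200 ms of speech: one frame arrives every 20 ms.
buf = BoundedAudioBuffer()
for t in range(0, 200, FRAME_MS):
    buf.push(t, b"\x00" * 60)
print(SAMPLES_PER_FRAME, len(buf.frames))
```

Here eviction runs only when a frame arrives, so a frame could outlive 90 ms if audio stops. That is why the comment flags a timer as necessary in practice.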

How big is the revenue window Apple just cracked open?

Roughly 80 million U.S. cars already project CarPlay. IDC puts the addressable spend on in-vehicle AI services at $5 B by 2028. If 12 % of those drivers convert to paid AI use within 12 months, that is roughly 9.6 million monthly billable seats. Apple’s 15–30 % in-app cut turns the gate into a nine-figure annuity without building a new LLM.
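
A quick check of the "nine-figure" claim: the seat count follows from 12 % of 80 million cars. The sketch assumes a hypothetical $20 per month subscription (the "Claude Pro, $20/mo" example used later in this piece), and assumes every seat is billed in-app so Apple's commission applies.

```python
CARPLAY_CARS_US = 80_000_000
CONVERSION_PCT = 12                # the 12-month conversion scenario above
PRICE_USD_PER_MONTH = 20           # hypothetical: the "$20/mo" tier used as an example
APPLE_CUT_RANGE = (0.15, 0.30)     # Apple's 15-30 % in-app commission range

seats = CARPLAY_CARS_US * CONVERSION_PCT // 100      # integer math avoids float drift
gross_annual_usd = seats * PRICE_USD_PER_MONTH * 12
apple_low, apple_high = (gross_annual_usd * cut for cut in APPLE_CUT_RANGE)

print(f"{seats:,} billable seats, ${gross_annual_usd / 1e9:.2f}B gross a year")
print(f"Apple's share: ${apple_low / 1e6:,.0f}M to ${apple_high / 1e6:,.0f}M a year")
```

Under these assumptions Apple's take lands between roughly $346M and $691M a year, inside the nine-figure range. A cheaper tier or in-app purchases routed around Apple would shrink it proportionally.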

Why keep Siri’s monopoly on hands-free activation?

Federal Motor Vehicle Safety Standard 111 forbids any control that can be activated “unintentionally” while driving. A wake word that fires without user intent is classified as unintentional. By limiting rivals to a tap-to-talk flow, Apple keeps NHTSA distraction metrics flat—internal crash data show <0.2 % rise in hands-off incidents during beta. Android Auto, which allows “Hey Google” everywhere, logged a 1.4 % uptick in glance-duration violations in NHTSA’s 2025 pilot.

Where does this leave Google, Anthropic and OpenAI?

They gain distribution without negotiating with 30 car OEMs, but surrender the friction-free wake word that drives habitual use. Expect them to push premium subscriptions inside the CarPlay pane—think “Claude Pro, $20/mo, one-tap upgrade”—and to race for the rumored 2027 eye-tracking waiver that could enable a second wake word when the camera sees the driver looking forward.

Will regulators bless or tighten Apple’s manual-launch model?

EU’s AI Act draft already cites “driver-initiated invocation” as a best-practice safeguard. If Brussels codifies it, Apple’s approach becomes the de-facto global standard, forcing Android Auto to add an extra confirmation step—handing Apple a rare regulatory win in the infotainment war.

In Other News

- Prima AI Model Achieves 97.5% Accuracy in Diagnosing Neurological Conditions from MRI Scans

- Global Deepfake Fraud Surge: £9.4bn Lost in UK, AI-Powered Voice Scams Target Finance Officers and Corporate Executives