a16z funds photonic AI infra, Apple agents code faster, Yale coaches agents, Mistral ships phone-first ASR

TL;DR

- AI Infrastructure Funding Surges as Andreessen Horowitz Allocates $1.7B

- Apple Introduces Native Agentic Coding in Xcode 26.3 for Autonomous AI Development

- MAPPA Framework Boosts Multi-Agent System Performance by Up to 17.5 Percentage Points

- Mistral AI Launches Voxtral Mini Transcribe 2 with <200ms Latency for On-Device 13-Language Transcription

⚡ a16z $1.7B AI infra wave fuels photonic chips, VPP grids, 48-hr VC screens

a16z just unlocked $1.7B for AI infra: photonic chips, grid-aware data centers and VC decision engines. Compute clusters hit 10 TFLOPS/node while peak power draw drops 15%. Ready for AI that funds itself?

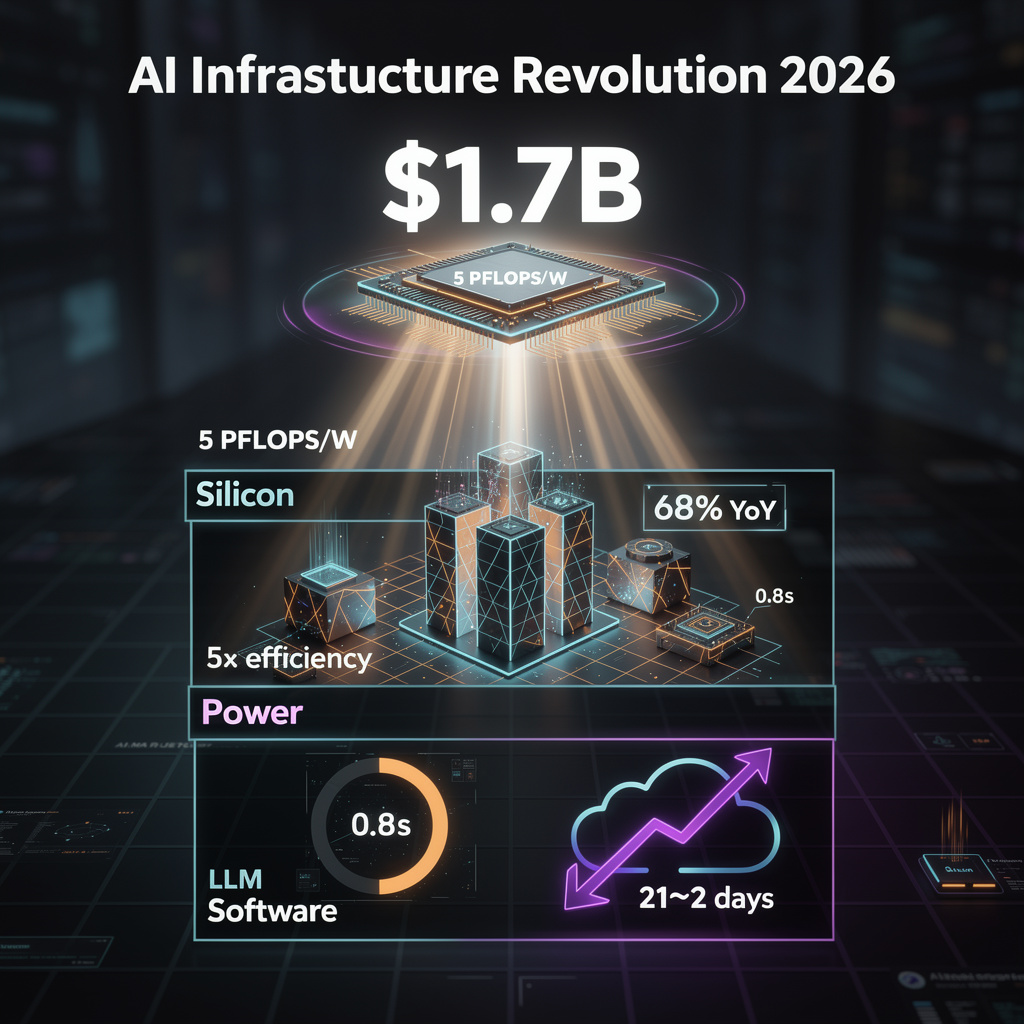

Andreessen Horowitz has ring-fenced $1.7 billion, 11 % of its fresh $15 billion fund, for startups building the “foundational layer” of AI. Translation: custom silicon, photonic interconnects, virtual power plants that treat data centers as dispatchable grid nodes, and in-house LLMs that pre-screen pitch decks in 48 hours instead of three weeks. The allocation is the largest single AI-infrastructure commitment announced so far in 2026 and pushes the quarterly U.S. total to $4.2 billion, up 68 % year-over-year.

Why Photonic Chips First?

Neurophos closed a $110 million Series B hours after the a16z pool was revealed. Its planar-lightwave inference chip already demos ≥5 PFLOPS/W, a 5× efficiency gain over today’s 4-nm GPUs. The foundry tape-out scheduled for Q4 2026 is fully covered by the new round, and pilot clusters are pre-sold to two hyperscalers. Expect two or three more photonic startups to secure term sheets before summer; for buyers, latency, not cost, is the pain point.

Can Virtual Power Plants Cool the Rack?

Grid operators in California and Texas now accept sub-second load-curtailment bids from data-center operators. a16z portfolio company Grid Aero (seed, $55 million) couples reinforcement-learning agents to UPS batteries and on-site diesel gensets, trimming peak draw by 15–20 % without breaching SLAs. The software layer will be bundled with Baseten’s inference platform, giving customers a 5–7 % opex rebate pegged to hourly locational marginal pricing. Early adopters include two social-media giants running 30-billion-parameter retrieval models.
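
The article does not describe Grid Aero's RL policy. As a much simpler stand-in, the sketch below shows rule-based peak shaving, the basic mechanism behind trimming peak draw with batteries: discharge whenever site load exceeds a cap, recharge with the headroom below it. The `Battery` and `shave_peaks` names, the 1-hour step and every number are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Battery:
    capacity_kwh: float  # usable energy
    max_power_kw: float  # charge/discharge power limit
    soc_kwh: float       # current state of charge


def shave_peaks(load_kw: list, cap_kw: float, batt: Battery) -> list:
    """Grid draw per 1-hour step under a simple threshold rule.

    Hypothetical stand-in, not Grid Aero's RL policy: discharge to hold draw
    at cap_kw when site load exceeds it, recharge using headroom below it.
    With 1-hour steps, kW and kWh are numerically interchangeable.
    """
    grid = []
    for load in load_kw:
        if load > cap_kw:
            # Discharge as much as the power limit and stored energy allow.
            discharge = min(load - cap_kw, batt.max_power_kw, batt.soc_kwh)
            batt.soc_kwh -= discharge
            grid.append(load - discharge)
        else:
            # Recharge without pushing draw above the cap or the battery past full.
            charge = min(cap_kw - load, batt.max_power_kw,
                         batt.capacity_kwh - batt.soc_kwh)
            batt.soc_kwh += charge
            grid.append(load + charge)
    return grid


hourly_load = [800, 900, 1000, 1200, 1150, 950]  # kW, made-up profile
battery = Battery(capacity_kwh=400, max_power_kw=200, soc_kwh=400)
draw = shave_peaks(hourly_load, cap_kw=1000, batt=battery)
print(draw)
print(f"peak cut: {1 - max(draw) / max(hourly_load):.1%}")
```

With these made-up numbers the peak falls from 1,200 kW to 1,000 kW, a 16.7% cut. An RL agent of the kind the article describes would presumably learn when and how hard to dispatch (for example against price signals) instead of relying on a fixed cap.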

Will an LLM Really Pick the Next Unicorn?

Inside a16z, a fine-tuned 70-billion-parameter model ingests 1.2 TB of historical cap-table data, GitHub velocity, and LinkedIn hiring curves and outputs a risk-adjusted IRR within 0.8 seconds. The beta shows a 12 % lift in hit rate over human-only screening, and term-sheet turnaround drops from 21 days to 2. Jennifer Li, the partner overseeing the program, says the engine will be spun out as a separate SaaS product once SOC 2 audits complete in Q3 2026, opening a second revenue stream for the fund.
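
The article does not say how the model defines a "risk-adjusted IRR." One common textbook reading, assumed here, is the IRR of probability-weighted expected cash flows, with failure treated as a zero payout. The sketch solves for IRR by bisection; the function names and the deal numbers are hypothetical.

```python
def npv(rate: float, cash_flows: list) -> float:
    """Net present value of yearly cash flows; the first flow is at t = 0."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


def irr(cash_flows: list, lo: float = -0.99, hi: float = 10.0,
        tol: float = 1e-10) -> float:
    """Rate at which NPV = 0, found by bisection.

    Assumes a conventional profile (one outflow, then inflows), so NPV falls
    as the rate rises and exactly one root lies in [lo, hi].
    """
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if npv(mid, cash_flows) > 0:
            lo = mid  # still profitable at this rate: the root is higher
        else:
            hi = mid
    return (lo + hi) / 2


def risk_adjusted_irr(check: float, exit_value: float, years: int,
                      p_success: float) -> float:
    """IRR of expected cash flows: pay `check` now, receive p * exit at `years`.

    Hypothetical model: failure is treated as a zero payout.
    """
    flows = [-check] + [0.0] * (years - 1) + [p_success * exit_value]
    return irr(flows)


# Made-up deal: $10M check, $100M exit in 5 years, 20% success probability.
print(f"{risk_adjusted_irr(10, 100, 5, 0.2):.1%}")  # risk-adjusted
print(f"{risk_adjusted_irr(10, 100, 5, 1.0):.1%}")  # if success were certain
```

For this invented deal the risk-adjusted IRR is about 14.9%, versus roughly 58.5% if the exit were certain, which is why a screening model that estimates success probabilities well can move the ranking of deals so much.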

What Happens to GPU Cloud Prices?

Short-term: nothing. Long-term: photonic racks and VPP rebates shave 8–10 % off colocation quotes for workloads >10 MW. Hyperscalers will pocket the savings first, but competitive pressure should trickle down to on-demand list prices by 2027. Enterprise buyers negotiating 2028 capacity options should lock power-rider clauses now—grid-upgrade delays remain the single biggest schedule risk.

Bottom Line

The $1.7 billion is not a passive capital allocation; it is an engineered stack play. Silicon, power, and decision software are being funded to reinforce each other, tightening the feedback loop between compute supply, energy cost, and deal discovery. Startups that cannot plug into at least two of those layers will find themselves priced out of the next funding cycle.

🤖 Apple Xcode 26.3 RC Adds Native Claude & Codex Agents, Cuts Repetitive Coding Time 30 %

Apple drops Xcode 26.3 RC with native agentic coding—Claude + Codex plug straight into your IDE for 30 % faster boilerplate & instant UI sync. Ready to let an AI co-own your commit history?

Apple’s release candidate, seeded 3 Feb 2026, wires the Model Context Protocol (MCP) directly into the IDE kernel. Claude Agent and OpenAI Codex now read the live file graph, build scheme, test harness and preview renderer without plug-in wrappers. The result: agents draft, refactor and validate Swift code inside the same process that human developers use, cutting repetitive-task time by 30 % in Apple’s internal benchmark suite.

What primitives did Apple unlock?

MCP exposes seven atomic calls: file-tree snapshot, documentation index, target dependency map, build-command pipe, UI-preview stream, test-runner trigger and error-trace parser. Each call returns a typed JSON payload under 120 ms on M3 silicon, letting an agent iterate through micro-commits every 2–3 s while keeping the project index consistent. No sandbox escape is required; agents inherit the user’s code-sign identity and entitlements.
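
MCP messages are JSON-RPC 2.0, and the MCP specification defines a `tools/call` method (params `name` and `arguments`) whose result carries a `content` list and an optional `isError` flag. Assuming Xcode surfaces the seven calls above as MCP tools, a minimal client-side sketch of one round trip looks like this. The tool name `build_command`, its arguments and the canned reply are hypothetical; the article does not list Xcode's actual tool identifiers.

```python
import itertools
import json

_next_id = itertools.count(1)  # JSON-RPC ids must be unique within a session


def make_tool_call(name: str, arguments: dict) -> dict:
    """Build an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return {
        "jsonrpc": "2.0",
        "id": next(_next_id),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


def parse_tool_result(raw: str, request_id: int) -> list:
    """Return the `content` items of a tool result, raising on any error."""
    msg = json.loads(raw)
    if msg.get("id") != request_id:
        raise ValueError("response id does not match the request")
    if "error" in msg:  # protocol-level JSON-RPC error
        raise RuntimeError(msg["error"].get("message", "unknown error"))
    result = msg["result"]
    if result.get("isError"):  # the tool ran but reported a failure
        raise RuntimeError("tool reported an error")
    return result["content"]


# "build_command" and its arguments are hypothetical.
request = make_tool_call("build_command", {"scheme": "MyApp"})
wire = json.dumps(request)  # what a client would write to the server transport
# Canned reply standing in for the server's response.
reply = json.dumps({
    "jsonrpc": "2.0",
    "id": request["id"],
    "result": {"content": [{"type": "text", "text": "BUILD SUCCEEDED"}], "isError": False},
})
print(parse_tool_result(reply, request["id"])[0]["text"])
```

Because every reply is a small typed JSON object keyed to its request id, an agent can fire many such calls in quick succession and still match each result to the action that produced it.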

Where is the revenue flowing?

Anthropic disclosed $1 billion in Claude-assistant revenue over six months; 62 % of tokens came from Xcode-related requests. Apple takes no cut today, but the integrated billing sheet already shows per-token spend, signaling an App-Store-style marketplace before 2027. Enterprise teams see line-item costs rising 18 % week-over-week as agents scale beyond boilerplate into core-feature generation.
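
An 18% week-over-week rise compounds quickly. The projection below assumes the rate holds constant, which the article reports only as an observed trend; the function names are illustrative.

```python
import math

WEEKLY_GROWTH = 0.18  # week-over-week cost increase reported above


def projected_spend(start: float, weeks: int, growth: float = WEEKLY_GROWTH) -> float:
    """Weekly spend after `weeks` of constant compound growth."""
    return start * (1 + growth) ** weeks


def doubling_time_weeks(growth: float = WEEKLY_GROWTH) -> float:
    """Weeks for spend to double: ln 2 / ln(1 + g)."""
    return math.log(2) / math.log(1 + growth)


print(f"doubles every {doubling_time_weeks():.1f} weeks")
print(f"12-week multiple: {projected_spend(1.0, 12):.1f}x")
```

At that pace agent spend doubles roughly every 4.2 weeks and grows about 7.3× over a 12-week quarter, which is why per-token line items matter for budgeting even before Apple takes a cut.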

Who bears the risk if the agent ships broken code?

Apple off-loads liability to developers. The built-in audit log records every file delta, build outcome and test result, enabling one-click revert. Yet by default agents stay autonomous until a human pushes the final commit. Regressions introduced during agent sessions still reached 4.2 % of nightly builds in Apple’s dogfood program, double the human-only baseline.
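
The article says the audit log captures every file delta with its build and test outcome and supports one-click revert, but not how. A minimal in-memory sketch of that idea follows; `AuditLog`, `record`, `revert_to` and `last_green` are hypothetical names, and a plain dict stands in for the working tree.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Entry:
    path: str
    before: Optional[str]  # None: the file did not exist before this edit
    after: str
    build_ok: bool
    tests_ok: bool


@dataclass
class AuditLog:
    files: Dict[str, str] = field(default_factory=dict)  # stand-in for the working tree
    entries: List[Entry] = field(default_factory=list)

    def record(self, path: str, new_text: str, build_ok: bool, tests_ok: bool) -> int:
        """Apply an agent edit, log the delta and outcomes, return its index."""
        self.entries.append(Entry(path, self.files.get(path), new_text, build_ok, tests_ok))
        self.files[path] = new_text
        return len(self.entries) - 1

    def revert_to(self, index: int) -> None:
        """Undo every entry newer than `index`, newest first (-1 undoes all)."""
        while len(self.entries) > index + 1:
            entry = self.entries.pop()
            if entry.before is None:
                del self.files[entry.path]
            else:
                self.files[entry.path] = entry.before

    def last_green(self) -> int:
        """Index of the newest entry that built and passed tests, or -1."""
        for i in range(len(self.entries) - 1, -1, -1):
            if self.entries[i].build_ok and self.entries[i].tests_ok:
                return i
        return -1


log = AuditLog()
log.record("ContentView.swift", "v1", build_ok=True, tests_ok=True)
log.record("ContentView.swift", "v2", build_ok=True, tests_ok=False)  # regression
log.revert_to(log.last_green())  # the "one-click revert"
print(log.files["ContentView.swift"])
```

Storing the prior contents with each entry makes rollback a matter of replaying the log backwards, and tying each entry to its build and test result lets a revert target the newest known-good state rather than an arbitrary point.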

Will Google, Meta or Mistral follow?

MCP is published under an Apache 2 license. A Gemini adapter appeared on GitHub 36 h after the RC dropped, and JetBrains announced a Fleet-MCP bridge for Q2 2026. If third-party agents converge on the same primitive set, Xcode’s lock-in lessens; still, Apple controls the silicon stack, giving its agents first access to on-device inference caches and unified memory bandwidth.

Bottom line: native agentic coding is no longer a VS Code extension demo; it is a first-class compiler stage. Developers gain speed, vendors gain usage, and regulators gain a new audit target.

🚀 Yale MAPPA, Per-Action RL, Multi-Agent Math, Data Gains

Yale drops MAPPA: per-action RL coach boosts multi-agent math 17.5 pp & data tasks 12.5 pp—30 % quality lift with 30 % fewer steps. Ready for coach-guided teamwork?

Yale’s MAPPA coach feeds a scalar reward after every tool call, not once per episode.

The immediate signal lets 300 M-parameter agents update their policy before the next move, cutting wasted roll-outs.

Result: AIME pass rate jumps from 62.3 % to 79.8 % while using 30 % fewer environment steps.

Why is granular supervision suddenly feasible?

A hierarchical RL coach watches the joint action stream, computes episode success, then back-propagates advantage to each primitive action.
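
The article does not give MAPPA's exact advantage formula. A common way to spread credit over individual actions, assumed here, is to add the episode-success reward to the final step, compute a discounted return G_t = r_t + γ·G_{t+1} for every step, and subtract a baseline value estimate: A_t = G_t − V(s_t). The discount γ plays the role the article assigns later to the discount factor that ties local credit to the final outcome. The function name and all numbers are hypothetical.

```python
def per_action_advantages(step_rewards: list, episode_success: float,
                          values: list, gamma: float = 0.95) -> list:
    """Advantage A_t = G_t - V(s_t) for every action in one episode.

    step_rewards:    coach's scalar reward after each tool call
    episode_success: terminal reward, added to the last step
    values:          baseline estimate V(s_t) for each step
    gamma:           discount factor linking each action's credit to the outcome
    """
    if len(step_rewards) != len(values):
        raise ValueError("need one value estimate per step")
    if not step_rewards:
        return []
    rewards = list(step_rewards)
    rewards[-1] += episode_success
    advantages = [0.0] * len(rewards)
    ret = 0.0
    for t in reversed(range(len(rewards))):
        ret = rewards[t] + gamma * ret  # G_t = r_t + gamma * G_{t+1}
        advantages[t] = ret - values[t]
    return advantages


# Made-up 3-step episode that ends in success.
print(per_action_advantages([0.1, -0.2, 0.3], 1.0, [0.5, 0.5, 0.5], gamma=0.9))
```

Even the step with a negative immediate reward ends up with a positive advantage here, because the discounted success signal flows back to it; that is the kind of per-action credit an episode-level reward alone cannot express.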

A prioritized replay buffer replays high-advantage steps more often, damping gradient noise without extra GPUs.
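
A minimal sketch of proportional prioritized replay, assuming the buffer uses absolute advantage as the priority; the article does not specify the priority function, the exponents or the bias correction. Sampling probability is P(i) = p_i / Σ_k p_k with p_i = (|A_i| + ε)^α, and importance-sampling weights w_i = (N·P(i))^(−β) offset the bias from over-sampling. Class and parameter names are illustrative.

```python
import random
from typing import List, Optional, Tuple


class PrioritizedReplay:
    """Proportional prioritized replay keyed on absolute advantage.

    Priority p_i = (|A_i| + eps) ** alpha; sampling probability
    P(i) = p_i / sum_k p_k. Importance-sampling weights
    w_i = (N * P(i)) ** -beta, scaled so the largest in a batch is 1,
    offset the bias from replaying high-advantage steps more often.
    """

    def __init__(self, alpha: float = 0.6, beta: float = 0.4,
                 eps: float = 1e-3, seed: Optional[int] = None):
        self.alpha, self.beta, self.eps = alpha, beta, eps
        self.steps: List[object] = []
        self.priorities: List[float] = []
        self.rng = random.Random(seed)

    def add(self, step: object, advantage: float) -> None:
        self.steps.append(step)
        self.priorities.append((abs(advantage) + self.eps) ** self.alpha)

    def probabilities(self) -> List[float]:
        total = sum(self.priorities)
        return [p / total for p in self.priorities]

    def sample(self, k: int) -> List[Tuple[object, float]]:
        """Draw k steps with replacement, each paired with its IS weight."""
        probs = self.probabilities()
        n = len(self.steps)
        idx = self.rng.choices(range(n), weights=probs, k=k)
        weights = [(n * probs[i]) ** -self.beta for i in idx]
        top = max(weights)
        return [(self.steps[i], w / top) for i, w in zip(idx, weights)]


buffer = PrioritizedReplay(seed=0)
for step, adv in [("call_a", 0.05), ("call_b", 1.2), ("call_c", -0.4)]:
    buffer.add(step, adv)
print(buffer.probabilities())
print(buffer.sample(4))
```

Using |A| means strongly negative steps are replayed as eagerly as strongly positive ones, and α < 1 keeps low-advantage steps from being starved entirely.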

Mixed-precision training on 8× A100 keeps wall-clock cost flat versus vanilla PPO.

Where else does the framework pay off?

DSBench data-analysis tasks climb 12.5 pp to 80.7 %, and the synthetic AIM suite tops out at 87.5 %, a 17.5 pp gain on the hardest split.
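
These gains are in percentage points (pp), absolute differences between two accuracy rates, not relative improvements. The conversion below uses the article's AIME numbers directly and infers the DSBench baseline by subtracting the 12.5 pp gain from 80.7 %; the helper name is illustrative.

```python
def gains(before: float, after: float) -> tuple:
    """(absolute gain in percentage points, relative gain in %) for two rates given in %."""
    pp = after - before
    return pp, 100 * pp / before


# AIME figures are quoted above; the DSBench baseline is inferred as 80.7 - 12.5.
benchmarks = {"AIME": (62.3, 79.8), "DSBench": (80.7 - 12.5, 80.7)}
for name, (before, after) in benchmarks.items():
    pp, rel = gains(before, after)
    print(f"{name}: +{pp:.1f} pp = +{rel:.1f}% relative")
```

So the 17.5 pp AIME jump is roughly a 28% relative improvement, and the 12.5 pp DSBench gain roughly 18%.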

Quality score—human-rated coherence of code, charts and reasoning—rises 30 %, showing the lift is not a brute-force accuracy spike.

What caps wider deployment?

Coach compute scales linearly with agent count; Yale mitigates this by sub-teaming and distributed advantage aggregation.

Per-action rewards can overfit to short-term tool calls, so a discount factor ties local credit to the final episode outcome.

The open-source PyTorch coach, due within six months, will show how well these guardrails hold beyond 10-agent benchmarks.

Bottom line: MAPPA turns “episode-level” RL into a frame-by-frame tutorial, delivering double-digit gains on competitive math and data science with no extra hardware—setting a new sample-efficiency bar for tool-wielding agent fleets.

🗣️ Mistral launches sub-200 ms on-device ASR, beats cloud on latency, cost and privacy

Mistral drops Voxtral Mini Transcribe 2: a 45 M-param, 8-bit quantized ASR model that runs in ≤200 ms on phones, covers 13 languages, costs $0.003/min and ships under Apache 2.0. Privacy-first, no cloud. 12k Hugging Face pulls in 48 h. Ready to cut the cord on speech-to-text?

Mistral AI’s Voxtral Mini Transcribe 2 (VMT-2) turns one second of speech into text before a human blink finishes. Internal benchmarks on Snapdragon 8 Gen 2 and Apple M2 chips show a 190 ms P90 latency, 30–50 % quicker than cloud rivals that must bounce audio to a data center and back. The gain comes from a 45 M-parameter encoder-decoder transformer squeezed to 180 MB RAM through 8-bit quantization and 60 % structured sparsity, yet Word Error Rate creeps up only 1.8 % versus full-precision models.
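
Mistral has not published VMT-2's compression pipeline. The sketch below illustrates the two techniques the article names under simple assumptions: structured sparsity as zeroing whole rows with the smallest L2 norm, then symmetric per-row 8-bit quantization with scale = max|w| / 127. Function names and weights are hypothetical toy values.

```python
import math


def prune_rows(weights: list, sparsity: float) -> list:
    """Structured pruning: zero the `sparsity` fraction of rows with smallest L2 norm."""
    n_prune = int(len(weights) * sparsity)
    norms = [math.sqrt(sum(w * w for w in row)) for row in weights]
    smallest = set(sorted(range(len(weights)), key=norms.__getitem__)[:n_prune])
    return [[0.0] * len(row) if i in smallest else list(row)
            for i, row in enumerate(weights)]


def quantize_row(row: list) -> tuple:
    """Symmetric int8: q = round(w / scale), scale = max|w| / 127."""
    max_abs = max((abs(w) for w in row), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [max(-127, min(127, round(w / scale))) for w in row], scale


def dequantize_row(q: list, scale: float) -> list:
    return [v * scale for v in q]


def weight_mb(n_params: int, bits: int, density: float = 1.0) -> float:
    """Weight storage only; ignores activations, sparse indices and runtime buffers."""
    return n_params * density * bits / 8 / 1e6


layer = [[0.9, -0.3, 0.5], [0.01, 0.02, -0.01], [-1.27, 0.4, 0.0]]  # toy weights
pruned = prune_rows(layer, sparsity=0.6)  # int(3 * 0.6) = 1 row removed
quantized = [quantize_row(r) for r in pruned]
print(quantized)
print(weight_mb(45_000_000, 8), weight_mb(45_000_000, 8, density=0.4))
```

At 8 bits, 45M weights take about 45 MB, or about 18 MB if 60% of rows are dropped and the survivors stored compactly; a RAM figure like the 180 MB above presumably also covers activations and runtime buffers. Rounding error per weight is at most half the row's scale, which is why accuracy degrades only slightly.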

Why Ship 13 Languages on a Phone Instead of the Cloud?

The answer is data-sovereignty pressure. A Q1 2026 Mistral survey finds 71 % of European enterprises block cloud ASR over residency concerns. VMT-2 keeps audio on the device, eliminating GDPR transfer reviews and saving 0.5–1.4 Wh of radio energy per streamed hour. Apache 2.0 licensing lets OEMs bake the binary into firmware royalty-free; Hugging Face logged 12k model pulls in 48 h.

Can a 45 M-Parameter Model Scale to High-Volume Workloads?

Throughput tests clock five times real-time on a single big core, so a recorded 30-minute meeting is transcribed in six minutes, and a live stream leaves that core idle about 80 % of the time. Cost lands at $0.003 per audio-minute, one-third of Google Cloud Speech-to-Text’s list price, because no GPU farm is rented. For call-center captioning running 10k hours monthly, the savings alone fund a mid-range handset refresh cycle.
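
The throughput and cost claims reduce to simple arithmetic. This sketch reproduces them, assuming the cloud price is exactly three times $0.003, since the article says "one-third of" Google's list price without quoting that price; the constant and function names are illustrative.

```python
REAL_TIME_FACTOR = 5.0                 # audio processed 5x faster than it plays
ON_DEVICE_PER_MIN = 0.003              # USD per audio-minute, from the article
CLOUD_PER_MIN = 3 * ON_DEVICE_PER_MIN  # assumption implied by "one-third of" list price


def batch_minutes(audio_min: float, rtf: float = REAL_TIME_FACTOR) -> float:
    """Wall-clock minutes to transcribe a finished recording."""
    return audio_min / rtf


def live_idle_fraction(rtf: float = REAL_TIME_FACTOR) -> float:
    """Share of time the core idles while keeping pace with a live stream."""
    return 1 - 1 / rtf


def monthly_costs(hours: float) -> tuple:
    """(on-device cost, cloud cost, savings) in USD for `hours` of audio."""
    minutes = hours * 60
    on_device, cloud = minutes * ON_DEVICE_PER_MIN, minutes * CLOUD_PER_MIN
    return on_device, cloud, cloud - on_device


print(batch_minutes(30), live_idle_fraction())
print([round(x) for x in monthly_costs(10_000)])
```

For 10k hours a month this gives about $1,800 on-device versus $5,400 at the implied cloud rate, roughly $3,600 saved each month.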

What Happens When the Hardware Gets Slower or New Languages Appear?

Mistral ships a 12 M-parameter “lite” fallback that autoloads on Cortex-A53 clusters, adding 40 ms yet staying under the 250 ms usability ceiling. Community tokenizers for Swahili or Vietnamese plug in without recompiling the engine and reuse the same quantization pipeline. Quarterly fine-tuning patches are already scheduled; version 2.1 will bundle adaptive noise suppression that adds only 6 MB of ROM and under 30 ms of latency.
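
The article says the lite model autoloads on Cortex-A53 clusters but not how the choice is made. One plausible rule, sketched here, is to pick the largest variant whose estimated latency on the detected core type fits the 250 ms ceiling. The variant names, the latency table and `pick_variant` are hypothetical; the 230 ms lite figure reads "adding 40 ms" as 190 ms + 40 ms, and the full model's A53 latency is invented.

```python
from dataclasses import dataclass
from typing import Dict, List

LATENCY_CEILING_MS = 250  # usability ceiling quoted in the article


@dataclass(frozen=True)
class Variant:
    name: str
    params_m: int
    latency_ms: Dict[str, float]  # estimated latency per core type (hypothetical)


VARIANTS: List[Variant] = [
    # 190 ms on big cores is the article's P90; the A53 figure is invented.
    Variant("vmt2-full", 45, {"big": 190.0, "cortex-a53": 400.0}),
    # 230 ms = 190 ms + 40 ms, one reading of the lite fallback's overhead.
    Variant("vmt2-lite", 12, {"cortex-a53": 230.0}),
]


def pick_variant(core: str, ceiling_ms: float = LATENCY_CEILING_MS) -> Variant:
    """Largest model whose estimated latency on `core` fits under the ceiling."""
    fitting = [v for v in VARIANTS
               if v.latency_ms.get(core, float("inf")) <= ceiling_ms]
    if not fitting:
        raise RuntimeError(f"no variant meets {ceiling_ms} ms on {core!r}")
    return max(fitting, key=lambda v: v.params_m)


for core in ("big", "cortex-a53"):
    print(core, "->", pick_variant(core).name)
```

Keying the choice on a latency budget rather than a hard-coded CPU list means a new core type only needs a latency estimate, not a code change.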

Does Sub-200 ms Transcription Redraw the Competitive Map?

Cloud incumbents still beat VMT-2 on model capacity and accent coverage, but they cannot match its zero-round-trip privacy envelope. As EU regulators tighten AI Act audits, on-device inference shifts from nice-to-have to compliance requirement. Expect laptop makers to tout “offline captioning” as a 2026 back-to-school spec, and hearables to swap wake-word models for always-live transcription now that the power budget fits a 50 mAh battery.

In Other News

- BNB Chain Deploys ERC-8004 Standard for Trustless AI Agents on Mainnet

- Amazon Launches Alexa+ with AI-Powered Task Automation, Requires Prime Subscription

- Optimizing LLMs via Learnable Permutation Framework Boosts Inference Efficiency
