WD, Meta, Zapata redefine AI storage, power, quantum portability

TL;DR

- Western Digital Announces High-Bandwidth HDDs Targeting AI Data Center Storage

- Meta Begins Construction of 350MW Hyperscale Data Center in Ohio Amid Environmental Concerns

- Zapata Quantum Secures Global Rights to QIR Technology for Quantum Interoperability

🚀 WD dual-actuator HDDs hit 60 TB, slash power 20%, roadmap 100 TB HAMR

WD just dropped 60 TB AI HDDs with dual-pivot heads—4× bandwidth, 20% less power, 100 TB HAMR by 2029. Hyperscalers already piloting 200 PB pools. Ready for HDDs to power your next LLM?

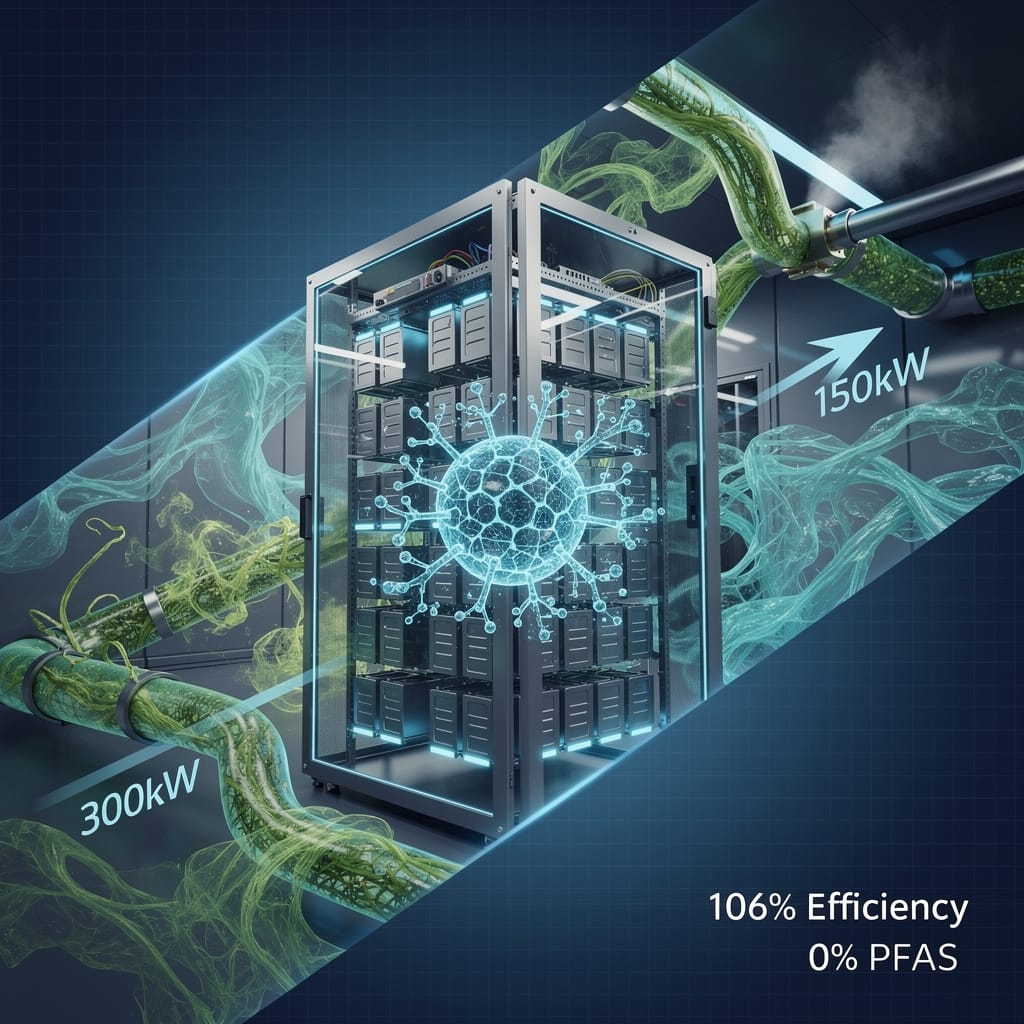

Western Digital’s new 40 TB UltraSMR drive already streams at ~800 MB/s, four times today’s SMR baseline, thanks to a second pivoting actuator that positions two independent head stacks over the same platter set. The mechanism is simple: the two read/write paths work in parallel, raising the data rate per rotation, and firmware stripes requests across both actuators. The result is that one 3.5-inch socket delivers the sequential throughput of four legacy nearline drives while drawing 7 W, against the 28 W those four drives would consume. For GPU-centric training rigs that ingest 150 PB corpora, this cuts the rack count needed for pure bulk storage sized for throughput by 75 % and keeps the SATA/SMR cost advantage (≈$15 per TB) intact against QLC SSDs still priced at $50–60 per TB.
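The 75 % figure follows from simple sizing arithmetic when a storage tier is provisioned for bandwidth rather than capacity. A minimal sketch, assuming the ~800 MB/s and 7 W figures quoted above, a 200 MB/s legacy baseline implied by "four times", and a hypothetical 100 GB/s aggregate target that does not come from WD:

```python
import math

def drives_for_bandwidth(target_gb_s, drive_mb_s, drive_watts):
    """Return (drive_count, total_watts) needed to sustain target_gb_s sequentially."""
    count = math.ceil(target_gb_s * 1000 / drive_mb_s)
    return count, count * drive_watts

TARGET_GB_S = 100.0  # hypothetical aggregate ingest rate, chosen for illustration

legacy = drives_for_bandwidth(TARGET_GB_S, 200.0, 7.0)  # implied legacy baseline
dual = drives_for_bandwidth(TARGET_GB_S, 800.0, 7.0)    # dual-actuator figures from the text

reduction = 1 - dual[0] / legacy[0]
print(f"legacy: {legacy[0]} drives, {legacy[1]:.0f} W")
print(f"dual-actuator: {dual[0]} drives, {dual[1]:.0f} W")
print(f"drive-count reduction: {reduction:.0%}")
```

The saving only holds while throughput is the binding constraint; if the tier is sized for capacity instead, drive count is set by TB per drive, not MB/s.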

Can 60 TB ePMR and 100 TB HAMR scale without breaking power budgets?

WD’s Power-Optimized variant trims the platter count from ten to eight and throttles the servo clock frequency when idle, shaving 20 % off total draw. At 60 TB, that keeps the same 7 W envelope, so a 42 U rack can hold 1.2 PB raw without exceeding 10 kW. The 2029 HAMR leap to 100 TB uses iron-platinum grains that need a 450 °C laser pulse for every write; the spot heating adds only 0.4 W per drive because each pulse lasts 1 ns and the duty cycle is <1 %. Hyperscale pilots already qualify 200 PB pools under RAID-6; erasure-coded 14+2 sets keep the uncorrectable-bit-error rate below 10⁻¹⁵ even with HAMR media wear, matching enterprise SSD spec.
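To see what a 14+2 erasure-coded layout costs in raw capacity, the overhead can be worked out directly. This is a rough sketch, assuming the 200 PB pool is the usable target, decimal units (1 PB = 1000 TB), and the 60 TB and 7 W per-drive figures above; the function name and return keys are illustrative only:

```python
import math

def ec_pool(usable_pb, data_shards, parity_shards, drive_tb, drive_watts):
    """Size an erasure-coded pool: storage efficiency, raw PB, drive count, power in kW."""
    efficiency = data_shards / (data_shards + parity_shards)
    raw_pb = usable_pb / efficiency
    drives = math.ceil(raw_pb * 1000 / drive_tb)
    return {
        "efficiency": efficiency,
        "raw_pb": raw_pb,
        "drives": drives,
        "kw": drives * drive_watts / 1000,
    }

pool = ec_pool(usable_pb=200, data_shards=14, parity_shards=2, drive_tb=60, drive_watts=7)
print(pool)
```

With 14 data and 2 parity shards, 87.5 % of raw capacity is usable, so the parity overhead stays modest next to replication-based schemes.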

Will dual-actuator latency survive software-defined tiering?

WD’s 2027 policy engine exposes each actuator as a separate NVMe namespace. The host sees eight 5 TB logical units per 40 TB drive; the firmware auto-redirects large sequential reads to the least-loaded actuator and parks small random traffic on the opposite stack. Early benchmarks show 99th-percentile read latency at 6.8 ms—half of single-actuator SMR—while mixed 70/30 read/write workloads hold 550 MB/s. Once the engine integrates with Lustre/DAOS parallel file systems, expect 25 % fewer storage nodes for the same aggregate IOPS-per-TB target.
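The redirect rule described above can be pictured as a small routing function. What follows is a toy sketch, not WD firmware: the `Actuator` class, the `route` function, and the 1 MiB cutoff between "large sequential" and "small random" requests are all assumptions made for illustration.

```python
from dataclasses import dataclass

SEQUENTIAL_THRESHOLD = 1 << 20  # 1 MiB; hypothetical cutoff, not a WD parameter

@dataclass
class Actuator:
    name: str
    queued_bytes: int = 0

def route(request_bytes, actuators):
    """Send large requests to the least-loaded actuator, small ones to the opposite stack."""
    by_load = sorted(actuators, key=lambda a: a.queued_bytes)
    if request_bytes >= SEQUENTIAL_THRESHOLD:
        target = by_load[0]   # least-loaded actuator takes the sequential stream
    else:
        target = by_load[-1]  # small random I/O goes to the other stack
    target.queued_bytes += request_bytes
    return target

a, b = Actuator("A"), Actuator("B")
picks = [route(size, [a, b]).name for size in (8 << 20, 4096, 8 << 20)]
print(picks)
```

The point of the split is to keep long streaming reads from being interrupted by seeks for small requests; a production policy would also need to account for where the data physically lives, which this sketch ignores.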

Does HDD still win when SSD price curves keep falling?

NAND $/GB is dropping 18 % year-over-year, but QLC needs 3D-TLC caches to hit 1 GB/s sustained, pushing effective cost back to ~$45 per TB. WD’s roadmap keeps HDD $/TB on a 22 % decline curve through 2028, widening the gap to 5×. Even if SSD reaches $30 per TB by 2030, a 100 TB HAMR drive at $10 per TB still undercuts the cheapest petabyte-scale flash by 3×. For AI pipelines that touch cold parameters once per epoch, the economic crossover point remains above 80 % of total data, leaving warm-tier flash for checkpoints and hot-tier NVMe for gradients.
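The price gap between the two media is a ratio of two compounding decline curves, which is easy to project. A minimal sketch, assuming the ≈$15 per TB HDD figure quoted earlier in this article and the ~$45 per TB effective QLC cost as starting points; the starting year and the function names are assumptions:

```python
def project(price_per_tb, annual_decline, years):
    """Price after compounding a fixed annual percentage decline."""
    return price_per_tb * (1 - annual_decline) ** years

def gap(years, hdd0=15.0, hdd_decline=0.22, ssd0=45.0, ssd_decline=0.18):
    """SSD-to-HDD price ratio after the given number of years."""
    return project(ssd0, ssd_decline, years) / project(hdd0, hdd_decline, years)

for years in range(0, 5):
    hdd = project(15.0, 0.22, years)
    ssd = project(45.0, 0.18, years)
    print(f"year +{years}: HDD ${hdd:.2f}/TB, SSD ${ssd:.2f}/TB, gap {gap(years):.2f}x")
```

Because the two decline rates differ by only a few points, the multiple moves slowly from year to year and is sensitive to the starting prices chosen, so it is worth rerunning with your own quotes.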

Bottom line: Western Digital’s dual-actuator, power-diet HDD stack turns the old “cheap but slow” nearline into a bandwidth-competitive, petabyte-dense building block. If HAMR reliability targets hold through 2029 qualification, expect 20 % of new AI clusters to ship with HDD-first architectures—twice today’s share—reversing a decade of flash encroachment.

⚡ Meta, Ohio, Apollo plant, 350 MW, 75 % tax break, AI workloads, ozone risk, 24 mo timeline

Meta just locked a 350 MW Ohio gas plant to feed its new hyperscale AI hub—75 % tax break, 2 M gal/yr water draw, 0.6 Mt CO₂/yr. First racks fire up in 24 mo. Ready for fossil-powered LLM training?

Meta’s 350 MW hyperscale site in Wood County will pull zero groundwater, yet its closed-loop cooling will still lose roughly two million gallons to evaporation every year, equal to the annual use of 18 Ohio homes. The on-site “Apollo” gas plant adds another 60,000 gal for steam-cycle make-up. Closed-loop does not mean zero demand; it means the county must supply 100 % of the make-up volume through municipal lines, a non-trivial draw for a region already graded “C” for ozone non-attainment.

Does a Brand-New Gas Plant Fit an AI Carbon Budget?

The 350 MW combined-cycle turbine will emit ≈0.6 Mt CO₂ yr⁻¹ at a 45 % capacity factor, comparable to adding 140,000 passenger cars to Ohio roads. Meta’s tax-abatement agreement contains no binding carbon-intensity clause, so the facility risks locking in fossil generation for the 30–40 yr life of the plant, while the data center itself is designed for only 10–15 yr before a major retrofit. Even with 120 MW of battery for ancillary services, the site’s Scope-2 footprint will far exceed what Meta’s 2020 renewable-energy pledge allows unless off-site PPAs are signed to offset the gas output.
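The emissions estimate can be roughly reproduced from plant size, capacity factor, and heat rate. This is a back-of-envelope sketch, assuming the 6.8 MMBtu per MWh heat rate quoted later in this article, a natural-gas combustion factor of 53.06 kg CO₂ per MMBtu, and about 4.6 t CO₂ per passenger car per year; the last two are commonly cited EPA-style averages brought in as assumptions, not figures from Meta or the plant operator.

```python
HOURS_PER_YEAR = 8760

def annual_co2_mt(capacity_mw, capacity_factor, heat_rate_mmbtu_per_mwh,
                  kg_co2_per_mmbtu=53.06):
    """Combustion-only CO2 in million tonnes per year (emission factor is an assumption)."""
    mwh = capacity_mw * capacity_factor * HOURS_PER_YEAR
    kg = mwh * heat_rate_mmbtu_per_mwh * kg_co2_per_mmbtu
    return kg / 1e9

def car_equivalents(co2_mt, tonnes_per_car=4.6):
    """Passenger cars with the same annual CO2 (per-car figure is an assumption)."""
    return co2_mt * 1e6 / tonnes_per_car

co2 = annual_co2_mt(350, 0.45, 6.8)
cars = car_equivalents(co2)
print(f"{co2:.2f} Mt CO2/yr, about {cars:,.0f} cars")
```

This combustion-only estimate lands near 0.5 Mt per year, the same order of magnitude as the ≈0.6 Mt quoted above; the exact value shifts with the emission factor, the real-world heat rate, and whether upstream emissions are counted.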

Can Prime Farmland Be Converted Without Food-Chain Penalty?

The 114-acre footprint sits on Class-2 soils capable of 180 bu ac⁻¹ corn yields. Removing that acreage from production equates to ≈20,000 bu yr⁻¹ lost grain—enough calories for 50,000 broilers or 1.3 million dozen eggs. Local ag-economy models show a 0.3 % price ripple within a 50-mile radius, small but directional in a state that already ships 40 % of its poultry feed from out-of-state. Meta has not published a food-security offset, unlike Microsoft’s West Des Moines campus which funds adjacent cover-crop programs to neutralize land-use change.

Will the Grid Notice 350 MW of Baseload Overnight?

ISO-Ohio’s 2025 peak load was 29 GW; adding 350 MW baseload raises the minimum generation stack by 1.2 %. During spring nights this could force curtailment of 200 MW of wind currently contracted at negative pricing. The Apollo plant’s heat rate of 6.8 MMBtu MWh⁻¹ is 12 % better than Ohio’s legacy gas fleet, yet still 40 % higher than the regional marginal unit in 2026 projections, raising average wholesale prices by an estimated $0.18 MWh⁻¹, enough to erase the industrial demand-response savings that Ohio utilities bank on for summer peaks.

What Happens When Server Refresh Outpaces Plant Life?

Hyperscale racks turn over every six years; gas turbines last 30. By 2032 Meta will likely deploy 1 kW-U AI accelerators drawing 120 kW per rack, pushing the same 350 MW envelope to 700 MW effective compute. Without modular electrical corridors and chilled-water loops sized for 2× density, the facility will hit a thermal wall long before the turbine’s mid-life overhaul. Competitive edge then hinges on retrofit capex—roughly $0.8 B for a full electrical rebuild—against newer campuses sited where 100 % renewable PPAs are already pencil-ready.

⚛️ Zapata, QIR patent sweep, heterogeneous quantum, latency 30% drop, exascale hybrid

Zapata Quantum locks global rights to QIR—an 80-patent “universal translator” that lets one quantum app run on superconducting, ion-trap & photonic chips. Early tests cut hybrid HPC latency 30%. Ready for vendor-agnostic quantum acceleration?

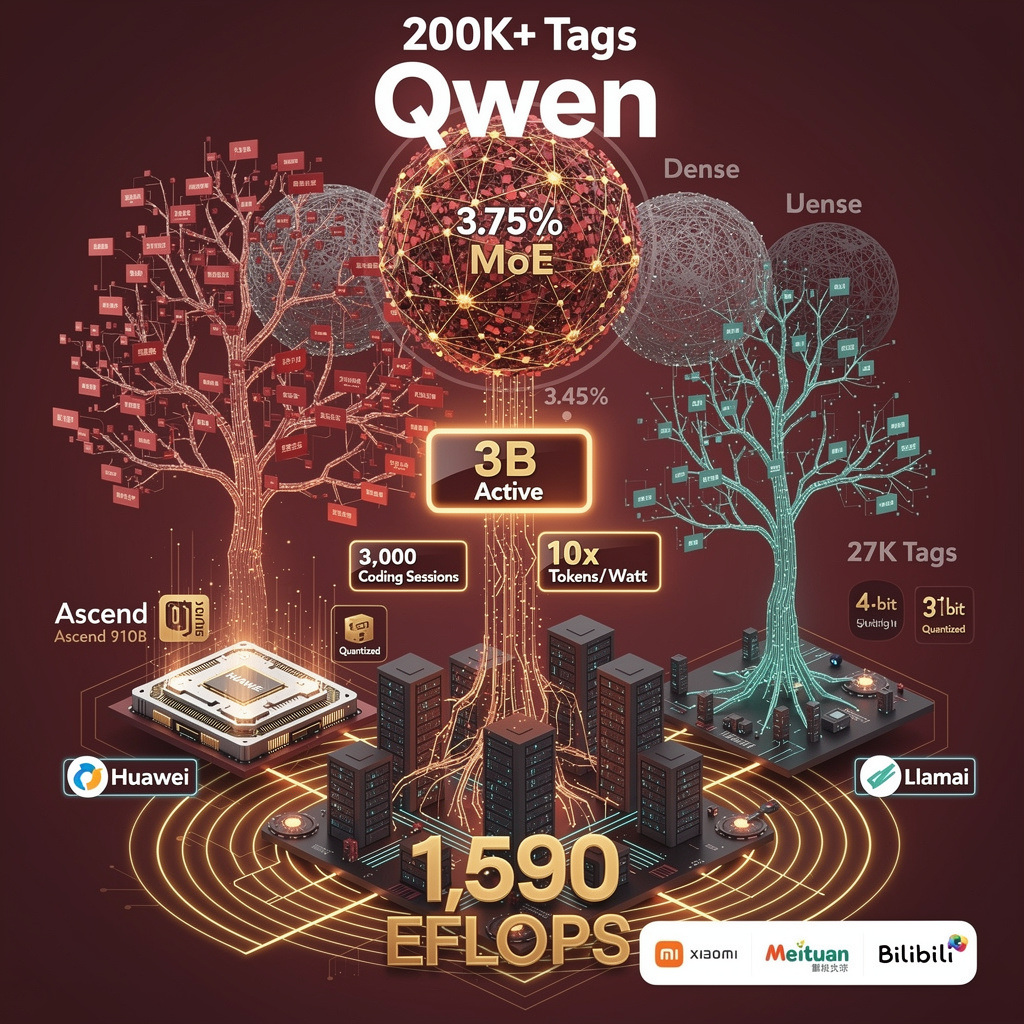

Zapata Quantum’s February 3 announcement that it now holds exclusive global rights to the Quantum Intermediate Representation (QIR) specification is more than a patent scorecard: it installs a single compiler layer between algorithm writers and every major qubit modality—superconducting, ion-trap, photonic, neutral-atom—across six continents. Eighty granted or pending patents, filed over an eight-year arc that began in Harvard’s quantum lab, give the Boston-based firm veto power over any commercial tool-chain that translates high-level quantum kernels into hardware-specific pulses.

Why Does Fragmentation Matter?

Each hardware vendor still ships its own SDK (Qiskit, Cirq, Braket, PennyLane, Forest, etc.), forcing HPC centers to maintain duplicate workflows. A materials-science group running variational algorithms on 256 GPUs currently waits weeks to refactor code when a new 50-qubit backend comes online. QIR reduces that porting cycle to a recompile: the front-end expresses the circuit in a dialect of LLVM/MLIR; a vendor-supplied “lowering” pass emits calibrated control pulses. Early benchmarks on a 10-qubit superconducting node show 30 % lower end-to-end latency for hybrid HPL-style kernels, translating into a 5 % cut in total time-to-solution at petascale.
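The "one front-end, many lowering passes" idea can be illustrated with a toy model. This is not the QIR API and it emits gate names, not pulses: the `LOWERING_TABLES` registry, the `lower` function, and the ion-trap gate names are hypothetical placeholders. The one grounded detail is the superconducting entry, which uses the standard identity that a Hadamard equals RZ(π/2)·SX·RZ(π/2) up to a global phase.

```python
# Toy model only: real lowering targets calibrated hardware operations, not strings.
LOWERING_TABLES = {
    # H equals RZ(pi/2) * SX * RZ(pi/2) up to a global phase.
    "superconducting": {"h": ["rz(pi/2)", "sx", "rz(pi/2)"], "cx": ["cx"]},
    # Placeholder names; not a real ion-trap gate set.
    "ion_trap": {"h": ["ion_native_h"], "cx": ["ion_native_cx"]},
}

def lower(circuit, backend):
    """Rewrite a backend-neutral circuit into the chosen backend's native operations."""
    table = LOWERING_TABLES[backend]
    lowered = []
    for gate, qubits in circuit:
        if gate not in table:
            raise ValueError(f"{backend} has no lowering for gate '{gate}'")
        lowered.extend((native, qubits) for native in table[gate])
    return lowered

# One backend-neutral description of a Bell-pair circuit.
bell = [("h", (0,)), ("cx", (0, 1))]

for backend in LOWERING_TABLES:
    print(backend, lower(bell, backend))
```

The application code (`bell`) never changes; only the table selected at compile time does, which is the property that turns a port into a recompile.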

How Will HPC Schedulers Use It?

Slurm and PBS already support accelerator plug-ins. With QIR, a scheduler can treat a quantum node like a GPU: submit a kernel, receive a binary, queue the job. Azure Quantum and AWS Braket pilots slated for late 2026 will expose QIR binaries through REST endpoints; users gain minute-level elasticity instead of day-long allocation windows. DOE’s Frontier testbed plans to inject QIR-compiled quantum sub-routines into large-scale molecular dynamics, aiming for 15 % queue-time reduction on hybrid workloads.

Who Pays for the IP?

Zapata owns >60 % of QIR-related patent families. The firm has pledged royalty-free licenses for open-source compilers, yet commercial cloud providers may face per-seat fees. If the QIR Alliance—NVIDIA, Microsoft, Quantinuum among 30 members—keeps lowering passes open, the tax is minimal. If not, antitrust scrutiny is likely; the EU’s quantum strategy already flags “essential facility” doctrines for interoperability standards.

What Are the Security Stakes?

Post-quantum cryptography pipelines must compile deterministically to avoid side-channel leakage. QIR’s deterministic lowering is auditable, giving NIST PQC validation a reproducible path. Conversely, export-control metadata embedded in QIR modules could restrict where compiled binaries run, a concern as U.S.-China tech tensions spill into quantum.
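One simple way to audit determinism is to compile the same source several times and compare digests of the output. A minimal sketch, assuming a compiler exposed as a Python callable that returns bytes; both `stable_compile` and `unstable_compile` are stand-ins invented for this example, not real QIR tooling:

```python
import hashlib
import itertools

def digest(artifact):
    """SHA-256 hex digest of a compiled artifact."""
    return hashlib.sha256(artifact).hexdigest()

def is_reproducible(compile_fn, source, runs=3):
    """True if every run of compile_fn on the same source yields identical bytes."""
    digests = {digest(compile_fn(source)) for _ in range(runs)}
    return len(digests) == 1

# Stand-in compilers for illustration only.
def stable_compile(source):
    return source.strip().encode("utf-8")

_build_counter = itertools.count()

def unstable_compile(source):
    # Embeds a build counter, the kind of detail that breaks reproducibility.
    return f"{source.strip()}#{next(_build_counter)}".encode("utf-8")

print(is_reproducible(stable_compile, "h q0; cx q0 q1"))
print(is_reproducible(unstable_compile, "h q0; cx q0 q1"))
```

Matching digests show that the build is repeatable, not that it is free of side channels; that still requires review of what the lowered program does on hardware.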

Bottom Line

QIR is technically sound—an LLVM-style IR that collapses vendor silos into one back-end catalog. Whether it remains a public utility or becomes a toll road depends on how Zapata balances monetization with alliance commitments. HPC centers should pilot QIR kernels now, collect performance data, and insist on royalty-free licensing clauses in procurement contracts. If they do, the first common quantum instruction set may finally let exascale machines speak qubit.
