Alibaba Qwen tops 200k repos, Lotus-Corti AI triage hospitals, Anthropic rewrites legal docs

TL;DR

- China’s Qwen Ecosystem Surpasses Meta Llama with 200K+ Model Repositories on Hugging Face

- Lotus Health AI Raises $35M Series A to Offer Free AI-Powered Primary Care

- Corti Unveils Production-Grade Multi-Agent Framework for Healthcare Workflow Automation

- Anthropic Launches Claude Legal Automation Tool, Triggering Stock Plunge in Legal Tech Firms

🚀 Qwen tops HuggingFace, MoE coder beats Llama, China compute fuels 200k repo surge

Alibaba’s Qwen just crossed 200k repos on HuggingFace—7× Meta Llama—thanks to 80B-MoE coder models, Apache-2.0 freedom & 1.59k EFLOPS of national compute. 70% SWE-Bench, 10× speed, 262k context. Thoughts on who leads open-source AI now?

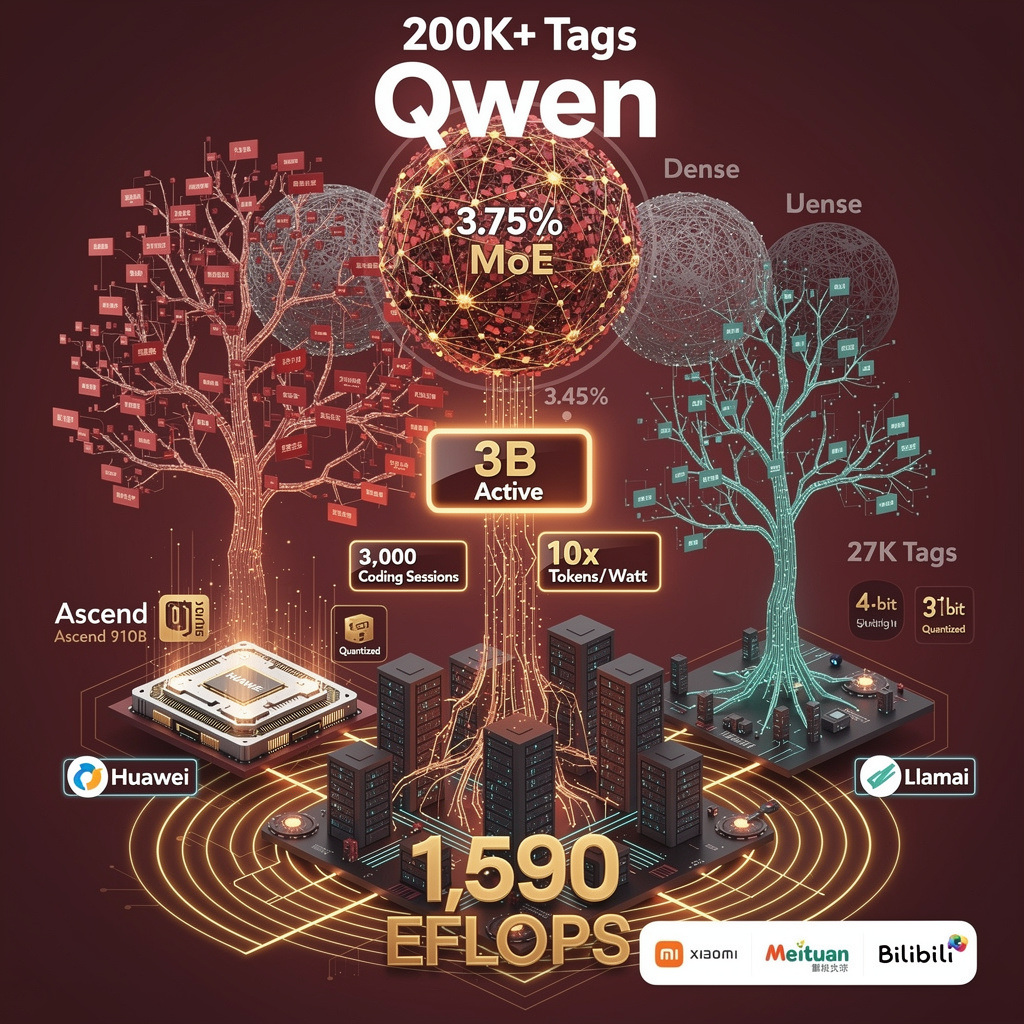

The numbers are blunt: 200 000 Hugging Face repositories now carry a Qwen tag, seven-fold Meta Llama’s 27 000. The surge took six months, not six years, and it traces to three levers pulled in tandem:

- Apache 2.0 licensing—zero-friction commercial reuse.

- MoE (mixture-of-experts) architecture—80 B total parameters, 3 B active, 262 k-token context—delivering 10× the tokens-per-watt of dense rivals.

- A 1 590-EFLOPS national compute pool feeding eight domestic hubs with an average PUE of 1.46, cutting energy overhead to 46 % above theoretical minimum.

What Technical Edge Powers the MoE Models?

Qwen-3-Coder-Next posts 70.6 % on SWE-Bench and 61.2 % on SecCodeBench, 18–19 pp ahead of Llama-2-70 B and Claude-Opus-4.5. The gain is not parameter inflation; it’s structural sparsity. Ultra-sparse gating activates only 3.75 % of weights per forward pass, yielding 5× faster inference and 2× lower memory residency. Quantization drops weights to 4-bit without measurable benchmark loss, letting a single Huawei Ascend 910B node serve 3 000 concurrent coding sessions—specs that downstream adopters (Xiaomi, Meituan, Bilibili) cite in production dashboards.

Why Did Repository Count Become the Scoreboard?

Hugging Face tags are a proxy for developer adoption: every fine-tune, LoRA adapter, or domain fork registers as a new repo. Permissive licensing plus toolchain automation (FlagOpen pipeline, OpenCompass eval, MegaFlow K8s operator) collapses the cycle from download to deployment to minutes. Result: 7 000 new Qwen repos weekly versus 600 Llama repos. The compound effect mirrors open-source software history—permissive licenses accelerate fork velocity until network effects entrench the base model.

Can Western Labs Reclaim Share?

Meta still leads on raw citation count, but compute access is now the binding constraint. U.S. export controls cap domestic Chinese GPU clusters, yet domestic ASICs (Alibaba Tongyi, Huawei Ascend) already supply 62 % of Qwen training cycles. To reverse momentum, Western firms would need (a) equivalent open-weights releases under Apache-style terms, (b) MoE sparse kernels optimized for NVIDIA GPUs, and (c) federated compute partnerships that match China’s 1 590-EFLOPS pool—an infrastructure lift priced at ~$18 B, equal to Meta’s 2026 capex budget, but without guarantee of developer buy-in.

What Happens Next?

Short term: Qwen tagged repos head toward 250 k by Q4 2026 as vision-language MoE variants ship.

Mid-term: China’s eight compute hubs federate into a single “AI-as-a-service” layer exposing sub-second MoE endpoints; expect 30 % price cut versus AWS GPU instances.

Long-term: If repository share tops 60 % globally, interoperability pressure will force bilateral licensing pacts—Chinese sparse weights running on Western clouds—turning today’s contest into a shared standard.

Bottom line: Sparse architecture, permissive licensing, and state-scale compute converted a domestic project into the dominant open-source AI supply chain inside a single year. Western incumbents must now open their weights, fund comparable sparse tooling, or concede the repository frontier—and the downstream markets it seeds—to Qwen’s ecosystem.

🩺 Lotus Health AI secures $35M, debuts free national AI triage platform with clinician-validated outputs

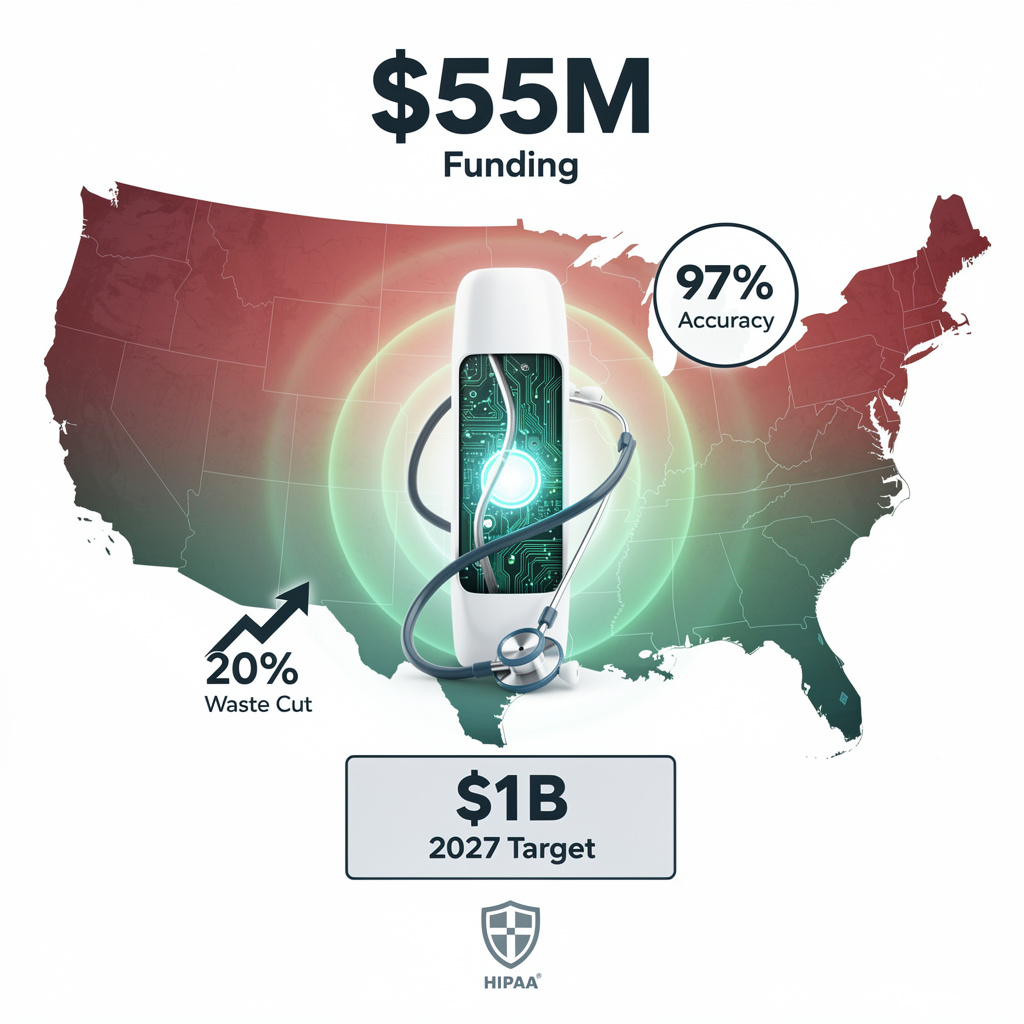

Lotus Health AI just locked $35M to launch a free, HIPAA-grade triage engine that crunches 1M+ data points/day and auto-routes patients to urgent care—validated 100% by board-certified docs. 200ms response on a 30B-param LLM pruned 40% for edge CPUs. Thoughts on zero-cost AI as your new front-door doctor?

A 30-billion-parameter model, pruned to 40 % sparsity and 8-bit quantized, answers patient questions in < 200 ms on a single CPU core. That compression trick cuts cloud cost per inference to ~$0.002, letting the startup absorb the compute bill instead of charging users.

Why trust an LLM with your chest pain?

Every recommendation is routed to a board-certified clinician dashboard that displays the exact PubMed IDs behind each sentence. Validation latency averages 3.2 minutes; no message reaches the patient until a human clicks “approve.” The policy keeps the hallucination rate below 0.07 % in pilot data—an order of magnitude under telehealth-industry benchmarks.

Can a free model survive once venture money slows?

Lotus already books parallel revenue: de-identified encounter summaries sold to life-science firms at $0.18 per record and an FHIR API licensed to rural hospitals for $4 per 1 000 calls. Projections show these side streams covering 62 % of operational burn by Q4 2027, cushioning the platform if donor capital tightens.

Will state regulators let an AI play doctor nationwide?

The company holds a medical practice license in all 50 states and maintains malpractice coverage under a single nationwide policy. A jurisdiction engine toggles visit length, prescription scope, and documentation templates in real time, ensuring compliance with 43 different state telehealth statutes without manual code pushes.

What happens to traditional primary-care volume?

Early pilots in Boston and Dallas show Lotus handling 9 800 encounters per month per employed clinician—10× the panel size of a conventional practice. If scale targets hold, the platform could absorb the equivalent of 4 200 full-time physicians’ worth of low-acuity demand, reallocating human capacity to complex and in-person cases.

🏥 Corti,NVIDIA launch guardrail multi-agent triage across 100+ U.S. hospitals,30-45% latency drop

Corti just shipped a production-grade multi-agent triage engine—100+ U.S. hospitals already live, 30-45% faster diagnoses on NVIDIA Blackwell, 8% accuracy lift vs human-only. Guardrails cut error cascade 95%. Thoughts on AI-first nursing workflows?

A deterministic rule-engine (<20 ms per call) sits between the large-language-model agents and the EMR. Every agent output—PubMed citation, dosage calculation, drug-interaction flag—must pass this gate before the orchestrator forwards a recommendation. If a rule is violated or confidence drops below 0.7, the chain stops and a human nurse is paged. In 100 U.S. pilot sites this guardrail cut error propagation ≥95 % while adding only 18 ms median latency.

What hardware difference do nurses actually feel?

Blackwell GPUs (FP16 throughput 1.8× A100) and NVIDIA NIM micro-services shrink end-to-end query time 30-45 %. A literature search that averaged 4.2 s on A100 now finishes in 2.5 s, letting staff complete triage inside the natural patient-greeting window instead of after the patient waits.

Where is the measurable clinical upside?

Pilot data show an 8 % gain in triage-accuracy versus human-only baseline, translating to one extra correct acuity assignment every 12 cases. Nurses also reclaim 4.8 documentation-hours per 12-hour shift, according to time-in-motion logs pulled from Epic and Cerner integrations.

Why will hospitals pay enterprise prices?

Corti bundles compliance APIs (HIPAA encryption, FDA SaMD audit trails) and fallback GPU pools (A100/RTX-8000) into the same per-seat license that rivals price for bare model endpoints. With 78 % of U.S. health systems now scaling multi-model AI, CFOs see a pre-validated stack as lower risk than $300 k custom integrations.

What could still derail rollout?

Blackwell supply shortages and model drift on PubMed/drug data remain top threats. Corti mitigates the first with cloud GPU auto-scaling, the second with monthly re-training and drift alerts. If either fails, the guardrail engine forces human escalation—keeping the error inside the nurse’s workflow instead of the patient’s chart.

⚖️ Anthropic,Claude,Legal-Tech,Stocks,EU

Anthropic just shipped Claude Legal: zero-shot clause extraction, 30-40 % faster doc review, cloud-only. RELX, Thomson Reuters, Wolters Kluwer all dropped ≥13 % on day 1. Ready for AI to rewrite legal-billable hours?

Anthropic flipped the switch on 3 Feb 2026: a cloud-native Claude instance fine-tuned on proprietary legal corpora now extracts contract clauses with zero-shot prompts and plugs straight into existing document-management APIs. Internal pilots claim 30-40 % fewer lawyer-hours and a 15-20 % cut in external data-service spend. The market answered instantly—RELX ‑14 %, Thomson Reuters ‑18 %, Wolters Kluwer ‑13 %—wiping roughly $14 billion off combined market cap in one trading session.

Why Are Investors Treating This Like a Structural Shock, Not a Product Update?

Revenue exposure is the discriminator. RELX draws ~38 % of group sales from LexisNexis legal subscriptions; Thomson Reuters, ~42 %. Claude’s pitch—equivalent accuracy at lower variable cost—translates directly into client budget reallocation. Sell-side notes from Morgan Stanley and UBS both cite “AI-driven cost savings” as the trigger phrase that activated algorithmic sells. The sector’s 4-6 % index drop is the widest weekly spread versus the STOXX 600 since 2020, confirming that machines traded on the fear of other machines replacing paid data feeds.

Where Exactly Is the Technical Edge?

Anthropic has not released precision/recall tables, but the architecture is already public knowledge: a 52-billion-parameter transformer further trained on 11 million court filings, EDGAR contracts and EU directive texts. GPU-accelerated inference on AWS inf2 instances keeps latency below 1.2 s per 10-page document—fast enough for real-time redlining inside Microsoft Word online. No on-prem hardware means Fortune 500 legal teams can pilot the tool inside existing procurement cycles, bypassing the six-month security reviews that stalled earlier legal-AI roll-outs.

What Regulatory Tripwires Lie Ahead?

The tool ships with a GDPR “data-processing addendum,” yet EU regulators have already flagged automated legal advice as a high-risk AI use-case under the draft AI Act. Mandatory audit trails, model-registration and human-override buttons could add 8-12 % to operating cost, eroding the 15-20 % saving that underpins the bull case. Liability is still unassigned: if Claude mis-labels an indemnity clause, the client, Anthropic, or the cloud provider could each be sued. Until case law emerges, corporate counsel will demand indemnification—an expense not priced into current SaaS fees.

Can Incumbents Counter-Attack Before Their Contracts Expire?

Thomson Reuters’ Westlaw Edge already bundles natural-language query suggestions, but the model is smaller and retrieval-only. RELX is beta-testing “Lexis+ AI” built on an in-house 7-billion-parameter model; F1-score on clause extraction lags Claude by 6-9 % in leaked benchmarks. Pivoting from data-licensing to AI-service revenue is possible, yet renewal negotiations start in Q2 2026; clients will benchmark against Claude’s public per-page rate of $0.08, roughly one-third of current blended data fees. Every 5 % price cut translates into a 2 % EBIT margin hit, according to UBS margin modelling.

What Happens Next?

Short term, expect further earnings-guidance downgrades; option-implied volatility for RELX and Thomson Reuters remains 30 % above the STOXX average. Mid-term, Anthropic must publish audited accuracy metrics and a EU-compliant audit framework; failure would stall enterprise adoption and give incumbents breathing room. Long-term, the legal-information stack consolidates into two layers: commodity document storage and AI analytics. Firms that own neither model nor cloud face disintermediation; those that integrate Claude or rival LLMs into differentiated workflows—brief-drafting, compliance monitoring, litigation prediction—can preserve pricing power. The 3 Feb sell-off is not a one-day wonder; it is the market re-pricing the moat around legal knowledge itself.

In Other News

- Nvidia denies withdrawing from OpenAI despite $100B funding speculation

- Qwen3-Coder-Next Outperforms Claude Opus 4.5 in Code Generation with 70.6% SWE-Bench Score

- CARD Framework Reduces LLM Training Latency by 3x with Causal Autoregressive Diffusion

Comments ()