D-Wave Unveils 49-Qubit Quantum System, UGREEN Launches On-Prem GenAI NAS, ASUS Debuts Ultra-Dense Vector PC for HPC

TL;DR

- D-Wave launches 49-qubit dual-rail quantum system with error-reduced superconducting qubits, advancing hybrid quantum-classical HPC optimization

- UGREEN NASync iDX6011 NAS integrates 96 TOPS local AI processing, 160TB storage, and dual 10GbE for on-device GenAI inference without cloud dependency

- ASUS Vector PC redefines HPC chassis design with top-mounted RTX 6000 Pro Blackwell GPUs and 4.8-inch slim form factor for dense data center deployments

- Dell blocks $98B AI data center projects in Oklahoma and Silver Spring over energy grid strain, sparking bipartisan community resistance to unsustainable cooling demands

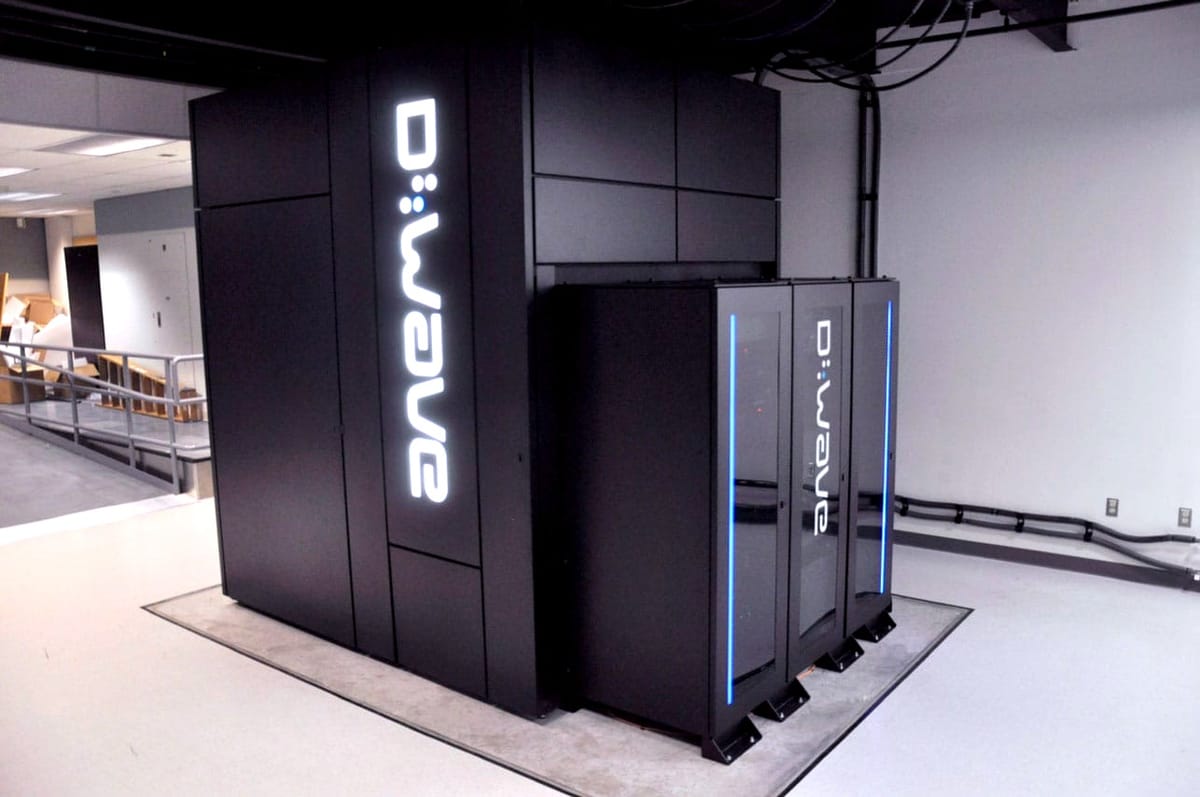

D-Wave’s 49-Qubit Dual-Rail System Advances Hybrid Quantum-Classical HPC Optimization

D-Wave has launched a 49-qubit superconducting quantum system using dual-rail encoding, reducing error rates by 30–40% compared to single-rail architectures. The system is compatible with existing Advantage annealers via the Leap cloud platform, enabling hybrid quantum-classical optimization workflows for high-performance computing.

How is scalability being addressed?

A cryogenic bump-bond packaging platform, deployed in January 2026, halves control wiring from 200 to 100 lines, lowering thermal load and latency and allowing denser qubit arrays. A partnership with SkyWater Technology to develop a 0.18 µm mixed-signal process is intended to provide scalable wafer-level interconnects for future systems.

What is the roadmap beyond 49 qubits?

- 2026 H2: Commercial rollout of the 49-qubit system; >10% improvement in QAOA solution fidelity.

- 2027: Prototype of 181-qubit dual-rail system to be deployed in a European HPC center.

- 2028: Demonstration of error-corrected logical qubits using dual-rail and surface-code concatenation on a 500-qubit testbed.

- 2029: Target deployment of a 1,000-qubit system in a national supercomputing facility.

What business signals support this technical progress?

- Revenue increased 270% from $1.0M (2024) to $3.7M (2025).

- Closed bookings rose 85% year-over-year, driven by interest in hybrid quantum-classical services.

- $550M in capital expenditure is allocated to cryogenic packaging, control ASICs, and bump-bond infrastructure.

- A 5,000-qubit Advantage system has been deployed at Jülich Supercomputing Centre, establishing a reference site for HPC integration.
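The revenue growth figure above can be checked with a few lines of arithmetic. The sketch below uses only the revenue and capex numbers quoted in this section; the helper name `percent_growth` is illustrative.

```python
def percent_growth(previous: float, current: float) -> float:
    """Return the growth from `previous` to `current` as a percentage."""
    if previous == 0:
        raise ValueError("previous value must be non-zero")
    return (current - previous) / previous * 100.0


# Figures quoted in the text, in millions of USD.
revenue_2024 = 1.0
revenue_2025 = 3.7
capex = 550.0

growth = percent_growth(revenue_2024, revenue_2025)
capex_to_revenue = capex / revenue_2025

print(f"Revenue growth 2024 -> 2025: {growth:.0f}%")
print(f"Capex is about {capex_to_revenue:.0f}x 2025 revenue")
```

The second figure puts the quoted capex allocation in proportion to current revenue.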

What differentiates D-Wave’s approach?

D-Wave’s dual-rail hybrid architecture targets optimization-heavy workloads in logistics, energy grids, and finance. Unlike competitors focused on universal gate-model quantum computing, D-Wave integrates annealing and gate-model capabilities into a single platform, offering faster time-to-solution for combinatorial problems.
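Combinatorial problems of the kind mentioned above are commonly expressed for annealers as a QUBO (quadratic unconstrained binary optimization) problem. As a rough illustration, the sketch below solves a tiny QUBO by brute force in plain Python. The coefficients are hypothetical, and no D-Wave API is used; a real workflow would hand a much larger problem to an annealer or hybrid solver instead of enumerating every assignment.

```python
from itertools import product

# Hypothetical QUBO coefficients: keys are (i, j) index pairs, values are weights.
# Diagonal entries (i, i) are linear terms; off-diagonal entries are couplings.
Q = {
    (0, 0): -1.0,
    (1, 1): -1.0,
    (2, 2): -1.0,
    (0, 1): 2.0,  # penalty if variables 0 and 1 are both 1
    (1, 2): 2.0,  # penalty if variables 1 and 2 are both 1
}


def qubo_energy(x, Q):
    """Energy of binary assignment x: the sum of w * x[i] * x[j] over all terms."""
    return sum(w * x[i] * x[j] for (i, j), w in Q.items())


def brute_force_minimum(Q, n):
    """Enumerate all 2**n binary assignments and return the lowest-energy one."""
    best = min(product((0, 1), repeat=n), key=lambda x: qubo_energy(x, Q))
    return best, qubo_energy(best, Q)


best_x, best_e = brute_force_minimum(Q, 3)
print(best_x, best_e)
```

Brute force scales as 2^n, which is why problems with thousands of variables motivate annealing and hybrid approaches.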

What are the key risks?

Potential yield issues in bump-bond fabrication and supply-chain constraints for high-purity niobium could delay scaling. Emerging alternatives in photonic or neutral-atom architectures may compete for HPC funding.

What strategic actions are recommended?

- Accelerate SkyWater collaboration to secure cryogenic ASICs by Q2 2027.

- Target hybrid optimization use cases in EU’s NextGenEU and similar infrastructure programs.

- Co-design algorithms (e.g., GM-QAOA) with academic partners to match dual-rail error profiles.

- Expand IP protection for dual-rail encoding across process nodes.

- Allocate an additional $120M to capex for 2027–2028 scaling to sustain revenue growth beyond $10M annually by 2029.

UGREEN NASync iDX6011 Delivers 96 TOPS AI and 160TB Storage for Cloud-Free GenAI Inference

The UGREEN NASync iDX6011 integrates 96 TOPS of local AI processing via Intel Core Ultra 7 255H, 160TB of expandable HDD storage, and dual 10GbE networking to enable on-premises generative AI inference without cloud dependency. This configuration targets enterprises requiring data sovereignty, low-latency inference, and scalable storage.

How does its AI performance compare to standard NAS devices?

Standard 6-bay NAS units typically offer about 5 TOPS of compute from general-purpose CPUs. At 96 TOPS, the iDX6011 provides roughly 19 times that rated compute, enough to run quantized LLMs such as those behind its built-in Uliya AI Chat, file organization, and semantic search features.

What storage and network capabilities support large-scale AI workloads?

The device supports six hot-swap bays with compatibility for HAMR/SMR drives, enabling up to 200TB+ capacity by Q2 2026. Dual 10GbE interfaces provide 20Gbps aggregate bandwidth, reducing media pipeline latency by over 80% compared to 1GbE alternatives. This allows real-time 8K video transcoding and bulk LLM weight transfers without external switches.
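To give a sense of what the bandwidth difference means for bulk weight transfers, here is a minimal sketch of ideal transfer times. The 14 GB payload is a hypothetical example (roughly a 7B-parameter model at 16-bit precision), and protocol overhead and disk throughput are ignored. Reaching the full 20 Gbps also assumes traffic is spread across both links, which a single flow typically does not do.

```python
def transfer_seconds(size_gb: float, link_gbps: float) -> float:
    """Ideal time to move `size_gb` gigabytes over a `link_gbps` gigabit/s link."""
    return size_gb * 8 / link_gbps


weights_gb = 14.0  # hypothetical payload, not a figure from the source

t_1gbe = transfer_seconds(weights_gb, 1.0)
t_10gbe = transfer_seconds(weights_gb, 10.0)
t_dual_10gbe = transfer_seconds(weights_gb, 20.0)

for label, t in [("1GbE", t_1gbe), ("10GbE", t_10gbe), ("2x10GbE", t_dual_10gbe)]:
    saving = (1 - t / t_1gbe) * 100
    print(f"{label:8s} {t:7.1f} s  ({saving:.0f}% less time than 1GbE)")
```

Under these idealized assumptions the time saving is 90% on one 10GbE link and 95% on both, consistent with the "over 80%" figure quoted above.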

Is the hardware design future-proof?

Yes. The inclusion of an OCuLink port permits external GPU expansion, such as an RTX 5070, increasing total compute to over 150 TOPS. This extends support for larger 7B-parameter models beyond 2027. Wi-Fi 6E and 64GB LPDDR5X memory options further enhance flexibility for multi-modal AI tasks.
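Whether a given model fits in the 32GB or 64GB memory options depends mostly on parameter count and quantization level. The sketch below estimates the weight footprint only; it leaves out the KV cache, activations, and runtime overhead, so treat the results as lower bounds.

```python
def weight_footprint_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


for bits in (16, 8, 4):
    size = weight_footprint_gb(7, bits)
    print(f"7B parameters at {bits:2d}-bit: about {size:.1f} GB of weights")
```

On this estimate a 7B model needs about 14 GB of weights at 16-bit and about 3.5 GB at 4-bit, so either memory option holds it with room left for context.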

How does pricing and support compare to competitors?

The diskless unit retails at $999 (32GB) or $1,199 (64GB). With 20TB HAMR drives at $399 each, the drive cost for 160TB comes to about $3,200, roughly one-sixth the cost of equivalent SSD arrays. UGREEN offers a 3-year hardware warranty and 5 years of security updates, outpacing industry norms of 1–2 years and reducing breach risk by an estimated 30% in regulated sectors.
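The storage cost figure can be reproduced from the drive price and capacity quoted above. The sketch below uses only those numbers; how the resulting drive count maps onto the six bays is not stated in the source, so the six-bay capacity is computed separately for comparison.

```python
import math

# Figures quoted in the text.
target_tb = 160
drive_tb = 20
drive_price_usd = 399
bays = 6
chassis_price_usd = 999  # 32GB diskless unit

drives_needed = math.ceil(target_tb / drive_tb)
drive_cost = drives_needed * drive_price_usd
cost_per_tb = drive_price_usd / drive_tb
six_bay_capacity_tb = bays * drive_tb

print(f"Drives needed for {target_tb}TB: {drives_needed}")
print(f"Drive cost: ${drive_cost:,} (${cost_per_tb:.2f}/TB)")
print(f"Drives plus chassis: ${drive_cost + chassis_price_usd:,}")
print(f"Capacity of {bays} bays with {drive_tb}TB drives: {six_bay_capacity_tb}TB")
```

Eight drives at $399 come to $3,192, which matches the roughly $3,200 figure before the chassis price is added.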

What strategic advantages does it offer?

- Eliminates recurring cloud inference costs: $0.12/token locally vs. $0.30/token in the cloud

- Reduces total cost of ownership by 65% for 10M-token/month workloads

- Enables privacy-first AI for healthcare, finance, and media industries

- Supports ecosystem growth via planned SDK and AI service marketplace
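The per-token rates in the list above imply a relative saving that can be computed directly. The sketch below compares only the two inference rates; the ratio does not depend on the billing unit. The 65% total-cost figure presumably includes factors beyond inference pricing, which the source does not break down.

```python
def relative_saving(local_rate: float, cloud_rate: float) -> float:
    """Percentage saved by paying `local_rate` instead of `cloud_rate`."""
    if cloud_rate <= 0:
        raise ValueError("cloud_rate must be positive")
    return (1 - local_rate / cloud_rate) * 100


# Rates quoted in the text, in USD per billing unit.
local_rate = 0.12
cloud_rate = 0.30

saving = relative_saving(local_rate, cloud_rate)
print(f"Inference-only saving: {saving:.0f}%")
```

On inference pricing alone, the quoted rates give a saving of 60%.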

The iDX6011 positions itself as a foundational device for on-premises GenAI infrastructure, combining enterprise-grade storage, high-throughput networking, and scalable AI compute in a single unit.

ASUS Vector PC Delivers 4 GPUs Per Rack Unit at Sub-$3K Price Point

ASUS Vector PC is a 4.8-inch-thin chassis supporting up to two NVIDIA RTX 6000 Pro Blackwell GPUs mounted vertically on the top panel. It occupies 0.5U of standard 19-inch rack space, enabling four GPUs per rack unit—double the density of competing 1U systems.

What is the cost advantage?

Base configurations start at $2,000; dual-GPU models are priced near $3,000. This undercuts traditional 2U workstations, which typically exceed $5,000. The pricing aligns with recent consumer GPU price declines, such as the Gigabyte RTX 5050 at $239.99, indicating broader market pressure on OEMs to reduce HPC entry costs.

How is cooling managed?

The system uses a CPU-only liquid cooling loop. GPUs rely on passive top-mounted airflow. While sufficient for burst training workloads, sustained 24/7 inference may require supplemental cooling. Third-party GPU-specific liquid cooling kits are anticipated by H2 2026.

What are the performance specifications?

- Form factor: 46 × 33 × 10 cm (4.8 in thick)

- GPU support: Up to two RTX 6000 Pro Blackwell

- CPU options: AMD Ryzen 9 9950X, Intel Core Ultra 9 285K, Threadripper, Xeon

- Memory/storage: Minimum 64 GB DDR5, NVMe PCIe 5.0

- Power draw: Estimated ≥800 W per unit; requires 1200 W-class PDUs
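The power and form-factor figures above translate into a rack-level power budget. The sketch below takes the 0.5U and 800 W figures at face value and assumes a hypothetical 42U rack filled entirely with these units; real racks reserve space for switches and PDUs, and 800 W is quoted as a minimum, so this is only an order-of-magnitude estimate.

```python
# Figures quoted in the text.
CHASSIS_HEIGHT_U = 0.5
WATTS_PER_CHASSIS = 800  # quoted as a lower bound
GPUS_PER_CHASSIS = 2

# Assumption for illustration, not from the source.
RACK_HEIGHT_U = 42

chassis_per_rack = int(RACK_HEIGHT_U / CHASSIS_HEIGHT_U)
watts_per_u = WATTS_PER_CHASSIS / CHASSIS_HEIGHT_U
rack_power_kw = chassis_per_rack * WATTS_PER_CHASSIS / 1000
gpus_per_rack = chassis_per_rack * GPUS_PER_CHASSIS

print(f"Chassis per rack: {chassis_per_rack}")
print(f"Power density: {watts_per_u:.0f} W per U")
print(f"Rack power: at least {rack_power_kw:.1f} kW for {gpus_per_rack} GPUs")
```

At 1,600 W per U, a fully populated rack would draw at least 67 kW, which is why power delivery and cooling dominate any dense deployment plan.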

How does it compare to competitors?

| System | Form Factor | GPUs Per Unit | Base Price | Cooling |
|---|---|---|---|---|
| ASUS Vector PC | 0.5U | 2 | $2,000–$3,000 | Passive GPU, water-cooled CPU |
| Supermicro HGX Rubin | 2U | 4 | >$5,000 | Liquid-cooled GPU bays |
| Dell PowerEdge MX | 1U | 1 | >$3,500 | Air-cooled |

Vector PC achieves higher GPU density than any 1U competitor, at 30–40% lower cost.
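The density comparison follows from dividing GPUs per unit by rack height. The sketch below does that for the three systems in the table, using only the form factor and GPU count listed there.

```python
# (rack height in U, GPUs per unit), as listed in the comparison table.
systems = {
    "ASUS Vector PC": (0.5, 2),
    "Supermicro HGX Rubin": (2.0, 4),
    "Dell PowerEdge MX": (1.0, 1),
}


def gpus_per_rack_unit(height_u: float, gpus: int) -> float:
    """GPU density expressed as GPUs per rack unit (U)."""
    return gpus / height_u


density = {name: gpus_per_rack_unit(h, g) for name, (h, g) in systems.items()}

for name, d in sorted(density.items(), key=lambda item: -item[1]):
    print(f"{name:22s} {d:.1f} GPUs per U")
```

On the table's figures, the Vector PC reaches 4 GPUs per U, against 2 for the 2U Supermicro system and 1 for the 1U Dell system.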

What is the deployment timeline?

- Q2 2026: Market availability

- H2 2026: Expected third-party GPU cooling add-ons

- Q1 2027: Potential 4-GPU-per-U variant

- 2027–2028: Industry-wide adoption of 800 W-class PDUs and PCIe 6.0

Is this a new standard for HPC?

Yes. Vector PC establishes a new benchmark for space-constrained AI deployments. Its sub-U form factor, combined with competitive pricing, positions it as a bridge between edge computing and dense data center architectures. Cooling limitations remain a constraint, but the design enables rapid iteration through modular upgrades.
