CES 2026: Nvidia Open-Source AV Hits Tesla Stock; GenieSim Scales Robots; Razer Motoko Launches

TL;DR

- Mobileye’s EyeQ6H-based ADAS systems now deployed in 19M vehicles, with 9M new systems acquired in 2025 to support global OEM partnerships

- Tesla faces new lawsuit in Idaho after Model X crash kills four, following Florida jury’s $243M liability ruling over Autopilot safety claims

- CES 2026 showcases GenieSim 3.0, an AI-powered robot simulation platform with 100K+ scenarios, enabling training for 5,000 mass-produced humanoid robots

- Razer unveils Project Motoko AI headphones with dual 3K cameras and Gemini/ChatGPT integration, enabling real-time translation and contextual navigation

Nvidia's Open-Source AV Platform Triggers Market Repricing of Tesla's Software Advantage

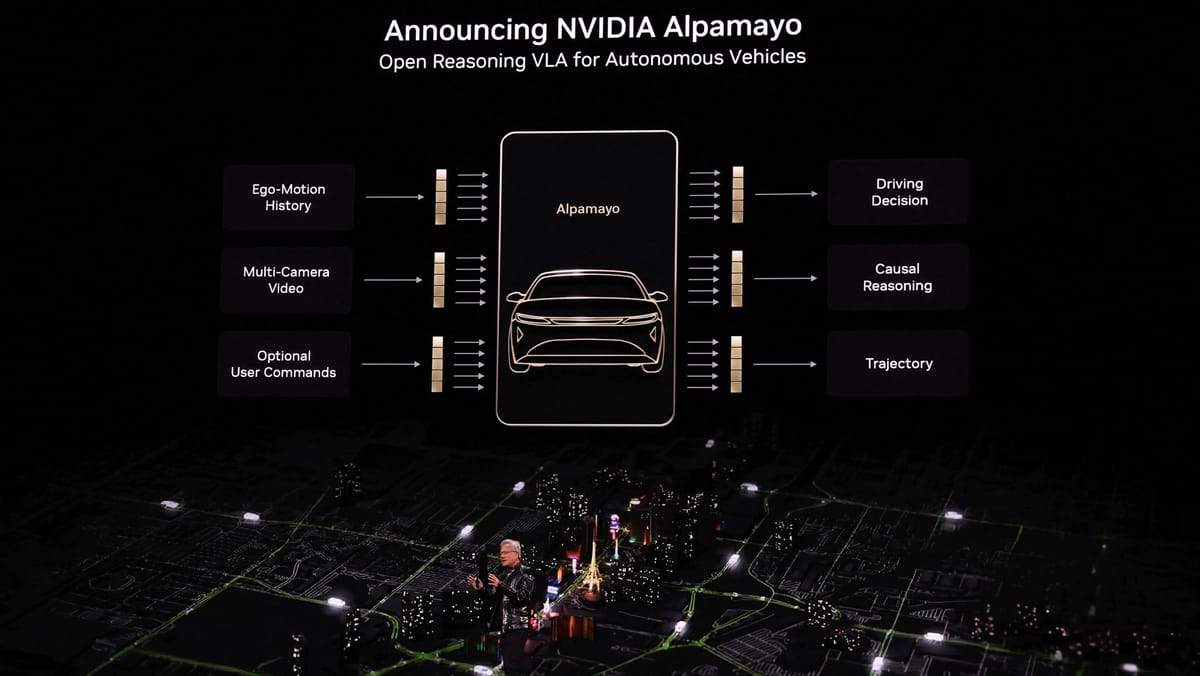

Nvidia’s release of Alpamayo-R1, an open-source Vision-Language-Action stack built on the Vera Rubin platform, coincided with a 5% decline in Tesla’s stock price, erasing approximately $10 billion in market capitalization. The timing and nature of the announcement suggest investors are revaluing Tesla’s Full Self-Driving (FSD) software as less uniquely defensible.

How are hardware and software ecosystems evolving?

At CES 2026, Nvidia, AMD, and Intel simultaneously unveiled AI hardware optimized for autonomous driving, including the AMD Ryzen 7 9850X3D and Intel Panther Lake AI chips. These developments, paired with Alpamayo-R1’s permissive licensing, enable OEMs to assemble modular, interoperable AV systems without proprietary software lock-in. Industry estimates indicate this reduces AV stack development costs by 20–30%.

Is Tesla responding strategically?

Tesla CEO Elon Musk publicly signaled willingness to license FSD to other automakers shortly after Nvidia’s announcement. This move appears defensive, aiming to monetize existing IP as open-source alternatives gain traction. Tesla’s Q4 2025 deliveries fell 16% year-over-year, amplifying pressure to offset declining volume with software-driven revenue.

What are the implications for OEMs and regulators?

Mid-tier automakers such as Mercedes and Nissan are expected to begin pilot integrations of Alpamayo-R1 on Vera Rubin hardware within six months. Open-source stacks may accelerate Level-4 robotaxi development, with Nvidia targeting commercial deployment by 2027. Greater software transparency could streamline regulatory safety certification under NHTSA guidelines.

What future scenarios are likely?

Within 12–24 months, over 30% of new robotaxi deployments may run on open-source stacks, directly challenging Tesla’s FSD licensing revenue potential. Tesla’s path forward involves either expanding FSD licensing with transparent terms or pursuing a hybrid software partnership with Nvidia. The latter could preserve market share but dilute the open-source advantage.

What actions are recommended?

Investors should consider rebalancing away from Tesla toward OEMs with flexible AV architectures. OEMs should benchmark Alpamayo-R1 against FSD on latency and perception accuracy. Regulators should establish audit and version-control standards for open-source perception systems to ensure safety without stifling innovation.

CES 2026 Highlights GenieSim 3.0 as Catalyst for Mass-Produced Humanoid Robots

GenieSim 3.0, unveiled at CES 2026, enables training for 5,000 mass-produced humanoid robots using over 100,000 simulated scenarios. The platform integrates NVIDIA Isaac Sim’s physics engine and supports automated evaluation, scene randomization, and asset generation in a unified workflow.
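Scene randomization of the kind described here can be illustrated with a minimal sketch. It assumes three randomized factors (terrain, lighting, payload) and invented value ranges; the names `Scene`, `sample_scene`, and `sample_scenes` are hypothetical and do not come from GenieSim or NVIDIA Isaac Sim.

```python
import random
from dataclasses import dataclass

# Hypothetical terrain set and value ranges, chosen only for illustration.
TERRAINS = ["flat", "ramp", "gravel", "stairs"]


@dataclass(frozen=True)
class Scene:
    terrain: str
    light_lux: float
    payload_kg: float


def sample_scene(rng: random.Random) -> Scene:
    """Draw one randomized scene from the assumed ranges."""
    return Scene(
        terrain=rng.choice(TERRAINS),
        light_lux=rng.uniform(50.0, 2000.0),
        payload_kg=rng.uniform(0.0, 20.0),
    )


def sample_scenes(n: int, seed: int = 0) -> list[Scene]:
    """Use a seeded generator so the same scene set can be regenerated."""
    rng = random.Random(seed)
    return [sample_scene(rng) for _ in range(n)]


scenes = sample_scenes(5)
for scene in scenes:
    print(scene)
```

Seeding the generator is the design choice that matters for benchmarking: two runs with the same seed evaluate a policy on identical scenes, which is what makes results comparable.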

What performance gains does GenieSim 3.0 deliver?

- Synthetic data generation exceeds 10,000 hours per week

- Scenario coverage spans 200+ robot tasks across terrain, lighting, and payload conditions

- Simulation time per scenario reduced from 15 seconds to under 3 seconds

- Physical prototype cycles cut by approximately 70% using simulation-only training
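To put the per-scenario timing figures in context, the short calculation below estimates the time for one pass over the 100,000-scenario library. It assumes scenarios run one at a time on a single worker and treats 3 seconds as an upper bound; real deployments would presumably run in parallel, so these are illustrative totals rather than reported numbers.

```python
SCENARIOS = 100_000   # scenario count quoted in the article
OLD_SECONDS = 15.0    # per-scenario time before the improvement
NEW_SECONDS = 3.0     # upper bound after it ("under 3 seconds")


def sequential_hours(scenarios: int, seconds_each: float) -> float:
    """Hours needed to run every scenario once, back to back."""
    return scenarios * seconds_each / 3600.0


old_hours = sequential_hours(SCENARIOS, OLD_SECONDS)
new_hours = sequential_hours(SCENARIOS, NEW_SECONDS)
speedup = OLD_SECONDS / NEW_SECONDS

print(f"{old_hours:.1f} h -> {new_hours:.1f} h (at least {speedup:.0f}x faster)")
# prints: 416.7 h -> 83.3 h (at least 5x faster)
```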

How has production scaled since deployment?

| Date | Milestone |
|---|---|
| Dec 2025 | AGIBOT A2 deployed at Hyundai Savannah plant using GenieSim-trained policies |
| Jan 2026 | GenieSim 3.0 launched alongside NVIDIA’s 5× AI compute GPUs and AMD MI455 processors |
| Mid 2026 | 5,000th humanoid robot produced, marking transition from prototype to series manufacturing |

Is the industry converging around a common standard?

Yes. The GenieSim Benchmark has become a reproducible performance yardstick adopted by Hyundai, Boston Dynamics, and DeepMind. The shared API with NVIDIA Isaac Sim ensures binary compatibility, reducing integration costs for OEMs. This alignment enables cross-vendor skill certification, making benchmark compliance a procurement requirement.

What infrastructure enables this scale?

NVIDIA’s next-gen GPUs and AMD’s MI455 AI processors deliver fivefold AI compute gains over the A100, reducing cloud compute costs by 40%. This hardware-software alignment supports sub-second latency for high-fidelity simulation workloads.

What is the projected trajectory?

| Year | Projection |
|---|---|
| 2026 H2 | Scenario library expands beyond 500,000 configurations |
| 2027 | Annual humanoid production exceeds 20,000 units |
| 2028–2030 | Integration with YottaScale compute (10 YottaFLOPS) enables real-time simulation-in-the-loop training |
| 2030 | Over 100,000 humanoids operational in industrial and service environments |

GenieSim 3.0 establishes simulation as the dominant paradigm for humanoid robot development, replacing incremental physical testing with scalable, data-driven training. The convergence of simulation, hardware, and benchmarking standards accelerates mass production and interoperability across the robotics ecosystem.

Razer's Project Motoko AI Headphones Redefine Audio-First Wearables with Real-Time Translation and Camera Integration

Razer's Project Motoko integrates dual 3K cameras, on-device Snapdragon X2-Elite compute, and native support for OpenAI, Google Gemini, and other LLMs into an ear-mounted form factor. The device enables sub-second real-time translation and contextual audio navigation, validated during CES 2026 demonstrations.

How does its architecture differ from competing smart glasses?

Unlike visual AR glasses from Meta and Google, Motoko prioritizes audio output with camera inputs for environmental context. Its hybrid compute model runs lightweight tasks locally and offloads heavy LLM inference to paired devices via Wi-Fi 7, aligning with industry trends in edge-AI design.
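The hybrid split between local and offloaded work can be sketched as a simple routing rule. Everything here is an assumption for illustration: the `Task` type, the cost estimate in GFLOPs, the 50 GFLOP threshold, and the fallback to on-device execution when the link is down are hypothetical and are not drawn from Razer's SDK.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    ON_DEVICE = "on_device"
    PAIRED_DEVICE = "paired_device"


@dataclass(frozen=True)
class Task:
    name: str
    est_gflops: float  # hypothetical per-request compute estimate


LOCAL_BUDGET_GFLOPS = 50.0  # hypothetical cutoff for "lightweight" work


def route(task: Task, link_up: bool) -> Target:
    """Keep light tasks local; offload heavy ones only when the link is up."""
    if task.est_gflops <= LOCAL_BUDGET_GFLOPS or not link_up:
        return Target.ON_DEVICE
    return Target.PAIRED_DEVICE


print(route(Task("wake_word", 0.5), link_up=True))
print(route(Task("llm_translation", 5000.0), link_up=True))
print(route(Task("llm_translation", 5000.0), link_up=False))
```

The third call shows the trade-off in this sketch: with no link, a heavy task still runs locally, which keeps the device responsive at the cost of slower or lower-quality results.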

What is the market potential for audio-centric AI wearables?

The global voice-AI market is projected to grow from $14.57B in 2025 to $63.38B by 2035. Motoko targets a niche within this expansion, audio-AR, where users require hands-free interaction without visual obstruction. Early adoption is expected among gaming streamers and travel influencers.
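The two market figures imply a compound annual growth rate, which the snippet below derives. It assumes steady compounding over the ten years from 2025 to 2035; the projection itself does not state a growth path.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1 / years) - 1


rate = cagr(14.57, 63.38, 2035 - 2025)
print(f"Implied CAGR: {rate:.1%}")
# prints: Implied CAGR: 15.8%
```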

What are the key technical specifications?

- Imaging: Dual 3K 60fps FPV cameras with optical stabilization

- Compute: Qualcomm Snapdragon X2-Elite (80 TOPS), on-device inference

- AI Stack: Native SDK for OpenAI, Gemini, Perplexity, Grok

- Interaction: Voice commands, contextual overlays, turn-by-turn navigation

- Battery: 30Wh; 12h AI streaming, 18h audio-only playback

- Weight: 380g; detachable camera modules
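The battery figures above imply an average power draw for each mode. The calculation assumes the full 30Wh is usable and that the quoted runtimes run the battery from full to empty, neither of which is confirmed by the spec list.

```python
BATTERY_WH = 30.0  # capacity from the spec list


def avg_power_w(capacity_wh: float, runtime_h: float) -> float:
    """Average draw in watts if the whole capacity is spent over the runtime."""
    return capacity_wh / runtime_h


ai_streaming_w = avg_power_w(BATTERY_WH, 12.0)
audio_only_w = avg_power_w(BATTERY_WH, 18.0)

print(f"AI streaming: {ai_streaming_w:.2f} W, audio-only: {audio_only_w:.2f} W")
# prints: AI streaming: 2.50 W, audio-only: 1.67 W
```

Under these assumptions, the cameras and AI workload add roughly 0.8 W on top of audio playback.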

What risks must Razer address?

- Latency: Reliance on tethered devices for heavy LLM inference may cause delays; mitigation includes on-device NPU upgrades targeting Q4 2026.

- Privacy: Continuous camera capture raises GDPR/CCPA concerns; hardware shutter and on-device encryption are recommended.

- Ergonomics: Weight exceeds open-ear earbuds; lighter materials and interchangeable ear-cups could improve adoption.

- Pricing: Projected at $549; a lower-cost "Motoko Lite" variant (audio-only, ~$349) is advised to broaden appeal.

What is the roadmap for ecosystem growth?

- Q2 2026: Developer kits shipped, enabling third-party plugins (translation, overlays, logistics alerts)

- Q3 2026: Consumer pre-orders open

- Q4 2026: Snapdragon X3 NPU integration enables standalone operation

- H1 2027: Enterprise pilots in logistics and remote medical assistance

- End 2027: Version 2.0 with dual-mic array and privacy-by-design shutter

Razer’s strategy hinges on accelerating on-device AI, ensuring privacy compliance, and expanding developer access through open SDKs and hackathons. Success will depend on execution speed and ecosystem openness.
