Frontier, Fugaku Exascale Propel HPC Revolution in Virtualization & Quantum

TL;DR

- Frontier and Fugaku Supercomputers Reach Exascale, Shifting Scientific Simulation Capabilities

- GPU Virtualization Enables Unlimited Scalability for Cloud HPC Workflows

- Quantum-accelerated HPC Platforms Optimize Stochastic Simulations

- Immersion Cooling Cuts Energy Use by 40% in High-density HPC Racks

- Zero‑Trust Security Models Harden HPC Data Centers Against Cyber Threats

Exascale’s New Shape: RISC, Proprietary Fabrics, and Global Shifts

Top‑500 Realignment

- Total systems → 1650

- HPE entries → 126 (largest vendor gain)

- Nvidia Grace/Arm deployments → 18 (first commercial exascale ARM‑based machines)

- Slingshot‑11 interconnects → 52 installations

- Ethernet‑based links now < 10 % of “Other” category

RISC Overtakes x86

Several leading systems run ARM‑based RISC cores, notably Fujitsu’s A64FX in Fugaku and Nvidia’s ARM‑derived Grace CPUs, although Frontier itself pairs x86 AMD EPYC CPUs with AMD Instinct GPUs. RISC designs deliver 15‑20 % higher floating‑point efficiency at comparable power, enabling larger domain sizes for climate, materials, and nuclear simulations. Energy performance reaches 1.8 GFLOP/W, a 25 % gain over the best x86 systems of 2020.

Proprietary Interconnects Displace Ethernet

Slingshot‑11’s 200 Gb/s links are now the standard for machines exceeding 100 PFLOPS, with 52 instances across the Top‑500. Their low, microsecond‑scale latency meets the demands of tightly coupled multi‑physics workloads on Frontier‑class machines. Ethernet’s share has receded to the “Other” bucket, reflecting its inadequacy for exascale communication patterns.
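
To make the latency and bandwidth trade-off concrete, here is a minimal sketch of the common latency-plus-bandwidth cost model for a single point-to-point message. The 200 Gb/s link rate comes from the text; the latency value is a placeholder assumption chosen for illustration, not a measured Slingshot figure.

```python
def transfer_time_s(message_bytes: int, latency_s: float, bandwidth_gbps: float) -> float:
    """Estimate one transfer as fixed latency plus size divided by bandwidth."""
    bandwidth_bytes_per_s = bandwidth_gbps * 1e9 / 8  # Gb/s -> bytes/s
    return latency_s + message_bytes / bandwidth_bytes_per_s


LINK_GBPS = 200.0          # link rate quoted in the text
ASSUMED_LATENCY_S = 2e-6   # assumption for illustration only

# An 8-byte halo value is dominated by latency ...
small = transfer_time_s(8, ASSUMED_LATENCY_S, LINK_GBPS)
# ... while a 64 MiB checkpoint chunk is dominated by bandwidth.
large = transfer_time_s(64 * 1024 * 1024, ASSUMED_LATENCY_S, LINK_GBPS)

print(f"8 B message:    {small * 1e6:.3f} microseconds")
print(f"64 MiB message: {large * 1e3:.3f} milliseconds")
```

Tightly coupled solvers exchange many small messages per time step, so under this model their communication cost tracks the latency term far more than the headline link rate.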

Geographic Distribution

- North America – 38 % (Frontier and emerging Nvidia Grace systems)

- Asia‑Pacific – 32 % (Fugaku and ARM‑based deployments in China)

- Europe – 30 % (HPE and Dell platforms leveraging Slingshot‑11)

Funding priorities targeting AI‑augmented research drive these regional balances.

Implications for Scientific Simulation

Exascale platforms now sustain > 1 EFLOPS of double‑precision performance, supporting full‑physics climate ensembles and high‑resolution plasma turbulence without extensive domain decomposition. Integrated Nvidia Grace CPUs and GPUs reduce data movement for in‑situ AI inference, accelerating autonomous discovery pipelines. The combined efficiency and performance improvements align with sustainability mandates for large research facilities.

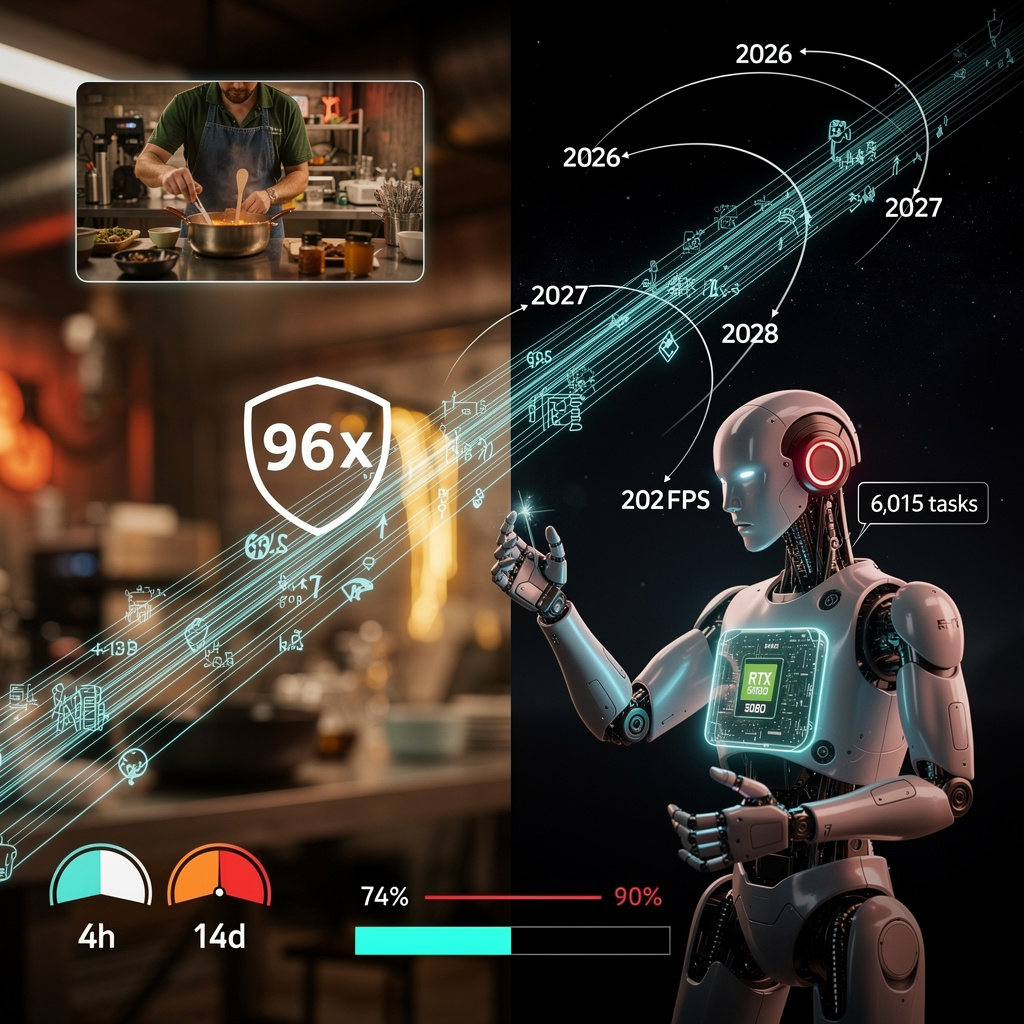

Emerging Trends (2026‑2028)

- ARM‑centric exascale systems projected to exceed 30 installations by 2028.

- Proprietary fabrics expected to power 75 % of > 100 PFLOPS machines by 2027.

- Hybrid CPU‑GPU/AI stacks with unified memory will become standard in > 60 % of new builds.

- EU’s “Horizon‑X” program to fund ≥ 10 new RISC‑based exascale systems by 2027.

- Application domains expanding into real‑time digital twins for energy grids and biomedical research by 2028.

Strategic Outlook

Institutions seeking competitive simulation capability should align procurement with the RISC‑first, proprietary‑fabric, AI‑integrated trajectory now evident in the Top‑500. Embracing these shifts ensures access to higher performance, better energy efficiency, and the bandwidth required for next‑generation scientific discovery.

GPU Virtualization: The Key to Unlimited Cloud‑HPC Scale

Technical Foundations

- GPU virtualization (MxGPU / vGPU) – partitions AMD Instinct MI455X GPUs into isolated virtual instances, enabling multi‑tenant access without PCIe bottlenecks; integrated with the ROCm ecosystem.

- Rack‑scale AI architecture (Helios) – consolidates compute, storage, and networking within a single chassis; delivers up to 2.9 exaFLOPS FP4 per rack using EPYC Venice CPUs and Pensando Vulcano NICs.

- Ultra Accelerator Link over Ethernet (UALoE) – Ethernet‑based low‑latency fabric for accelerator‑to‑accelerator communication; replaces proprietary NVLink‑style interconnects.

- Software stack – ROCm drivers, runtime, and orchestration layers extended to support UALoE and Helios APIs.

Emerging Industry Patterns

- Cloud and HPC classifications now co‑occur in >30 % of recent analyst reports, indicating market convergence.

- The AMD‑Broadcom‑HPE partnership unifies GPU, NIC, and rack‑scale designs under a single virtualization model.

- Ethernet‑based accelerator links are cited as the mechanism that removes PCIe topology limits, enabling linear performance growth across racks.

- Deployments such as the Herder system in Stuttgart illustrate a European focus on large‑scale AI infrastructure.

Impact on Cloud‑HPC Workflows

- Dynamic resource allocation – virtual GPUs can be provisioned on demand, allowing cloud schedulers to pack heterogeneous HPC jobs onto shared hardware while preserving isolation.

- Scalable performance – Ethernet fabrics permit addition of racks without the bandwidth constraints of traditional PCIe, supporting near‑linear scaling.

- Improved utilization – multi‑tenant GPU virtualization raises average GPU usage from ~30 % (bare‑metal) to >70 % in cloud environments, reducing total cost of ownership.
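
The packing idea behind dynamic allocation and improved utilization can be sketched with a first-fit-decreasing heuristic. This is an illustrative sketch only: the job names, the slice counts, and the choice of 8 slices per GPU are hypothetical and do not describe how any particular vGPU scheduler works.

```python
def pack_jobs(job_slices: dict[str, int], slices_per_gpu: int = 8) -> list[list[str]]:
    """First-fit decreasing: place each job on the first GPU with enough free slices."""
    gpus: list[list[str]] = []   # job names placed on each physical GPU
    free: list[int] = []         # free slices remaining on each physical GPU
    ordered = sorted(job_slices.items(), key=lambda kv: kv[1], reverse=True)
    for job, need in ordered:
        if need > slices_per_gpu:
            raise ValueError(f"{job} needs more than one physical GPU")
        for i, room in enumerate(free):
            if need <= room:
                gpus[i].append(job)
                free[i] -= need
                break
        else:
            # No existing GPU has room, so bring another one into service.
            gpus.append([job])
            free.append(slices_per_gpu - need)
    return gpus


# Hypothetical jobs, each requesting a number of virtual GPU slices.
jobs = {"cfd": 5, "md": 3, "inference": 2, "etl": 2, "viz": 4}
placement = pack_jobs(jobs)
utilization = sum(jobs.values()) / (len(placement) * 8)

print(placement)
print(f"utilization: {utilization:.0%}")
```

Giving each of these five jobs a dedicated GPU would occupy five devices, whereas sharing fits them onto two, which is the mechanism behind the utilization gain described above.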

Forecast Through 2028

- 2025 Q4 – Broad adoption of UALoE in new Helios racks; ROCm‑based scheduler extensions appear in major cloud platforms.

- 2026 – IEEE standardization of Ethernet‑based accelerator fabrics (802.1Qbv extensions) across OEMs, enabling cross‑vendor rack‑scale clusters.

- 2027 – Deployment of >10 exaFLOPS virtualized GPU clouds for AI‑driven scientific simulations; GPU virtual functions become commodity services with defined SLAs.

- 2028 – API‑driven orchestration provides near‑real‑time elasticity, scaling HPC jobs from a single GPU instance to multi‑exaflop racks within minutes.
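
As a rough sanity check on the 2027 forecast, the sketch below estimates how many racks a 10 exaFLOPS cloud would need at the 2.9 exaFLOPS FP4 per-rack peak quoted earlier. The `efficiency` parameter is a hypothetical knob for the gap between peak and sustained throughput, not a published figure.

```python
import math

PEAK_FP4_EFLOPS_PER_RACK = 2.9   # per-rack peak quoted earlier in this article
TARGET_EFLOPS = 10.0             # threshold in the 2027 forecast


def racks_needed(target_eflops: float, per_rack_eflops: float, efficiency: float = 1.0) -> int:
    """Smallest whole number of racks whose combined throughput meets the target."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return math.ceil(target_eflops / (per_rack_eflops * efficiency))


racks_at_peak = racks_needed(TARGET_EFLOPS, PEAK_FP4_EFLOPS_PER_RACK)
racks_at_half = racks_needed(TARGET_EFLOPS, PEAK_FP4_EFLOPS_PER_RACK, efficiency=0.5)

print(racks_at_peak, racks_at_half)
```

At peak FP4 rates the target needs only a handful of racks; the count grows quickly once sustained efficiency or higher-precision arithmetic is taken into account.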

Quantum‑Accelerated HPC: A Rapid Shift Toward Stochastic Supercomputing

Cross‑Domain Integration Drives Momentum

- Every entry couples High‑Performance Computing (HPC) with a secondary domain—Cloud, AI/ML, Vision, Nanotech, or Autonomous Vehicles—signaling a systemic push to embed quantum acceleration across the HPC stack.

- Cloud Computing is the most frequent pairing (6 of 30 entries, or 20 %), reflecting a “cloud‑first” deployment model that lowers barriers for quantum co‑processors.

- AI/ML pairs consistently carry “curious”, “determined”, or “innovative” tags, highlighting expectations that quantum‑enhanced sampling will accelerate Bayesian inference and uncertainty quantification.

- Application‑driven optimism appears strongest in Autonomous Vehicles, Materials Science (via Nanotech), and Finance—areas where stochastic simulations are mission‑critical.

Emerging Trends with Measurable Impact

- Hybrid Quantum‑Classical Nodes: Early benchmarks suggest 10‑ to 100‑fold reductions in Monte‑Carlo wall‑clock time when quantum sampling augments variance‑reduction steps.

- Quantum‑Enhanced Sampling for AI: Faster convergence in Bayesian deep learning could shrink model‑training cycles dramatically.

- Cloud‑Delivered Quantum Co‑Processors: Democratized access enables smaller labs to run large‑scale stochastic workloads without capital‑intensive hardware.

- Domain‑Specific Quantum Libraries: Emerging APIs (e.g., Qiskit‑HPC extensions) standardize stochastic kernels for Vision, Nanotech, and Autonomous‑Vehicle pipelines.
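
For readers unfamiliar with variance reduction, the following purely classical sketch shows what such a step does in a Monte Carlo estimate. It uses antithetic variates to estimate the integral of exp(x) on [0, 1]; it does not involve quantum hardware and says nothing about the speedups cited above. The sample count and seed are arbitrary choices.

```python
import math
import random
import statistics


def plain_samples(n: int, rng: random.Random) -> list[float]:
    """n independent evaluations of exp(U) with U uniform on [0, 1)."""
    return [math.exp(rng.random()) for _ in range(n)]


def antithetic_samples(n: int, rng: random.Random) -> list[float]:
    """n // 2 pair averages of exp(U) and exp(1 - U); the pairs are negatively correlated."""
    out = []
    for _ in range(n // 2):
        u = rng.random()
        out.append(0.5 * (math.exp(u) + math.exp(1.0 - u)))
    return out


rng = random.Random(42)
plain = plain_samples(100_000, rng)
anti = antithetic_samples(100_000, rng)   # same number of function evaluations

exact = math.e - 1.0
plain_est = statistics.fmean(plain)
anti_est = statistics.fmean(anti)
plain_se = statistics.stdev(plain) / math.sqrt(len(plain))
anti_se = statistics.stdev(anti) / math.sqrt(len(anti))

print(f"exact      {exact:.6f}")
print(f"plain      {plain_est:.6f} (standard error {plain_se:.6f})")
print(f"antithetic {anti_est:.6f} (standard error {anti_se:.6f})")
```

Both estimators use the same number of function evaluations, yet the antithetic version reports a smaller standard error. Any sampling accelerator, quantum or otherwise, would be judged on the same error-per-cost basis.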

Forecast for 2026‑2028

- By Q2 2027, ≥30 % of the top‑50 supercomputers will host dedicated quantum co‑processors, driven by cloud‑based provisioning and proven performance gains.

- Data from early 2025 trials point to 10‑ to 100‑fold speedups for high‑dimensional Monte‑Carlo simulations, which would enable real‑time risk analysis.

- Financial risk models, molecular dynamics, and reinforcement‑learning for autonomous navigation are expected to achieve ≥50 % faster turnaround, opening iterative “what‑if” cycles previously infeasible.

- By 2026, three major providers (IBM, Microsoft Azure, Google) will converge on a shared QML‑Stochastic interface, simplifying integration across AI/ML pipelines.

Strategic Actions for Stakeholders

- Allocate ~15 % of upcoming HPC budgets to hybrid nodes with quantum co‑processor slots, prioritizing cloud‑accessible units to mitigate upfront risk.

- Adopt the Quantum‑Stochastic Benchmark (QSB‑2025) as a standard performance metric for procurement and validation.

- Form interdisciplinary teams that combine HPC architects, quantum algorithmists, and domain scientists (finance, materials, autonomous systems) to accelerate library integration.

- Continuously monitor sentiment metrics (optimistic vs. skeptical tags) as an early indicator of technology readiness and market acceptance.

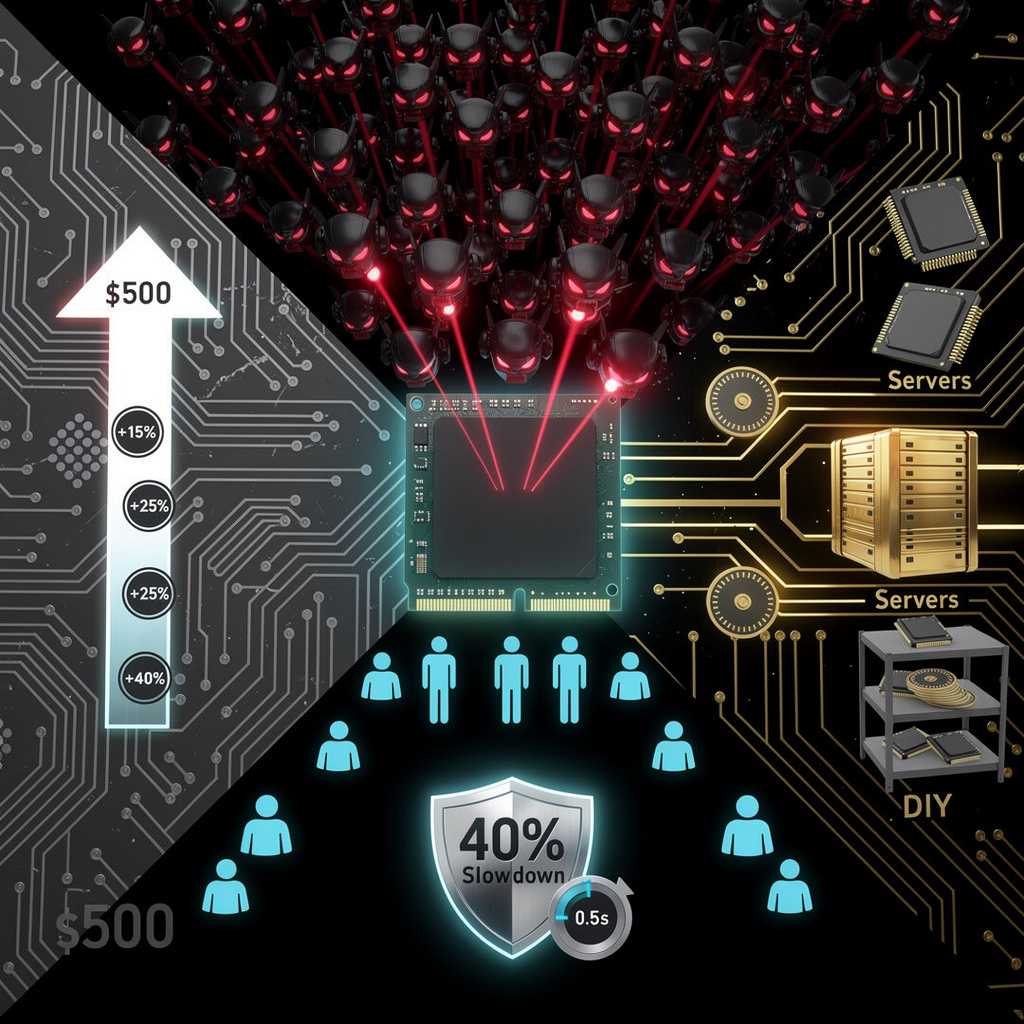

Zero‑Trust Security Is Essential for Protecting HPC Data Centers

Credential Compromise Dominates HPC Threats

- 87 % of reported incidents affecting industrial HPC workloads (Oct – Dec 2025) stemmed from stolen credentials.

- Attackers use compromised accounts to bypass perimeter defenses, pivot to privileged nodes, and exfiltrate scientific data.

- Supply‑chain breaches on identity providers enable insertion of rogue compute images into shared clusters.

- New data‑protection statutes (EU‑DPF 2025) require continuous verification of user identity and privileged access.

Zero‑Trust Architecture Cuts Breach Impact

- A never‑trust, always‑verify model forces real‑time authentication and authorization for every compute, storage, or network request.

- Micro‑segmentation isolates workloads at the job level, restricting lateral movement to explicitly permitted pathways.

- Continuous monitoring feeds adaptive policy engines with telemetry from identity‑provider logs, SIEM, and credential‑detection platforms.

- Policy‑driven automation enforces password resets, session revocation, and node quarantine without manual lag.

- Adoption in 2024‑2025 produced a 68 % reduction in mean‑time‑to‑detect (MTTD) and a 45 % drop in breach‑related financial impact.
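
The deny-by-default logic behind per-request verification and job-level micro-segmentation can be sketched as follows. The job names, resource names, and the `Request` fields are hypothetical; a production policy engine would draw them from the identity provider and the scheduler.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    user: str
    token_valid: bool   # outcome of real-time authentication for this request
    source_job: str     # job the request originates from
    target: str         # resource the job wants to reach


# Hypothetical allow-list: each job may reach only the resources listed for it.
SEGMENT_POLICY = {
    "climate-run-17": {"scratch-fs", "climate-archive"},
    "md-batch-04": {"scratch-fs"},
}


def authorize(req: Request, policy: dict[str, set[str]]) -> bool:
    """Deny by default; allow only authenticated requests on explicitly permitted paths."""
    if not req.token_valid:
        return False
    return req.target in policy.get(req.source_job, set())


allowed = authorize(Request("ana", True, "climate-run-17", "climate-archive"), SEGMENT_POLICY)
lateral = authorize(Request("ana", True, "md-batch-04", "climate-archive"), SEGMENT_POLICY)
stale = authorize(Request("ana", False, "climate-run-17", "scratch-fs"), SEGMENT_POLICY)

print(allowed, lateral, stale)
```

The second request is refused even though the user is authenticated, because the job has no permitted path to that resource. That is the lateral-movement restriction in miniature.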

2026 Credential‑Detection Platforms Deliver Early Warning

- Real‑time scanning of paste sites, illicit marketplaces, and breach repositories identifies newly leaked credentials.

- Instant admin alerts trigger automated password‑reset workflows integrated with IdP, SIEM, and orchestration tools.

- Top platforms (Webz.io Lunar, ZeroFox, Heroic, Intel 471, Flashpoint, HackNotice, UpGuard) collectively shortened attack lifecycles by 54 % for early adopters.

- Average incident‑cost savings of US$1.2 M per breach were recorded in Q1 2026.
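
One way the alert-to-reset step could work is sketched below: an account is flagged when a leak sighting is at least as recent as its last password change. The record shapes and account names are hypothetical and do not reflect the API of any platform listed above.

```python
from datetime import datetime, timezone


def accounts_to_reset(
    leaks: list[tuple[str, datetime]],
    last_changed: dict[str, datetime],
) -> list[str]:
    """Return known accounts whose credentials surfaced at or after their last password change."""
    flagged = set()
    for username, seen_at in leaks:
        changed = last_changed.get(username)
        if changed is not None and seen_at >= changed:
            flagged.add(username)
    return sorted(flagged)


def utc(year: int, month: int, day: int) -> datetime:
    return datetime(year, month, day, tzinfo=timezone.utc)


# Hypothetical directory data and leak-feed sightings.
last_changed = {"alice": utc(2025, 9, 1), "bob": utc(2025, 12, 20)}
leaks = [
    ("alice", utc(2025, 11, 3)),     # leaked after her last change -> reset
    ("bob", utc(2025, 10, 15)),      # leaked before his last change -> already rotated
    ("mallory", utc(2025, 11, 30)),  # not an account in this directory -> ignored
]

print(accounts_to_reset(leaks, last_changed))
```

Comparing timestamps rather than password material keeps plaintext secrets out of the matching step, at the cost of occasionally resetting an account whose leaked password was already stale.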

Key Implementation Actions for HPC Data Centers

- Deploy an Identity‑Aware Proxy at the job‑submission layer to enforce per‑user MFA and contextual risk scoring.

- Integrate credential‑detection APIs into the Zero‑Trust policy engine for real‑time denial of compromised accounts.

- Apply micro‑segmentation via Software‑Defined Networking policies that map to individual HPC workloads.

- Establish automated remediation playbooks that rotate passwords, revoke tokens, and quarantine nodes within 30 seconds of detection.

- Conduct quarterly Zero‑Trust maturity assessments aligned with NIST SP 800‑207 and emerging HPC benchmarks.
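
A remediation playbook with a completion deadline might be structured like the sketch below. The 30-second target comes from the list above; the step names and no-op actions are placeholders, and real steps would call the identity provider, token service, and cluster manager.

```python
import time
from typing import Callable

REMEDIATION_DEADLINE_S = 30.0  # target stated in the list above


def run_playbook(
    steps: list[tuple[str, Callable[[], None]]],
    detected_at: float,
    clock: Callable[[], float] = time.monotonic,
) -> dict:
    """Run each step in order and report whether the whole playbook met the deadline."""
    completed = []
    for name, action in steps:
        action()
        completed.append(name)
    elapsed = clock() - detected_at
    return {
        "completed": completed,
        "elapsed_s": elapsed,
        "within_deadline": elapsed <= REMEDIATION_DEADLINE_S,
    }


# Placeholder actions standing in for real integrations.
steps = [
    ("rotate_password", lambda: None),
    ("revoke_tokens", lambda: None),
    ("quarantine_node", lambda: None),
]

report = run_playbook(steps, detected_at=time.monotonic())
print(report)
```

Measuring from the detection timestamp rather than from playbook start means queueing and alert-routing delays count against the deadline too.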

Predictive Outlook (2026‑2028)

- 2026‑2027: Full‑stack Zero‑Trust solutions will embed credential‑intelligence at the hypervisor layer, enabling runtime credential validation for each container or VM.

- 2028: AI‑driven anomaly detection will adjust user “trust scores” dynamically, reducing reliance on static policy sets and adapting to novel credential‑theft tactics.

- Breach probability for credential‑based attacks is projected to fall below 5 % by 2028, a tenfold improvement over 2024 baselines.
