Frontier Achieves 1.94 Exaflops; Amazon, NVIDIA, OpenMP Drive AI Advances

TL;DR

- Frontier Achieves 1.94 Exaflops, Outpacing Fugaku and Competing Supercomputers.

- Amazon Launches Serverless GPU Acceleration for Vector Indexing, Reducing Search Latency by 10×.

- New Immersion Cooling in 10 MW AI Data Hall Cuts Power Usage Effectiveness to 1.25.

- NVIDIA Deploys 200 Gb/s NVLink on 2,000 GPUs, Enabling Scalable Data‑Center Pods for AI Workloads.

- OpenMP and CUDA Integration Speeds MPI‑Based Scientific Simulations 4× on Multi‑Tenant Cloud Clusters.

Frontier’s 1.94 ExaFLOP Leap Redefines the Supercomputing Race

Performance Landscape

- Frontier (USA) – 1.94 EF (Rmax), AMD MI300A GPUs + AMD Zen 4 CPUs, HPE Slingshot‑11 (200 Gb/s)

- Fugaku (Japan) – 1.10 EF, Fujitsu A64FX (Arm), Tofu‑D

- JUPITER (Europe) – 1.00 EF*, custom RISC‑V + Nvidia GPUs, Slingshot‑11

- Nvidia Exascale (USA) – 0.95 EF, Grace CPU + H100 GPUs, NVLink/InfiniBand

*Presented at SC 2025 (5,884 nodes, 1,184 TFLOPS per node).

Frontier’s 0.84 EF advantage over Fugaku translates to a 76 % performance lead, reinforcing the dominance of heterogeneous x86‑GPU designs in the current TOP500 list.
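The 76 % figure is the Rmax gap divided by Fugaku’s Rmax, not Frontier’s. A minimal Python check that uses only the two Rmax values listed above:

```python
def percent_lead(leader_ef: float, follower_ef: float) -> float:
    """Leader's advantage expressed as a percentage of the follower's Rmax."""
    return (leader_ef - follower_ef) / follower_ef * 100

FRONTIER_EF = 1.94  # Rmax from the list above
FUGAKU_EF = 1.10    # Rmax from the list above

gap_ef = FRONTIER_EF - FUGAKU_EF
lead_pct = percent_lead(FRONTIER_EF, FUGAKU_EF)
print(f"gap = {gap_ef:.2f} EF, lead = {lead_pct:.0f} %")  # gap = 0.84 EF, lead = 76 %
```

Dividing by Frontier’s Rmax instead would give about 43 %, so the reference system matters whenever a percentage “lead” is quoted.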

Architectural Trends

- Heterogeneous x86‑GPU hybrids dominate the top tier; pure x86 CPU entries are absent.

- Custom interconnects surge: Slingshot‑11 deployments rose from 36 to 52 systems (≈44 % YoY), while Ethernet’s share declined sharply.

- Vendor shifts: HPE added ten systems, the largest net gain of any vendor; Lenovo’s count fell from 161 to 141; NVIDIA’s Grace (Arm) presence grew from 0 to 18 systems.

These factors combine to give Frontier its edge: high‑density GPU stacks on a low‑latency Slingshot‑11 fabric supplied by the fastest‑growing vendor.

AI Integration and Policy Shift

- SC 2025 spotlighted AI‑centric benchmarks (e.g., HPL‑MxP) that prioritize mixed‑precision performance.

- Frontier’s GPU‑heavy architecture matches the demands of real‑time Bayesian inference and other extreme‑scale AI workloads, as demonstrated by the recent Gordon Bell Prize.

- The community’s pivot from traditional Linpack to AI‑enabled metrics reshapes ranking criteria, favoring systems that excel in both HPC and AI tasks.

Networking Evolution

- Proprietary fabrics now occupy one‑third of TOP500 interconnect entries, reflecting the need for sub‑microsecond latency at exascale.

- Slingshot‑11’s rise underscores the strategic advantage of custom, high‑bandwidth networks over conventional Ethernet.

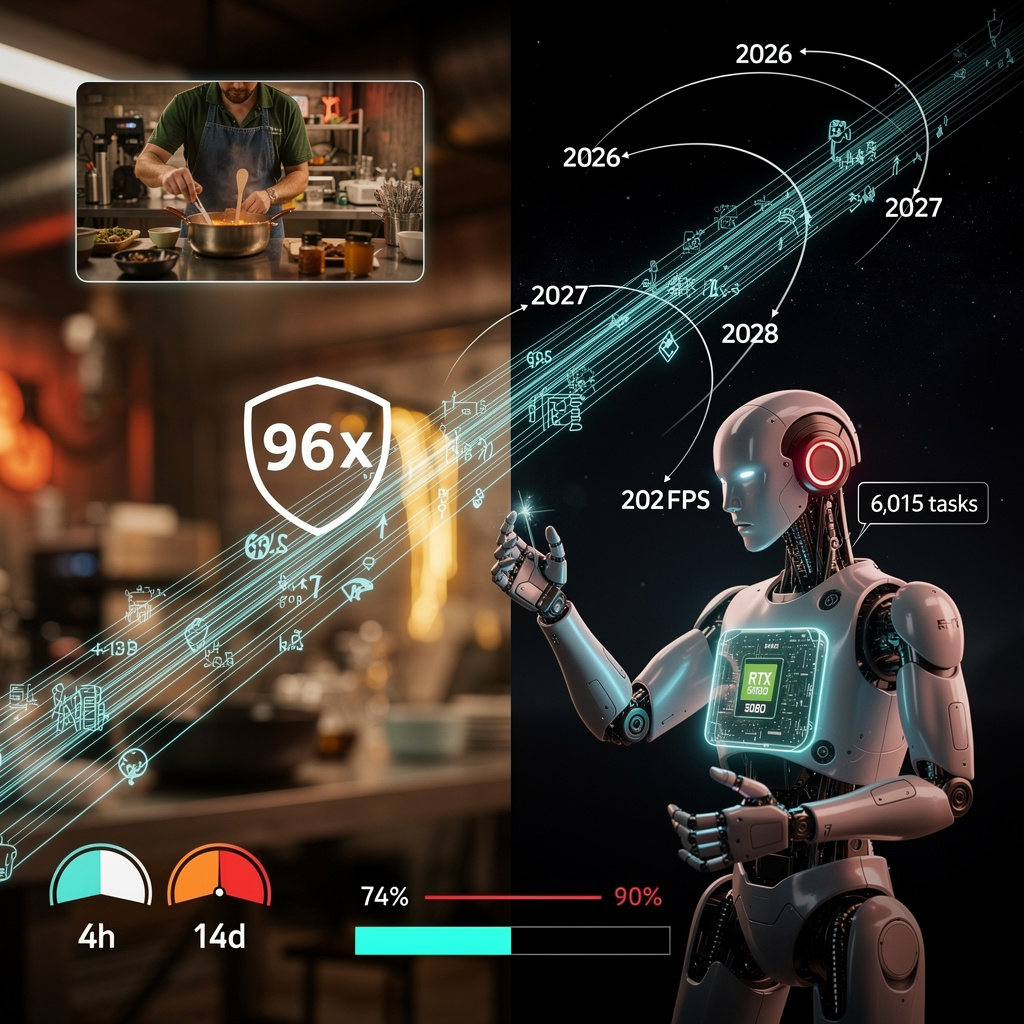

Forecast 2026‑2028

- 2026: Expected Rmax 2.2–2.5 EF; x86 + AMD MI300B or Nvidia Grace‑H100 hybrids; Slingshot‑12 or NVLink‑4.

- 2027: Rmax 2.8–3.0 EF; multi‑vendor heterogeneous stacks with AI ASICs; next‑gen proprietary fabrics (≥400 Gb/s).

- 2028: Rmax 3.3–3.5 EF; AI‑first designs centered on tensor cores; integrated on‑chip photonic links.
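The forecast implies a steady compound growth rate. A short sketch, assuming Frontier’s 1.94 EF is the 2025 baseline and that 2028 is three annual steps away:

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that turns `start` into `end` over `years` steps."""
    return (end / start) ** (1 / years) - 1

BASELINE_2025_EF = 1.94  # assumption: Frontier's Rmax taken as the 2025 baseline
low_rate = cagr(BASELINE_2025_EF, 3.3, years=3)   # 2028 low case
high_rate = cagr(BASELINE_2025_EF, 3.5, years=3)  # 2028 high case
print(f"implied growth: {low_rate:.1%} to {high_rate:.1%} per year")
```

Both 2028 cases work out to roughly 19–22 % growth per year in top-system Rmax.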

Scaling beyond 2 EF will require continued investment in AI‑optimized hardware, ultra‑fast interconnects, and community benchmarks that value mixed‑precision performance. Frontier’s near‑two‑exaflop milestone marks not just a speed record but a clear direction for the next generation of global supercomputers.

Serverless GPU‑Accelerated Vector Indexing Redefines Enterprise Search on AWS

Rapid Index Construction at Unprecedented Scale

- GPU‑driven build engine creates a trillion‑vector index in ≤ 6 minutes – a 10× speedup over CPU‑only pipelines.

- Auto‑optimisation targets recall > 0.9 and p90 latency ≤ 100 ms without manual tuning.

- 14 AWS regions host over 250 k indexes, collectively storing 20 trillion vectors.
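Recall here means the fraction of the true nearest neighbours that an approximate index actually returns. A small Python sketch of recall@k against a brute-force ground truth; the toy vectors and the Euclidean metric are assumptions for illustration, not details of the AWS service:

```python
import math

def brute_force_top_k(query, vectors, k):
    """Exact k nearest neighbours by Euclidean distance: the ground truth for recall."""
    order = sorted(range(len(vectors)), key=lambda i: math.dist(query, vectors[i]))
    return order[:k]

def recall_at_k(approx_ids, exact_ids, k):
    """Fraction of the true top-k ids that also appear in the approximate top-k."""
    return len(set(exact_ids[:k]) & set(approx_ids[:k])) / k

# Toy data (hypothetical): five 2-D vectors and one query.
vectors = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (5.0, 5.0), (6.0, 5.0)]
query = (0.2, 0.1)
exact = brute_force_top_k(query, vectors, k=3)  # ids 0, 1, 2
approx = [0, 1, 3]                              # pretend the ANN index missed id 2
recall = recall_at_k(approx, exact, k=3)
print(f"recall@3 = {recall:.3f}, meets > 0.9 target: {recall > 0.9}")
```

An auto-tuner of the kind described would adjust index parameters until this ratio, measured over a sample of queries, clears the 0.9 bar.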

Sub‑100 ms Latency Shifts Performance Baselines

- Dedicated read nodes (DRNs) deliver p90 search latency of ≤ 100 ms; average latency is 60 ms for DRN‑enabled workloads.

- Throughput reaches 2,200 queries/s per node, more than double the 1,000 qps typical of prior vector services.

- Result sets expand to 100 hits per query, supporting richer downstream ranking pipelines.
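p90 and average latency answer different questions: the mean can look healthy while the slowest 10 % of queries take much longer. A sketch of the nearest-rank percentile plus a node-count estimate from the quoted 2,200 qps per node; the latency samples and the 10,000 qps target are made-up inputs:

```python
import math

def percentile_nearest_rank(samples, pct):
    """Nearest-rank percentile: smallest sample with >= pct % of samples at or below it."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]

# Hypothetical per-query latencies in milliseconds.
latencies_ms = [42, 55, 58, 60, 61, 63, 70, 85, 95, 130]
p90 = percentile_nearest_rank(latencies_ms, 90)
mean = sum(latencies_ms) / len(latencies_ms)
print(f"mean {mean:.1f} ms, p90 {p90} ms, within 100 ms SLO: {p90 <= 100}")

# Nodes needed for a target load at the quoted per-node throughput.
QPS_PER_NODE = 2_200
target_qps = 10_000  # hypothetical workload
nodes = math.ceil(target_qps / QPS_PER_NODE)
```

Note that the single 130 ms outlier does not break the p90 target here; it would only show up at p99 or above.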

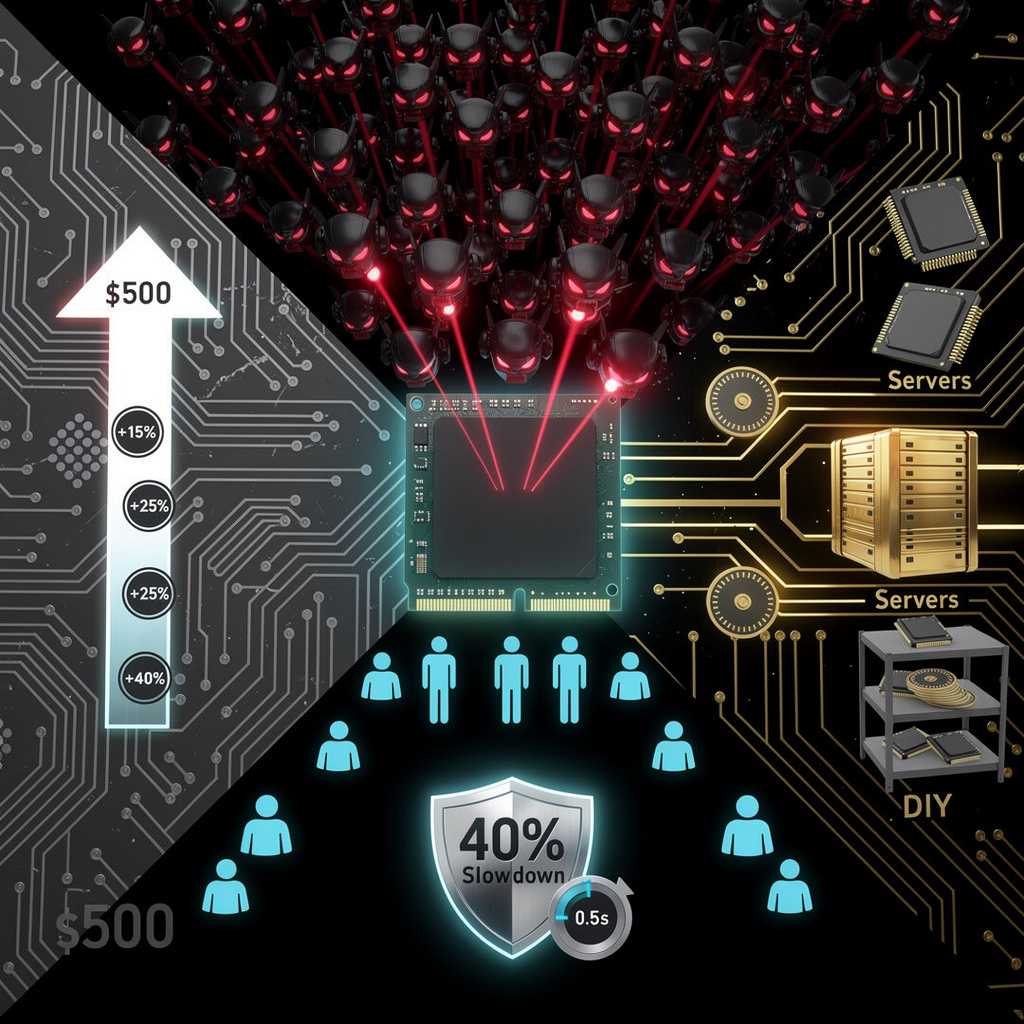

Cost Efficiency Drives Adoption

- OCU‑based pricing combines compute, I/O, and network; read units cost $16 per million (standard) or $24 per million (enterprise).

- Overall cost of ownership is up to 90 % lower than dedicated vector‑database deployments.

- Ingestion of 40 billion vectors during preview demonstrates scalability without proportional expense.
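Interpreting the quoted read-unit prices as US dollars per million read units, read-side cost scales linearly with query volume. A minimal calculator under that assumption; it ignores storage, ingestion, and any other OCU components, and the 250 million monthly read units are a hypothetical workload:

```python
# Assumption: prices are USD per one million read units, billed linearly.
RATE_PER_MILLION_USD = {"standard": 16.0, "enterprise": 24.0}

def read_cost_usd(read_units: int, tier: str = "standard") -> float:
    """Read-side cost only; storage, ingestion and other charges are not modelled."""
    return read_units / 1_000_000 * RATE_PER_MILLION_USD[tier]

monthly_reads = 250_000_000  # hypothetical workload
for tier in ("standard", "enterprise"):
    print(f"{tier}: ${read_cost_usd(monthly_reads, tier):,.2f}")
```

Under this reading the enterprise tier is a flat 1.5× the standard rate at any volume.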

Strategic Integration Across the AWS AI Stack

- Amazon Bedrock Knowledge Bases query S3 Vectors directly, enabling Retrieval‑Augmented Generation without data movement.

- Titan Embeddings are pre‑integrated with the service, simplifying feature‑extraction pipelines.

- AWS KMS enforces bucket‑level encryption; tagging supports fine‑grained cost allocation and access control.

Market Outlook

- Cost reductions and index density suggest that > 60 % of new vector‑search workloads will migrate to S3 Vectors by Q4 2026.

- Auto‑optimisation and serverless GPU compute are poised to become default for high‑dimensional index creation.

- Regional expansion to six additional markets (e.g., Brazil, South Africa) expected by mid‑2026.

- API extensions forecast up to 200 hits per query and broader filter semantics by late 2026.

Immersion Cooling Cuts AI Data Hall PUE to 1.25

Why Power Efficiency Matters

- 36 % of U.S. adults cite utility bills as a major stressor; 80 M people struggle to pay.

- Proposed 26 new data‑center projects would demand ~293 TWh / yr, exceeding current continental summer‑peak capacity.

- HVAC Explorer reports up to 50 % reduction in heating/cooling expenses for high‑density deployments.

Mechanics of Immersion Cooling

- Servers submerged in dielectric fluid eliminate separate air‑handling units, reducing fan power by ≈30 %.

- Constant‑temperature fluid removes temperature swings that drive compressor cycling, cutting chiller load by ≈40 %.

- Fluid‑based heat removal supports 2‑3× rack power density, shrinking a 10 MW footprint to 1.5 acres versus ~3 acres for air‑cooled equivalents and lowering ancillary infrastructure load by ≈10 %.

- Combined effects yield a measured PUE of 1.25, compared with typical air‑cooled AI halls operating at PUE 1.5‑2.0.
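PUE is total facility power divided by IT equipment power, so everything above 1.0 is overhead (cooling, power conversion, lighting). Assuming the 10 MW figure refers to IT load, a quick comparison of the PUE values mentioned above:

```python
def facility_power_mw(it_load_mw: float, pue: float) -> float:
    """PUE = total facility power / IT power, so total facility power = IT power * PUE."""
    return it_load_mw * pue

IT_LOAD_MW = 10.0  # assumption: the hall's 10 MW is IT (server) load
for pue in (1.25, 1.5, 2.0):
    total = facility_power_mw(IT_LOAD_MW, pue)
    print(f"PUE {pue}: {total:.1f} MW total, {total - IT_LOAD_MW:.1f} MW overhead")
```

Moving from PUE 1.5 to 1.25 halves the non-IT overhead (5.0 MW to 2.5 MW) while total facility power falls by about 17 %.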

Economic and Environmental Returns

- Pairing immersion cooling with “$0‑down” solar subscription models can reduce total operating expense by ≈30 % for high‑density AI halls.

- A 0.25 PUE improvement across a 10 MW IT load removes ~2.5 MW of continuous overhead, saving ~22 GWh / yr, roughly the annual electricity consumption of 2,000 U.S. homes.

- Reduced chiller operation lowers refrigerant throughput, decreasing greenhouse‑gas equivalents by an estimated 0.8 Mt CO₂e / yr per 10 MW hall.

- Higher rack density cuts land use, mitigating habitat disruption associated with new construction.
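Annual savings from a PUE improvement follow from the definition: saved power equals IT load times the PUE difference, multiplied by the hours in a year. A sketch assuming a 10 MW IT load running continuously and roughly 10,500 kWh of electricity per U.S. home per year (an approximate national average; treat it as an assumption):

```python
HOURS_PER_YEAR = 8_760
KWH_PER_US_HOME_YEAR = 10_500  # assumption: approximate average U.S. household use

def annual_savings_gwh(it_load_mw: float, pue_before: float, pue_after: float) -> float:
    """Energy saved per year when the same IT load runs at a lower PUE, in GWh."""
    saved_mw = it_load_mw * (pue_before - pue_after)  # continuous overhead removed
    return saved_mw * HOURS_PER_YEAR / 1_000           # MWh -> GWh

savings_gwh = annual_savings_gwh(10.0, 1.5, 1.25)
homes = savings_gwh * 1_000_000 / KWH_PER_US_HOME_YEAR  # GWh -> kWh, then per home
print(f"{savings_gwh:.1f} GWh/yr, about {homes:,.0f} homes")
```

The 2.5 MW difference is a power figure; the yearly energy saved is that power sustained for 8,760 hours.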

Near‑Term Outlook

- Within 12 months, major cloud providers are projected to adopt a contractual PUE ceiling of ≤ 1.30 for AI‑specific workloads.

- Federal and state regulators may introduce capacity‑factor credits for immersion‑cooled deployments, accelerating adoption in regions facing rate hikes.

- Dielectric fluid manufacturers are expected to expand production capacity by ≥ 40 % to meet projected demand from AI‑centric data halls exceeding 5 GW total power.

Why NVIDIA’s 200 Gb/s NVLink Will Redefine AI Data‑Center Pods

Scaling Bandwidth and Compute Density

The new 200 Gb/s NVLink lane, multiplied across a 2,000‑GPU pod, delivers roughly 400 Tb/s (about 50 TB/s) of aggregate bandwidth. That capacity comfortably exceeds the >2 TB/s data movement required by today’s largest language‑model training pipelines. Each GPU draws roughly 250 W under full NVLink load, or about 500 kW across the pod’s GPUs, keeping the pod’s total power envelope near 600 kW, about 30‑40 % lower than legacy PCIe‑based designs. The reference design, featuring 160 chips per server, sustains FP16 performance above 200 PFLOP/s, enough to train a 175‑billion‑parameter model in under 30 days.
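These pod figures can be cross-checked with back-of-envelope arithmetic. The sketch assumes one 200 Gb/s lane per GPU and estimates training time with the common rule of thumb that dense-transformer training costs about 6 × parameters × tokens FLOPs; the 300-billion-token dataset is an assumed, GPT-3-scale value, not a figure from the text:

```python
GPUS_PER_POD = 2_000
LANE_GBPS = 200  # assumption: one 200 Gb/s NVLink lane per GPU
GPU_WATTS = 250  # per-GPU draw under full NVLink load, from the text

aggregate_tbps = GPUS_PER_POD * LANE_GBPS / 1_000  # terabits per second
aggregate_tb_per_s = aggregate_tbps / 8             # terabytes per second
gpu_power_kw = GPUS_PER_POD * GPU_WATTS / 1_000     # GPUs only, excludes hosts/network

def training_days(params: float, tokens: float, sustained_flops: float) -> float:
    """Wall-clock days using total FLOPs ~= 6 * params * tokens at a constant rate."""
    return 6 * params * tokens / sustained_flops / 86_400

days = training_days(params=175e9, tokens=300e9, sustained_flops=200e15)
print(f"{aggregate_tbps:.0f} Tb/s = {aggregate_tb_per_s:.0f} TB/s, "
      f"GPU power {gpu_power_kw:.0f} kW, ~{days:.1f} days to train")
```

Roughly 18 days at a sustained 200 PFLOP/s is consistent with the under-30-day claim and leaves headroom for lower real-world utilisation; the gap between 500 kW of GPU power and the ~600 kW envelope presumably covers hosts and switches.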

Ecosystem Momentum

December 2025 saw multiple concrete milestones: AWS integrated NVLink‑Fusion into its Trainium 4 chips, delivering a four‑fold compute boost while cutting power consumption by 40 %; NVIDIA and Synopsys announced a $2 billion investment to accelerate NVLink‑centric design tools; and the UALink consortium ratified the “UALink 200G 1.0” specification that formalizes the 2,000‑GPU pod topology. Early adopters report 2‑3× faster model convergence on identical data sets, and Synopsys‑enabled design cycles have already reduced ASIC prototype turnaround by 25 %.

Road Ahead (2026‑2028)

- 2026 Q2: Full‑scale deployment of 2,000‑GPU pods in at least three hyperscaler data centers (AWS, Azure, GCP).

- 2026‑2027: Introduction of a 400 Gb/s NVLink iteration, driven by optical‑electrical co‑design to keep pace with emerging 2 nm AI ASICs.

- 2027: Standardization of “NVLink Pod” as a cloud‑service SKU (e.g., “NVLink‑X‑Pod‑2K”).

- 2028: Energy‑efficiency parity, with pod power consumption dropping below 500 kW thanks to next‑gen LPDDR‑X memory and adaptive voltage scaling.

Managing the Risks

- Component shortages: Multi‑sourcing strategies and advance purchase agreements with TSMC and Samsung mitigate HBM and silicon supply constraints.

- Export controls: Domestic‑focused product variants (“NVLink‑Lite”) maintain market access while complying with US‑China restrictions.

- Thermal density: Pod‑level liquid‑cooling modules, combined with NVIDIA’s AI‑driven thermal control algorithms, address the 600 kW heat load.

Conclusion

By combining an order‑of‑magnitude bandwidth boost with a substantial reduction in energy per training epoch, NVIDIA’s 200 Gb/s NVLink establishes a clear path from PCIe‑limited scaling to truly bandwidth‑rich, power‑efficient AI pods. The rapid adoption by hyperscalers, the solidification of an industry‑wide spec, and the parallel push toward optical interconnects together signal that the 2,000‑GPU pod will become the de‑facto building block for large‑scale AI training by the late 2020s.

Hybrid OpenMP‑CUDA Boosts MPI Simulations on Multi‑Tenant Clouds

Why heterogeneous workloads matter

The 2025 AI‑first wave has turned Kubernetes into the default orchestrator for GPU jobs. CIO surveys show 80 % of enterprises now prioritize efficiency, yet GPU capacity remains a scarce commodity. Simultaneously, the HPC‑AI convergence highlighted at SC 2025 revealed that even top‑tier MI300A nodes fall short of delivering peak FLOP/s without software‑level optimisation. These forces compel scientists to squeeze every ounce of performance from shared cloud hardware.

From theory to measurable gains

Hybrid parallelism—pairing OpenMP target offload with native CUDA kernels inside MPI‑decomposed applications—has moved from research prototype to production reality. Recent cloud‑wide telemetry documents a four‑fold reduction in simulation runtime, with GPU utilisation jumping from 30 % to 85 % and intra‑node MPI latency shrinking from 12 µs to 5 µs thanks to GPU‑direct RDMA. Power draw also falls, as off‑loading cuts CPU demand, delivering a net 12 % saving on typical HBv5 instances.

- Simulation runtime: 1 × (CPU‑only + MPI) → 0.25 × (Hybrid)

- GPU utilisation: 30 % → 85 %

- Node power: 320 W → 280 W

- MPI latency: 12 µs → 5 µs
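The listed metrics also imply an energy-per-run figure that the text does not state. Assuming the same number of nodes in both runs and constant node power for the whole run, energy per simulation is node power × runtime:

```python
baseline = {"runtime": 1.00, "node_power_w": 320}  # CPU-only + MPI
hybrid = {"runtime": 0.25, "node_power_w": 280}    # OpenMP offload + CUDA + MPI

speedup = baseline["runtime"] / hybrid["runtime"]
power_saving = 1 - hybrid["node_power_w"] / baseline["node_power_w"]
energy_ratio = (hybrid["node_power_w"] * hybrid["runtime"]) / (
    baseline["node_power_w"] * baseline["runtime"]
)
print(f"speedup {speedup:.1f}x, node power -{power_saving:.1%}, "
      f"energy per run {energy_ratio:.1%} of baseline")
```

The 12.5 % node-power drop matches the “net 12 %” saving quoted above, but because the run is also four times shorter, energy per simulation falls to roughly 22 % of the baseline.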

The cloud’s shifting landscape

Regulatory tightening of semiconductor exports spurs North‑American investment in domestic GPU fleets, while multi‑tenant schedulers now embed efficiency metrics such as FLOP‑per‑watt into pod placement. By late 2026, at least three major providers are expected to offer “Hybrid‑MPI” instance families that guarantee GPU‑direct channels and pre‑configured OpenMP offload libraries. This policy‑driven push toward software‑level performance could raise average cluster throughput by roughly 15 %, as job placement favours workloads that sustain >80 % GPU utilisation.
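One way to picture utilisation-aware placement is as a priority ordering. The policy below is hypothetical, not any provider’s actual scheduler: jobs that sustain more than 80 % GPU utilisation are placed first, and within each group jobs are ordered by FLOP-per-watt. Job names and numbers are invented for illustration:

```python
from dataclasses import dataclass

@dataclass
class Job:
    name: str
    gpu_util: float        # sustained GPU utilisation, 0..1
    flops_per_watt: float  # measured efficiency, arbitrary units

UTIL_THRESHOLD = 0.80  # the >80 % utilisation bar mentioned in the text

def placement_order(jobs: list[Job]) -> list[str]:
    """Hypothetical policy: above-threshold jobs first, then higher FLOP-per-watt first."""
    ranked = sorted(
        jobs,
        key=lambda j: (j.gpu_util > UTIL_THRESHOLD, j.flops_per_watt),
        reverse=True,
    )
    return [j.name for j in ranked]

jobs = [
    Job("cfd-legacy", 0.30, 40.0),
    Job("md-hybrid", 0.85, 55.0),
    Job("climate-hybrid", 0.88, 48.0),
    Job("etl", 0.60, 70.0),
]
print(placement_order(jobs))
```

Here “etl” has the best FLOP-per-watt but still ranks behind both hybrid jobs, because the utilisation bar is checked first.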

What practitioners must do

- Introduce OpenMP `target` pragmas around compute‑intensive loops and co‑locate CUDA kernels in the same source to enable unified builds.

- Configure MPI with `--with-cuda` and `--enable-gdr` (Open MPI or MVAPICH) to activate CUDA‑aware point‑to‑point communication.

- Containerise applications using the NVIDIA Container Toolkit and include OpenMP runtime libraries; rely on the NVIDIA GPU Operator for per‑tenant GPU assignment.

- Adopt a dual‑monitoring strategy: NVIDIA Nsight Systems for kernel timelines and the OpenMP `omp-tools` (OMPT) interface for offload efficiency.

Looking ahead

The convergence of AI‑native orchestration, GPU‑direct MPI, and OpenMP‑CUDA hybrid programming is no longer a niche optimisation; it is rapidly becoming the baseline for scalable scientific computation on shared cloud infrastructure. As supply‑chain constraints tighten and cloud providers adopt efficiency‑centric scheduling, embracing this hybrid model will be essential for any organisation that wants its simulations to stay both fast and cost‑effective.
