DeepSeek, OpenAI, and Nvidia Move AI Frontiers: New Models, Better Coding, and Open‑Source Infrastructure

TL;DR

- DeepSeek releases two reasoning‑capable AI models: V3.2 and V3‑2 Speciale.

- OpenAI launches GPT‑5.1, setting a new coding benchmark record.

DeepSeek’s Reasoning‑Focused Leap: What It Means for the AI Frontier

Release cadence and market positioning

DeepSeek announced a public beta of V3.2 and the V3‑2 Speciale variant on 1 Dec 2025, followed by a press release the next day that highlighted a sparse‑attention architecture designed to halve inference FLOPs versus the prior V3.1‑Terminus. Both models sit at the top of the 685‑billion‑parameter family and are marketed as “reasoning‑capable” successors aimed at high‑accuracy competition tasks.

Technical advances

The core dense transformer now incorporates token re‑checking and DeepSeek Sparse Attention (DSA), delivering a 50 % reduction in compute cost. Post‑training investment exceeds 10 % of the pre‑training budget, a stark shift from the 1 % norm of earlier releases, and focuses on chain‑of‑thought reinforcement learning without intermediate supervision. Each model supports a 128 K‑token context window, which mitigates drift in long‑form reasoning, and the Speciale variant adds redesigned attention routing for deeper logical chains and multimodal (text + image) handling. Full‑scale inference demands a GPU/TPU cluster costing about $25 M, comparable to H100‑class systems or emerging domestic Chinese ASICs.
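To make the sparse‑attention idea concrete, here is a minimal sketch of generic top‑k sparse attention for a single query. It is not DeepSeek's implementation: the function names, the top‑k selection rule, and the toy vectors are all assumptions chosen for illustration.

```python
import math


def _softmax_weighted_sum(scores, values):
    """Softmax the scores, then return the weighted average of the value vectors."""
    peak = max(scores)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) / total for d in range(dim)]


def _scaled_scores(query, keys):
    """Scaled dot-product score of the query against every key."""
    scale = 1.0 / math.sqrt(len(query))
    return [scale * sum(q * k for q, k in zip(query, key)) for key in keys]


def dense_attention(query, keys, values):
    """Standard attention: the query attends to every key."""
    return _softmax_weighted_sum(_scaled_scores(query, keys), values)


def topk_sparse_attention(query, keys, values, k):
    """Hypothetical sparse variant: attend only to the k highest-scoring keys.

    Note: this sketch still scores every key before selecting. A real system
    would need a cheaper selection step for the compute saving to materialize.
    """
    scores = _scaled_scores(query, keys)
    top = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:k]
    return _softmax_weighted_sum([scores[i] for i in top], [values[i] for i in top])


# Toy example with made-up vectors.
query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]
dense_out = dense_attention(query, keys, values)
sparse_out = topk_sparse_attention(query, keys, values, k=1)
```

With `k` equal to the number of keys, the sparse variant reproduces dense attention. Smaller `k` trades some fidelity for fewer softmax and value‑aggregation operations per query, which is the general mechanism behind the compute savings described above.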

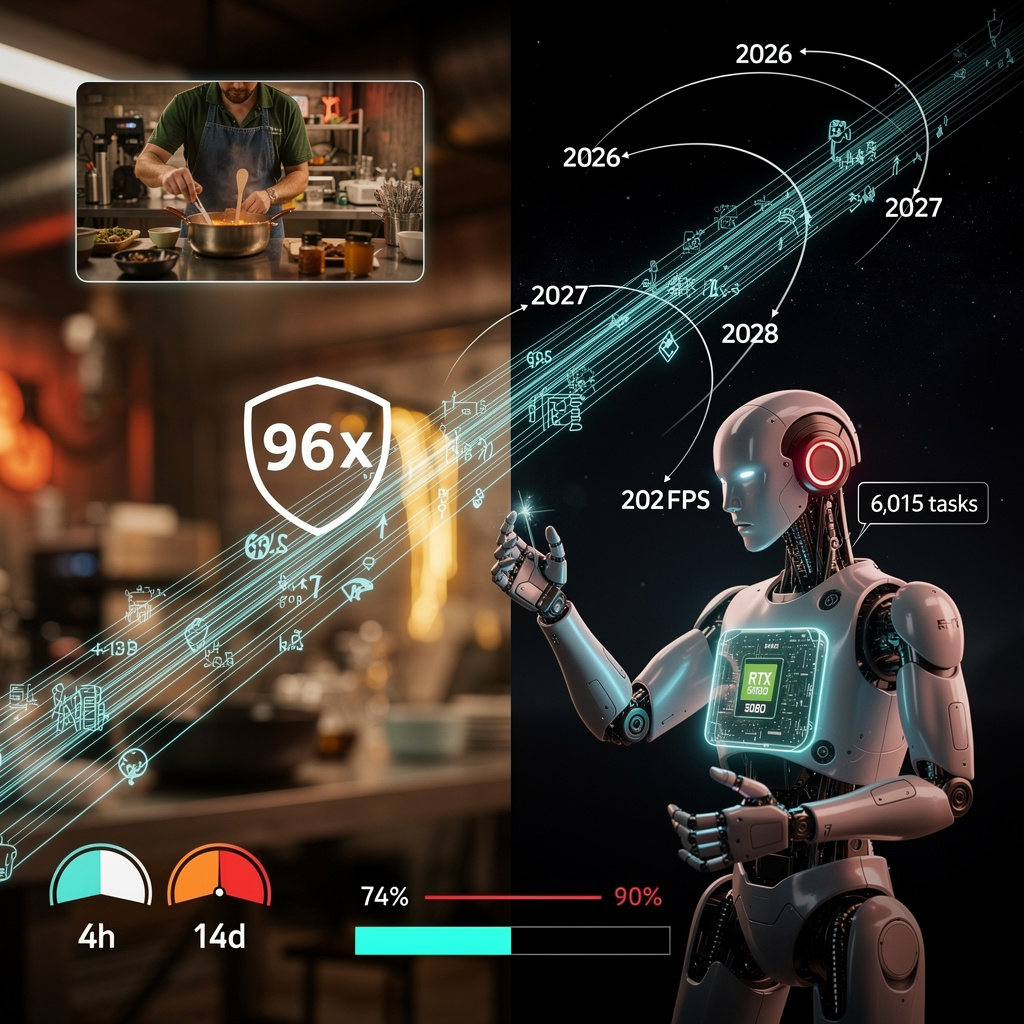

Benchmark performance

On pure reasoning tests, V3‑2 Speciale achieved 96 % on AIME 2025 and a 99.2 % pass‑rate on HMMT 2025, outpacing Gemini 3 Pro’s 95 % and 97.5 % respectively. In code generation (LiveCodeBench) the models lag behind GPT‑5’s 94 % but remain competitive at 84 % accuracy. Both variants secured gold medals in IMO 2025 team challenges through model‑aided solutions.
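When scores sit this close to 100 %, comparing error rates can be more informative than comparing raw accuracy. The helper below is an illustrative sketch (the function name is ours, not part of any benchmark toolkit) that computes the relative error reduction between two scores given in percent.

```python
def relative_error_reduction(score_a, score_b):
    """Fraction by which model A's error rate is lower than model B's.

    Both scores are accuracies in percent (0-100).
    """
    error_a = 100.0 - score_a
    error_b = 100.0 - score_b
    if error_b <= 0:
        raise ValueError("model B has no errors; the ratio is undefined")
    return (error_b - error_a) / error_b


# Figures quoted in the paragraph above.
aime_gain = relative_error_reduction(96.0, 95.0)   # V3-2 Speciale vs Gemini 3 Pro, AIME 2025
hmmt_gain = relative_error_reduction(99.2, 97.5)   # V3-2 Speciale vs Gemini 3 Pro, HMMT 2025
```

On the quoted figures this gives roughly 20 % fewer errors on AIME 2025 and roughly 68 % fewer on HMMT 2025, so a one‑point accuracy gap near the ceiling is larger than it looks.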

Competitive landscape

DeepSeek’s open‑source parity with closed‑source leaders marks the first instance where an openly licensed model matches top‑tier reasoning scores. The sparse‑attention breakthrough aligns it with efficiency trends in NVIDIA’s Cosmos‑Reason and Google’s Gemini 3 Pro. The substantial post‑training spend mirrors OpenAI’s shift toward continual task‑specific refinement, signaling a sector‑wide move beyond “pre‑train‑once” paradigms.

Regulatory and market dynamics

EU data‑protection authorities have deemed DeepSeek’s cross‑border transfers unlawful under GDPR, prompting a temporary service block in Italy. In the United States, congressional proposals to restrict the model on national‑security grounds are under committee review. Conversely, China’s domestic ASIC development in Hangzhou promises native support, reducing reliance on foreign GPU supply chains and lowering operating costs for domestic customers.

Emerging trends and near‑term outlook

- Reasoning‑first design – Sparse attention and extensive CoT reinforcement become de‑facto standards for next‑gen LLMs.

- Scaling post‑training budgets – A tenfold increase in refinement spend is now common across leading models.

- Regulatory partitioning – Geopolitical constraints drive hardware diversification and data‑residency modules.

- Open‑source competition – DeepSeek’s benchmark parity forces closed providers to disclose more detailed evaluation metrics.

12‑month forecast

A successor with more than 1 T parameters (V4) is slated for release within nine months, leveraging the same sparse‑attention core. Domestic ASIC adoption should cut cloud‑hosting costs by roughly 30 % for Chinese users. To satisfy GDPR and export controls, DeepSeek is expected to roll out a “Data‑Residency Module.” Multimodal video‑frame reasoning is projected within six months, aligning with industry moves toward vision‑language integration. By Q2 2026, at least three major SaaS platforms spanning China, the US, and the EU are likely to offer DeepSeek‑powered reasoning APIs.

Implications

DeepSeek’s V3.2 and V3‑2 Speciale demonstrate that open‑source models can now contend with the most capable closed systems on core reasoning benchmarks while delivering cost‑effective inference through sparse attention. Their technical trajectory, coupled with escalating regulatory pressures, will shape the high‑performance AI market, compelling both developers and policymakers to adapt to a bifurcated global ecosystem.

GPT‑5.1 Sets a New Coding Benchmark, but the Race Is Shifting

Benchmark Performance

- LiveCodeBench: 94.7 % accuracy, surpassing the previous GPT‑5 result (94 %) and the DeepSeek‑V3.2 open‑source baseline (≈84 %).

- AIME 2025 (Math): 93.1 % accuracy, just behind Gemini 3 Pro (94.6 %) while remaining ahead of most open‑source competitors.

- Code‑generation throughput: the gpt‑5‑codex variant processes roughly twice as many tokens per second as gpt‑4‑codex, maintaining a 98 % pass‑rate on unit‑test suites.

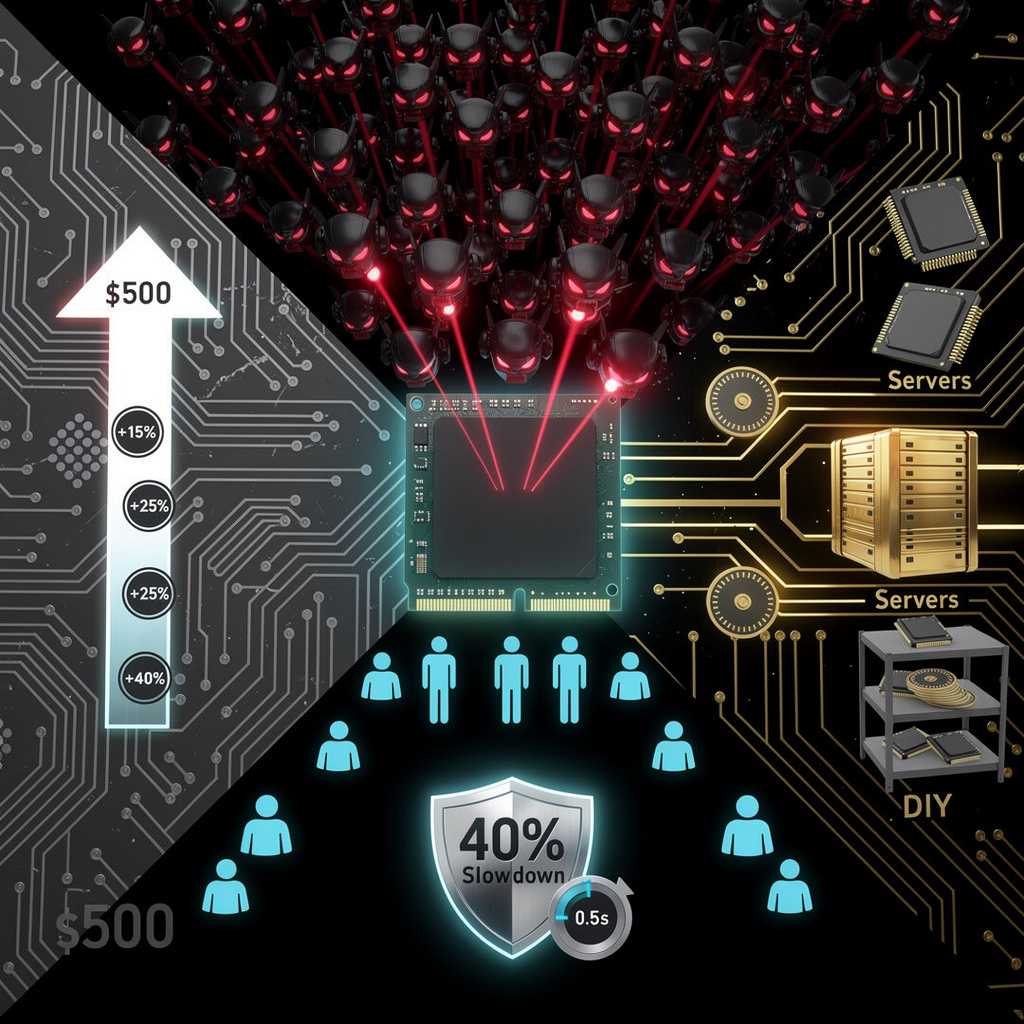

Pricing and Compute

- Input‑token cost: $15 per 1 M tokens (Sora tier).

- Output‑token cost: $10 per 1 M tokens; cached input tokens are billed at $0.125 per 1 M.

- Prompt cache duration: 24 hours.

- Inference cluster budget: ≥ $25 M for high‑throughput H200‑class GPU deployment.

- Mini variant (gpt‑5.2‑mini): $5 per 1 M tokens, targeting broader developer adoption.
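Per‑million‑token pricing is easiest to reason about with a small calculator. The sketch below is illustrative only: the `Rates` class and `request_cost` function are hypothetical names, the output and cached rates are taken from the list above, the input rate is a placeholder to be replaced with the quoted figure, and it assumes cached tokens are the part of the input that is billed at the cached rate instead of the input rate.

```python
from dataclasses import dataclass

TOKENS_PER_PRICING_UNIT = 1_000_000  # prices are quoted per 1 M tokens


@dataclass(frozen=True)
class Rates:
    """USD prices per 1 M tokens."""
    input_per_million: float
    cached_per_million: float
    output_per_million: float


def request_cost(rates, input_tokens, cached_tokens, output_tokens):
    """Estimate the USD cost of one request.

    Assumption: cached_tokens is the portion of input_tokens served from the
    prompt cache, billed at the cached rate rather than the input rate.
    """
    if cached_tokens > input_tokens:
        raise ValueError("cached_tokens cannot exceed input_tokens")
    fresh_tokens = input_tokens - cached_tokens
    total = (
        fresh_tokens * rates.input_per_million
        + cached_tokens * rates.cached_per_million
        + output_tokens * rates.output_per_million
    )
    return total / TOKENS_PER_PRICING_UNIT


ILLUSTRATIVE_INPUT_RATE = 1.00  # placeholder, not a quoted price
rates = Rates(
    input_per_million=ILLUSTRATIVE_INPUT_RATE,
    cached_per_million=0.125,  # cached rate from the list above
    output_per_million=10.0,   # output rate from the list above
)

# A request with 200 K input tokens (150 K of them cached) and 20 K output tokens.
estimate = request_cost(rates, input_tokens=200_000, cached_tokens=150_000, output_tokens=20_000)
```

Because output tokens carry the highest per‑token rate in this setup, long generations dominate the bill, while a high cache‑hit ratio mostly reduces the input side.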

Market Dynamics

- DeepSeek’s V3.2 and V3‑2 Speciale models claim parity with GPT‑5 on benchmarks while delivering approximately 50 % lower inference cost through sparse‑attention architecture.

- Toolchain integration is accelerating: Docker Model Runner and Cloudflare’s Python workflow support enable plug‑and‑play deployment of large coding models within CI/CD pipelines.

- Autonomous coding agents are emerging (e.g., OpenAGI’s Lux, MiniMax‑M2). The release of gpt‑5‑codex and gpt‑5.2‑mini aligns OpenAI with this shift toward multi‑step refactoring, debugging, and documentation generation.

- API‑key leakage on GitHub—affecting roughly 65 % of top AI firms—creates pressure for cost‑efficient tiers, prompting the introduction of the mini variant.

Emerging Patterns

- Benchmark saturation is evident; ARC‑AGI scores above 75 % suggest diminishing returns from traditional suites.

- Hardware co‑design is becoming integral: the 6 GB VRAM‑friendly FramePack architecture pairs with H200‑class GPUs, reinforcing a joint scaling trajectory.

- Pricing stratification—Sora, cached, mini—reflects modular consumption based on workload (batch inference versus interactive assistance).

Looking Ahead (Six‑Month Horizon)

- OpenAI is expected to launch a “GPT‑5.1‑Agent” API that bundles gpt‑5‑codex with tool‑use primitives (file system access, build‑system orchestration) to compete directly with autonomous coding agents.

- DeepSeek’s sparse‑attention models will likely undercut GPT‑5.1 on cost, driving OpenAI to introduce a lightweight inference mode comparable to the mini variant for edge deployment.

- New multi‑modal code‑reasoning benchmarks, incorporating documentation retrieval and execution tracing, will emerge, reducing the discriminative power of current coding scores.

Assessment

GPT‑5.1 establishes a performance ceiling for code‑generation LLMs, delivering top‑tier accuracy and doubled throughput. However, the market is pivoting toward cost efficiency, agentic workflows, and richer evaluation metrics. Sustaining leadership will require OpenAI to broaden integration capabilities and introduce cost‑optimized inference paths alongside the current performance focus.
