Linux Dominates Supercomputers, Multicloud Service Reduces AWS–Google Latency, Tom's Benchmarks Drive HPC Insights

TL;DR

- Linux variants now power all TOP500 supercomputers, cementing Linux as the default OS for HPC workloads

- New multicloud network service slashes AWS–Google latency, enabling HPC workloads to span hyperscalers with lower friction

- Data center electricity demand is projected to reach 580 TWh by 2028, driving grid upgrades to meet HPC power needs

- Immersion cooling solutions emerge for data centers, cutting energy use and enhancing HPC thermal efficiency

Linux’s Ascendance as the Default OS for High‑Performance Computing

Complete Dominance

All 500 entries in the current TOP500 list run Linux‑derived operating systems, confirming Linux as the de facto platform for supercomputing. The latest kernel, Linux 6.18 (released 30 Nov 2025), brings storage improvements relevant to data‑intensive workloads: exFAT I/O is up to 16× faster on removable media, and XFS online fsck is now enabled by default, so XFS filesystems can be checked while they remain mounted.

Data‑Driven Landscape

- TOP500 OS composition: 100 % Linux variants

- Desktop Linux market share (StatCounter, Dec 2025): stable at 3.05 %

- Cloud workload share (Stack Overflow Survey 2025): 49 % run on Linux/Unix

- Kernel 6.18 impact: exFAT I/O ↑ 16×; XFS online fsck default

- Hardware support expansion: Rust DRM drivers for ARM Mali GPUs; Intel‑pstate full‑range P‑states; Nouveau defaults to NVIDIA GSP firmware on Turing/Ampere GPUs

- Windows 10 end‑of‑support (14 Oct 2025) catalyzes migration, with 6 % of enterprises now evaluating Linux alternatives

Emerging Trends

- Kernel‑level performance gains: Scheduler revamps and improved NUMA balancing reduce latency across heterogeneous clusters.

- Broader architecture support: Rust‑based drivers for ARM Mali GPUs and added Apple M2‑series support expand Linux’s usable hardware envelope, reducing reliance on out‑of‑tree drivers and custom firmware layers.

- Ecosystem consolidation: Removal of Bcachefs from mainline signals a focus on stability, reinforcing confidence for production HPC deployments.

- Market‑driven migration: Windows 10’s lifecycle exit triggers measurable spikes in Linux downloads (Zorin OS 18 hit 1 M downloads within weeks), prompting procurement policies to favor open‑source stacks.

- Developer adoption: Survey data consistently rank Linux as the primary development platform, sustaining its suitability for containerized AI/ML pipelines.

Implications for HPC

- Standardizing Linux variants across supercomputers will reduce administrative overhead and streamline procurement guidelines.

- Accelerated adoption of ARM‑based accelerators becomes feasible as kernel‑level Rust drivers lower integration barriers, encouraging energy‑efficient mixed‑architecture nodes.

- Open‑source licensing and transparent firmware align with emerging data‑sovereignty regulations, positioning Linux as the secure baseline for regulated scientific workloads.

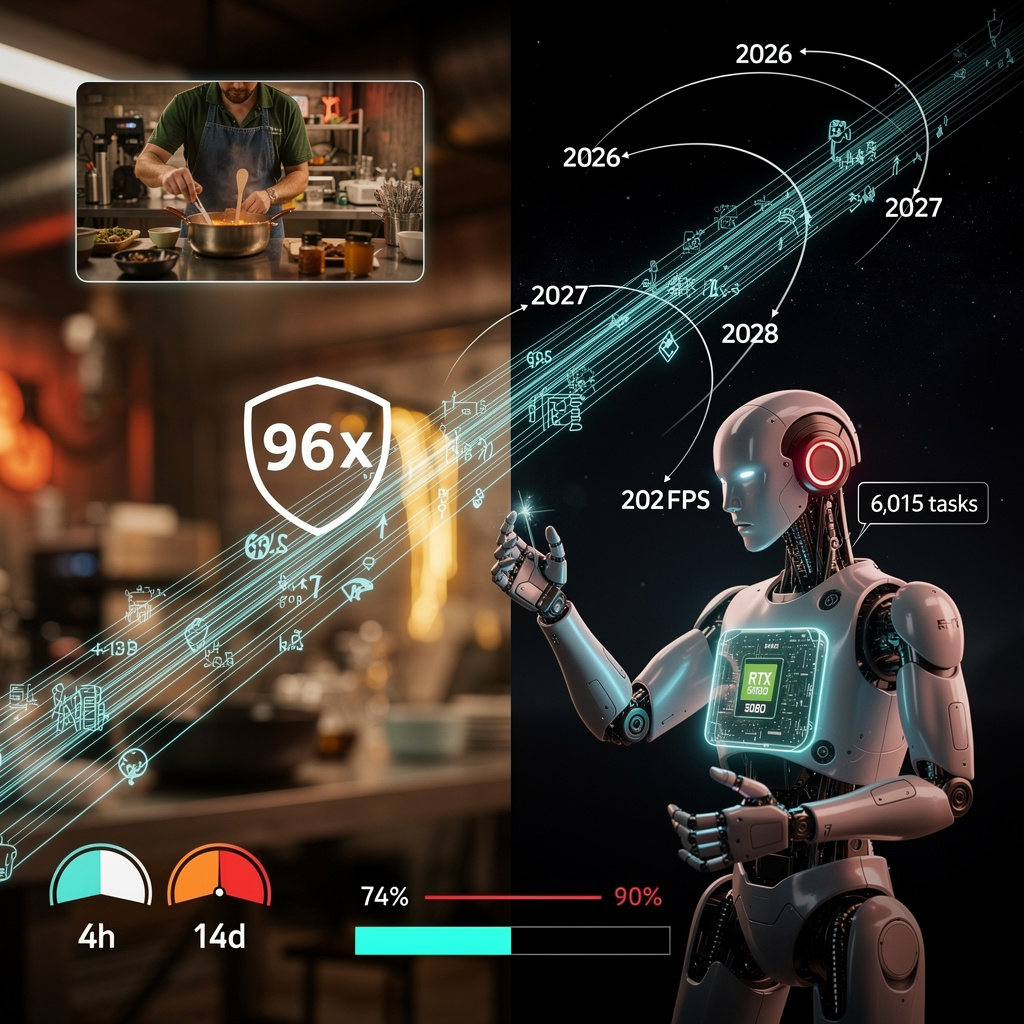

Forecast 2026‑2028

- 2026: Over 95 % of new supercomputer installations will baseline on Linux 6.20+; container runtimes (Kata, gVisor) become standard for workload isolation.

- 2027: ARM‑centric exascale prototypes achieve ≥ 30 % of peak FLOPS using Linux‑enabled heterogeneous nodes.

- 2028: Native kernel support for emerging NPU families reduces AI workload latency by ≥ 25 % versus legacy CUDA‑only stacks.

Private Multicloud Links: A Pragmatic Response to Cloud‑Outage Vulnerabilities

Why Deterministic Connectivity Matters

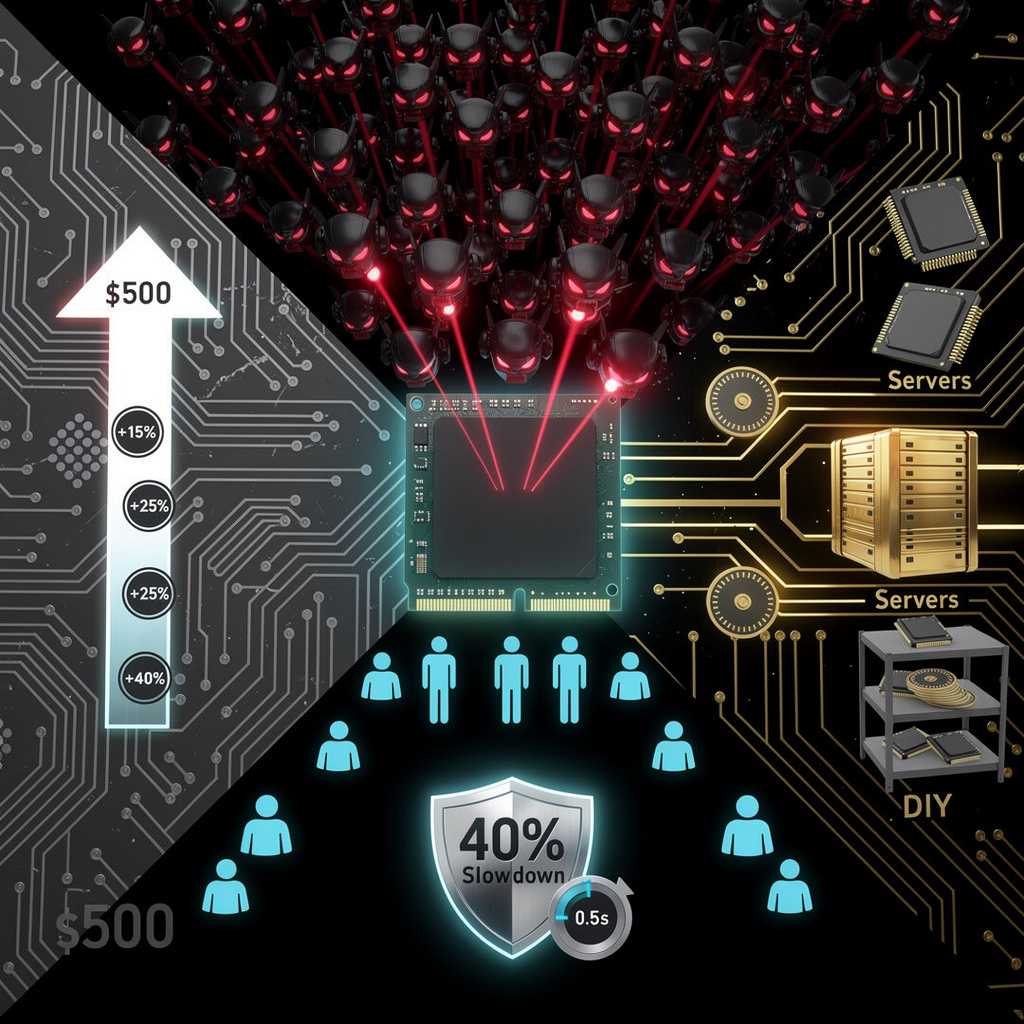

A regional AWS failure (Oct 2024) and a subsequent region‑wide AWS outage (Oct 2025) exposed the fragility of relying on public‑internet routing for inter‑cloud traffic. Parametrix estimates that the 2025 incident cost U.S. enterprises between $500 M and $650 M, a figure that makes a compelling business case for private, latency‑guaranteed links. The new AWS–Google private multicloud service addresses this risk directly with a dedicated, encrypted path that reduces round‑trip latency by up to 45 % relative to public routes in the five preview regions.

- Latency improvement: up to 45 % reduction

- Bandwidth starting at 1 Gbps, engineered for 100 Gbps at GA

- SLA: 99.99 % availability with sub‑millisecond failover
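The SLA and latency figures above translate into concrete numbers with simple arithmetic. The following is a minimal Python sketch, assuming a 365‑day year and an illustrative 20 ms public‑path round trip; that baseline is an assumption for the example, not a figure published for the service.

```python
# Back-of-the-envelope figures for the SLA and latency claims.
# Assumptions: a 365-day year, and an illustrative 20 ms public-path RTT
# (not a figure published for the service).

MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes


def allowed_downtime_minutes(availability_pct: float) -> float:
    """Maximum yearly downtime an availability SLA permits, in minutes."""
    return (1 - availability_pct / 100) * MINUTES_PER_YEAR


def reduced_rtt_ms(baseline_ms: float, reduction_pct: float) -> float:
    """Round-trip time after a percentage reduction, in milliseconds."""
    return baseline_ms * (1 - reduction_pct / 100)


if __name__ == "__main__":
    print(f"99.99% availability: {allowed_downtime_minutes(99.99):.1f} min/year of downtime")
    print(f"20 ms RTT cut by 45%: {reduced_rtt_ms(20.0, 45.0):.1f} ms")
```

A 99.99 % availability target permits roughly 52.6 minutes of downtime per year, and a 45 % reduction on the assumed 20 ms round trip brings it to about 11 ms.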

Economic Incentives for Early Adoption

AWS reported $33 B in Q3 revenue; Google Cloud posted roughly $15 B in the same quarter. The service’s launch coincided with a 1.77 % rise in AWS stock (to $233.22), while Alphabet’s price held steady, which may reflect market confidence in the joint offering without over‑valuation concerns. For enterprises, the cost of maintaining private links (including provisioning and subscription fees) can be offset by avoided outage‑related losses and by lower egress charges than those incurred on public‑internet traffic.
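The cost‑offset argument can be made explicit as a break‑even comparison. This is a minimal sketch in which every input (annual link cost, outage frequency, loss per outage, share of that loss avoided, egress volume, and per‑GB saving) is a hypothetical placeholder rather than published pricing for the service.

```python
from dataclasses import dataclass


@dataclass
class LinkEconomics:
    """Hypothetical break-even model for a private inter-cloud link.

    All fields are illustrative inputs; none are published prices.
    """
    annual_link_cost: float      # subscription + amortized provisioning, USD/year
    outages_per_year: float      # expected public-path disruptions per year
    loss_per_outage: float       # business loss per disruption, USD
    fraction_avoided: float      # share of that loss the private link prevents (0..1)
    egress_gb_per_year: float    # inter-cloud traffic moved to the private link, GB/year
    egress_saving_per_gb: float  # public minus private per-GB charge, USD

    def annual_benefit(self) -> float:
        """Avoided outage losses plus egress savings, USD/year."""
        avoided_losses = self.outages_per_year * self.loss_per_outage * self.fraction_avoided
        egress_savings = self.egress_gb_per_year * self.egress_saving_per_gb
        return avoided_losses + egress_savings

    def net_annual_value(self) -> float:
        """Positive when the benefit outweighs the link's annual cost."""
        return self.annual_benefit() - self.annual_link_cost


if __name__ == "__main__":
    example = LinkEconomics(
        annual_link_cost=120_000,
        outages_per_year=1,
        loss_per_outage=250_000,
        fraction_avoided=0.5,
        egress_gb_per_year=500_000,
        egress_saving_per_gb=0.02,
    )
    print(f"benefit: ${example.annual_benefit():,.0f}")
    print(f"net value: ${example.net_annual_value():,.0f}")
```

With these illustrative inputs the annual benefit is $135,000 and the net value $15,000. A positive net value is the condition under which the offset claim holds, and the model makes it easy to see how sensitive that conclusion is to the assumed outage frequency and loss.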

Targeted Use‑Cases Drive Value

The service aligns with workloads that cannot tolerate latency spikes or manual routing adjustments:

- Enterprise SaaS integrations (e.g., Salesforce, Vertex AI) requiring near‑real‑time data exchange

- Managed Service Providers offering private peering as a differentiator

- High‑Performance Computing (HPC) research and financial risk modeling where deterministic bandwidth is essential

- Legacy migration projects that can keep existing VPC architectures unchanged

Technical Foundations Enable Seamless Integration

An open API specification published on GitHub lets orchestration tools automate provisioning. The routing architecture deploys four independent encrypted paths per region, with latency‑aware path selection every 10 ms. Compatibility with AWS Transit Gateway, Cloud WAN, and Google Cloud Cross‑Cloud Interconnect reduces the effort required for existing customers to adopt the service.
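Latency‑aware selection across four encrypted paths can be illustrated with a small selector that smooths probe results and picks the fastest healthy path on each interval. This is a hypothetical Python sketch, not the service's algorithm: the EWMA smoothing factor, the 0.5 ms hysteresis margin, the path names, and the rule that a timed‑out probe marks a path unhealthy are all assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class PathSelector:
    """Pick the lowest-latency healthy path from RTT probes.

    Hypothetical sketch: alpha, hysteresis_ms and the health rule are
    illustrative choices, not parameters of the real service.
    """
    alpha: float = 0.3          # EWMA weight given to the newest probe
    hysteresis_ms: float = 0.5  # switch only if another path is this much faster
    smoothed: Dict[str, float] = field(default_factory=dict)
    healthy: Dict[str, bool] = field(default_factory=dict)
    active: Optional[str] = None

    def record_probe(self, path: str, rtt_ms: Optional[float]) -> None:
        """Fold one probe result in; None means the probe timed out."""
        if rtt_ms is None:
            self.healthy[path] = False
            return
        self.healthy[path] = True
        prev = self.smoothed.get(path)
        if prev is None:
            self.smoothed[path] = rtt_ms
        else:
            self.smoothed[path] = self.alpha * rtt_ms + (1 - self.alpha) * prev

    def select(self) -> Optional[str]:
        """Call once per selection interval (10 ms in the description above)."""
        candidates = {p: r for p, r in self.smoothed.items() if self.healthy.get(p)}
        if not candidates:
            self.active = None
            return None
        best = min(candidates, key=lambda p: candidates[p])
        # Fail over immediately if the active path is gone; otherwise
        # switch only when the best path beats it by the hysteresis margin.
        if self.active not in candidates or (
            candidates[best] + self.hysteresis_ms < candidates[self.active]
        ):
            self.active = best
        return self.active


if __name__ == "__main__":
    selector = PathSelector()
    for path, rtt in [("p1", 12.0), ("p2", 9.5), ("p3", 10.1), ("p4", 15.0)]:
        selector.record_probe(path, rtt)
    print(selector.select())          # fastest healthy path
    selector.record_probe("p2", None)  # p2 probe times out
    print(selector.select())          # fails over to the next-fastest path
```

The hysteresis margin keeps traffic from flapping between paths whose latencies differ only by noise, while a timed‑out probe on the active path triggers an immediate switch, which is one way fast failover could be approached.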

Future Outlook: Towards a Tri‑Cloud Fabric

Azure’s planned integration in 2026 promises to extend private connectivity to three hyperscalers, potentially raising average private inter‑cloud bandwidth per enterprise to >150 Gbps. Forecasts suggest that within a year, 12‑15 % of inter‑cloud traffic will shift to private links, and cross‑cloud HPC job submissions could grow by 30 % by Q4 2026. Regulatory pressures on data sovereignty will likely increase SLA clauses related to cross‑border handling by 5‑7 %. The convergence of technical capability, economic rationale, and emerging demand positions private multicloud networking not as a niche offering but as a baseline infrastructure component for latency‑sensitive, high‑throughput applications.
