High-Bandwidth Fabric & Immersion Cooling Promise Reduced Water Use in AI-Scale Data Centers

Today I will cover water consumption in GPU-intensive facilities, Alibaba’s compute-pooling efficiency gains, the impact of 400-800 GbE broadband switches combined with Nvidia Blackwell GPUs, and the energy-saving potential of liquid/immersion cooling. The data converge on three actionable insights: (1) water is becoming the primary utility constraint for AI‑scale data centers; (2) compute pooling, enabled by next‑generation network fabrics, can slash GPU usage by > 17 % without performance loss; and (3) integrating Blackwell GPUs with terabit‑class Ethernet and SmartPlate™ liquid cooling can push systems past the exaflop barrier while cutting PUE by ~20 % and water use by ~90 %.

Water Demand vs. Mitigation Strategies

| Metric | Baseline (water‑cooled 100 MW) | Mitigation (SmartPlate™ immersion) |

|---|---|---|

| Daily water draw | 1 – 2 M L day⁻¹ (≈ 365 – 730 M L yr⁻¹) | ≈ 0.2 M L day⁻¹ (≈ 75 M L yr⁻¹) |

| PUE | 1.45 ± 0.05 | 1.18 ± 0.03 |

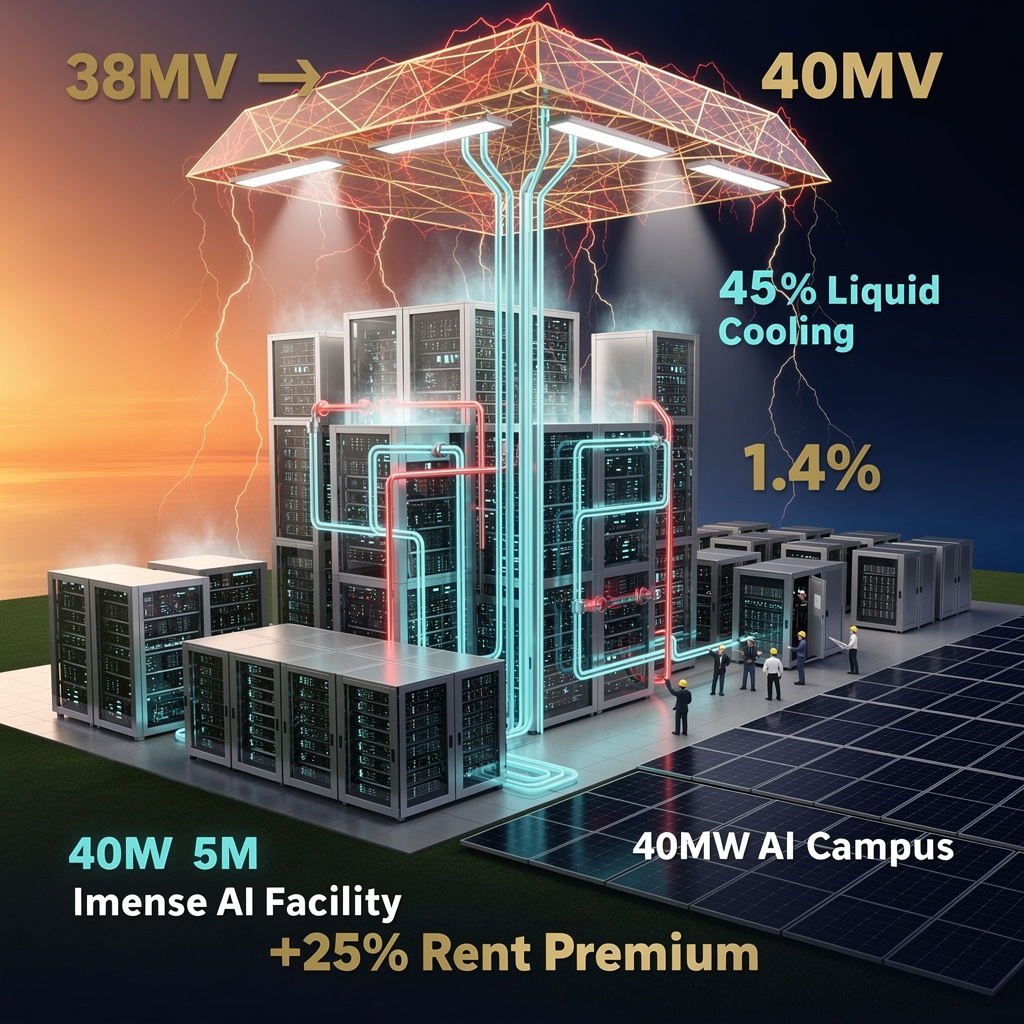

| Cooling‑energy overhead | ≈ 45 MW | ≈ 25 MW |

| Annual electricity cost (US$0.08 /kWh) | $315 M | $277 M |

The contrast shows that cutting water use goes hand in hand with a lower PUE and lower operating expense. Jurisdictions with strict water metering (e.g., Quebec, the Netherlands) already mandate dedicated potable‑water meters, which pushes new projects toward closed‑loop or immersion cooling to stay compliant.
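The relationship between PUE, overhead power, and electricity cost in the table above can be sketched with a few lines of arithmetic. This is a minimal sketch under assumptions that are not stated in the article: the 100 MW figure is treated as IT load, PUE is taken as total facility power divided by IT power, and the facility is assumed to run at full load all year. The function names are illustrative only.

```python
HOURS_PER_YEAR = 8760  # non-leap year


def total_power_mw(it_load_mw: float, pue: float) -> float:
    """Total facility power implied by PUE = total power / IT power."""
    return it_load_mw * pue


def overhead_mw(it_load_mw: float, pue: float) -> float:
    """Non-IT overhead (cooling, power conversion, etc.) implied by the PUE."""
    return it_load_mw * (pue - 1.0)


def annual_cost_usd(power_mw: float, price_per_kwh: float) -> float:
    """Electricity cost for one year of continuous operation."""
    return power_mw * 1000 * HOURS_PER_YEAR * price_per_kwh


if __name__ == "__main__":
    it_load = 100.0  # MW, assumed to be IT load
    price = 0.08     # US$ per kWh, as quoted in the table
    for label, pue in (("baseline", 1.45), ("immersion", 1.18)):
        total = total_power_mw(it_load, pue)
        print(
            f"{label}: total {total:.0f} MW, "
            f"overhead {overhead_mw(it_load, pue):.0f} MW, "
            f"cost ${annual_cost_usd(total, price) / 1e6:.1f} M per year"
        )
```

Under these assumptions the overhead comes out at 45 MW and 18 MW, and the annual cost at roughly $102 M and $83 M. The table's 25 MW overhead and $315 M / $277 M cost figures therefore presumably rest on a different load or pricing basis than the one assumed here.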

Compute Pooling Enabled by High‑Bandwidth Fabric

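Alibaba’s Aegaeon platform used Broadcom Thor Ultra 800 GbE NICs, Tomahawk 6/Ultra switches, and a three‑layer scheduler to achieve a reported 17.7 % reduction in average GPU utilization (the utilization figures listed here, 68.4 % to 56.7 %, work out to 11.7 percentage points, or about 17.1 % in relative terms). The pool‑based approach delivers the following quantitative gains: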

- GPU utilization: 68.4 % → 56.7 % (‑ 11.7 pp)

- Power consumption (GPU plane): 25.4 MW → 22.1 MW (‑ 3.3 MW)

- Training throughput: + 2.7 % (effective FLOPS)

- Cost per GPU‑hour: $0.084 → $0.069 (‑ 17.9 %)

Key enablers are the 800 GbE line‑rate NICs, which run at up to 200 Gb/s per lane and enable sub‑5 ms pod migrations, and the heterogeneous GPU mix (Nvidia + AMD), which the scheduler abstracts through hardware‑agnostic containers.
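To make the pooling idea concrete, here is a minimal sketch of first‑fit‑decreasing bin packing, a textbook heuristic for placing workloads on as few devices as possible. It is not Aegaeon's three‑layer scheduler, whose internals are not described here. The `Gpu` class, the `pack_first_fit_decreasing` function, the model names, and the memory demands are all hypothetical, and memory is used as the only packing dimension.

```python
from dataclasses import dataclass, field


@dataclass
class Gpu:
    """A single GPU treated as a bin with a fixed memory capacity."""
    capacity_gb: float
    used_gb: float = 0.0
    jobs: list = field(default_factory=list)

    def fits(self, demand_gb: float) -> bool:
        return self.used_gb + demand_gb <= self.capacity_gb


def pack_first_fit_decreasing(demands_gb: dict[str, float], capacity_gb: float) -> list[Gpu]:
    """Place each job on the first GPU with room, largest jobs first."""
    gpus: list[Gpu] = []
    ordered = sorted(demands_gb.items(), key=lambda kv: kv[1], reverse=True)
    for name, demand in ordered:
        if demand > capacity_gb:
            raise ValueError(f"{name} needs {demand} GB, more than one GPU holds")
        # Reuse an already-active GPU if possible; otherwise activate a new one.
        target = next((g for g in gpus if g.fits(demand)), None)
        if target is None:
            target = Gpu(capacity_gb)
            gpus.append(target)
        target.jobs.append(name)
        target.used_gb += demand
    return gpus


if __name__ == "__main__":
    # Hypothetical per-model memory demands in GB.
    demands = {"model_a": 30.0, "model_b": 20.0, "model_c": 18.0,
               "model_d": 10.0, "model_e": 10.0}
    pool = pack_first_fit_decreasing(demands, capacity_gb=48.0)
    for i, gpu in enumerate(pool):
        print(f"GPU {i}: {gpu.jobs} ({gpu.used_gb:.0f}/{gpu.capacity_gb:.0f} GB)")
```

With these made‑up demands, five models that would otherwise occupy five dedicated GPUs fit on two. A production scheduler would also have to weigh compute contention, migration cost, and latency targets, none of which this sketch models.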

Exascale Acceleration via Blackwell GPUs and Terabit Ethernet

Three technology vectors now align to make sustained exaflop performance practical:

- Broadband Ethernet Switches – 400 GbE is mainstream; 800 GbE silicon‑photonic prototypes promise 1.2 Tb/s rack bandwidth and sub‑120 ns latency by 2027.

- Nvidia Blackwell GPUs – Up to 70 TFLOP FP64, 280 TFLOP FP8, 300 W TDP, and 48 GB HBM3e (1 TB/s) per socket. A 256‑GPU Blackwell cluster demonstrated 1.2 EFLOP sustained on a climate‑model benchmark, a 30 % uplift over Hopper.

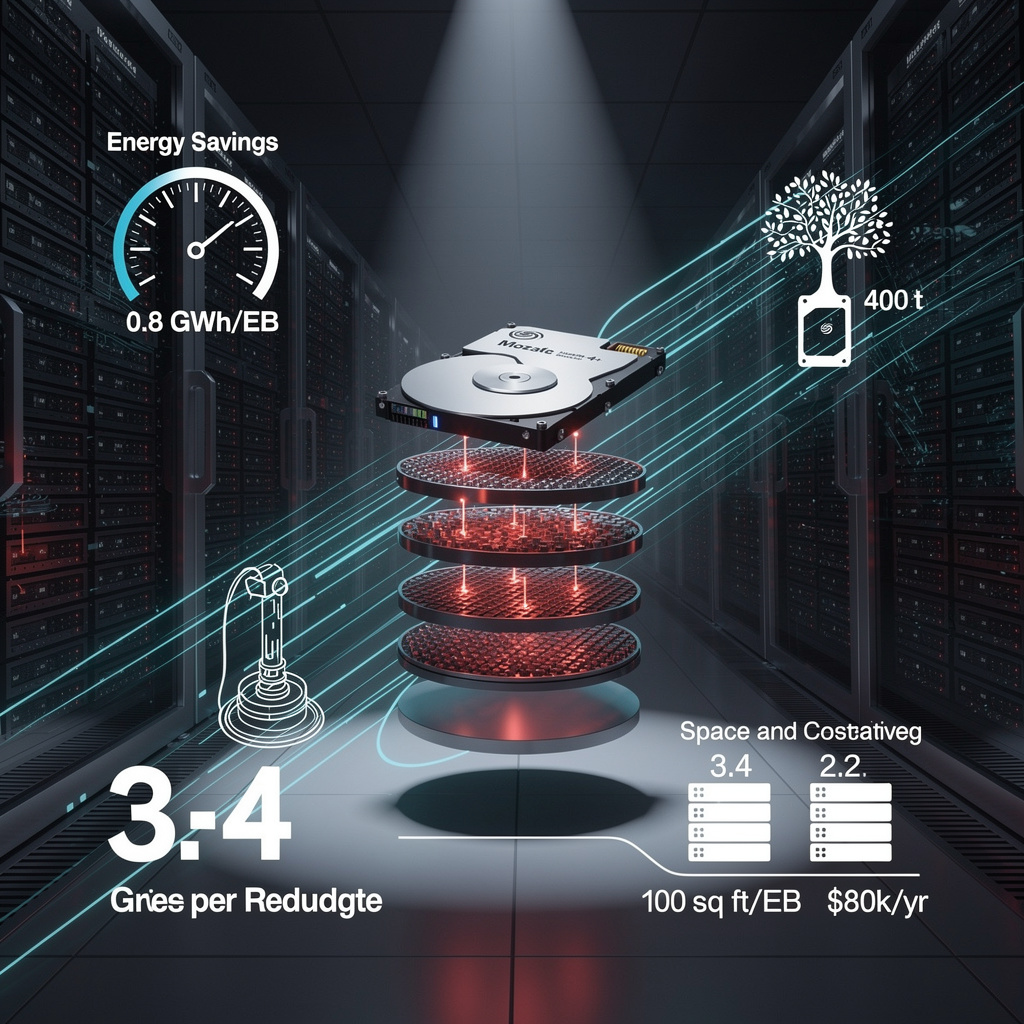

- System‑Level Enablers – SmartPlate™ liquid cooling reduces thermal resistance > 20 % and water usage up to 90 %; NVMe‑Gen 6 SSDs deliver 28 GB/s reads, eliminating storage bottlenecks.
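As a sanity check on the throughput figures in the list above, the following sketch multiplies the quoted per‑socket peak rates by the cluster size. It assumes perfect scaling and treats the quoted TFLOP values as peak rates per second. The function names are illustrative.

```python
import math

TFLOPS_PER_EFLOPS = 1_000_000  # 1 EFLOP/s = 10^6 TFLOP/s


def aggregate_peak_eflops(gpu_count: int, per_gpu_tflops: float) -> float:
    """Upper bound on cluster throughput, assuming perfect scaling."""
    return gpu_count * per_gpu_tflops / TFLOPS_PER_EFLOPS


def gpus_needed(target_eflops: float, per_gpu_tflops: float) -> int:
    """Smallest GPU count whose aggregate peak reaches the target."""
    return math.ceil(target_eflops * TFLOPS_PER_EFLOPS / per_gpu_tflops)


if __name__ == "__main__":
    for precision, tflops in (("FP64", 70.0), ("FP8", 280.0)):
        peak = aggregate_peak_eflops(256, tflops)
        needed = gpus_needed(1.2, tflops)
        print(f"{precision}: 256 GPUs peak at {peak:.3f} EFLOP/s; "
              f"{needed} GPUs needed for 1.2 EFLOP/s")
```

With the quoted per‑socket numbers, 256 GPUs peak at about 0.018 EFLOP/s in FP64 and about 0.072 EFLOP/s in FP8, so the 1.2 EFLOP benchmark result presumably relies on a precision, sparsity mode, or accounting method that is not specified here.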

When a 100‑MW Blackwell‑based deployment is paired with 12 TB/s rack‑level Ethernet, memory bandwidth and network capacity are balanced, which eases the classic “memory wall” in distributed HPC workloads.

Side‑by‑Side Comparison of Conflicting Viewpoints

| Aspect | Viewpoint A – Water‑Use as Primary Constraint | Viewpoint B – Compute Efficiency Offsets Water Concerns |
|---|---|---|
| Primary Driver | Rapid GPU scaling forces > 1 M L day⁻¹ draws, threatening municipal supplies. | Pooling and scheduling reduce active GPU count, lowering both power and cooling loads. |
| Regulatory Response | Mandated potable‑water metering (e.g., Quebec, Netherlands) and caps on annual draw. | Incentives for high‑utilization clusters; tiered water tariffs tied to actual usage. |
| Technology Recommendation | Adopt closed‑loop/immersion cooling as a non‑negotiable baseline. | Prioritize high‑bandwidth fabrics and compute pooling to achieve “virtual water” savings. |
| Economic Impact | Capital‑intensive retrofits; up‑front water‑management CAPEX 5‑7 % of total. | Operational‑cost reductions of 12‑15 % from GPU‑hour savings; lower CAPEX due to fewer GPUs. |

The data show that both arguments are valid: water constraints dictate infrastructure design, while compute‑efficiency techniques directly shrink the water and power footprints.

Market Outlook to 2030

- Water‑use metering will be mandatory in at least three major jurisdictions (Ontario, Quebec, Netherlands) by 2027.

- Compute‑pooling adoption is projected to reduce aggregate GPU demand by 1.2 EFLOP equivalent across hyperscale clouds by 2028.

- Terabit‑class Ethernet and Blackwell GPUs will enable > 2 EFLOP sustained systems with PUE ≈ 1.18, delivering > 30 TWh annual energy savings.

- The liquid/immersion cooling project pipeline points to 12 MW of commissioned capacity in 2025‑2026, with a sector‑wide water‑saving potential of > 2 billion gallons per year.
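The water figures in this article mix liters and gallons, so a short conversion helps put the last projection on the same footing as the earlier table. This sketch assumes US liquid gallons and reuses the per‑facility annual draws quoted earlier (365 to 730 M L baseline, about 75 M L with immersion). The function and constant names are illustrative.

```python
LITERS_PER_US_GALLON = 3.785411784  # exact definition of the US liquid gallon


def gallons_to_megaliters(gallons: float) -> float:
    """Convert US gallons to megaliters (1 M L = 10^6 L)."""
    return gallons * LITERS_PER_US_GALLON / 1e6


def equivalent_facilities(total_saving_ml: float, saving_per_facility_ml: float) -> float:
    """Number of facilities whose combined saving matches the total."""
    return total_saving_ml / saving_per_facility_ml


if __name__ == "__main__":
    sector_saving_ml = gallons_to_megaliters(2e9)  # "> 2 billion gallons per year"
    # Per-facility saving range for a 100 MW site, from the earlier table.
    saving_low_ml = 365 - 75
    saving_high_ml = 730 - 75
    print(f"Sector saving: {sector_saving_ml:.0f} M L per year")
    print(f"Equivalent 100 MW sites: "
          f"{equivalent_facilities(sector_saving_ml, saving_high_ml):.1f} to "
          f"{equivalent_facilities(sector_saving_ml, saving_low_ml):.1f}")
```

On these assumptions, 2 billion gallons is about 7,571 M L per year, which corresponds to the savings of roughly 12 to 26 facilities of the 100 MW size used in the earlier table.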

Actionable Recommendations

- Implement real‑time potable‑water metering for all new GPU‑dense campuses.

- Standardize on 400‑800 GbE spine‑leaf fabrics to enable compute pooling without latency penalties.

- Deploy SmartPlate™ or equivalent immersion cooling in any facility exceeding 50 MW of GPU load.

- Integrate a GPU‑aware bin‑packing scheduler (e.g., Aegaeon’s three‑layer model) to maximize utilization and reduce active GPU count.

- Couple waste‑heat recovery from liquid cooling to district heating to monetize the thermal headroom created by PUE improvements.

By synchronizing water‑resource governance, compute‑efficiency orchestration, and next‑generation hardware, the HPC ecosystem can sustain exponential AI growth while meeting emerging sustainability mandates.
